Firewall priority queues setting

Hi Experts,

I have a customer PoC with a high-latency issue.

The users access files over CIFS at the same time (usually at the start of the workday). When firewall throughput and connections rise (around 1.5 Gbps / 50K connections), latency increases from 2 ms to more than 40 ms.

I would like to know whether this setting could improve our situation.

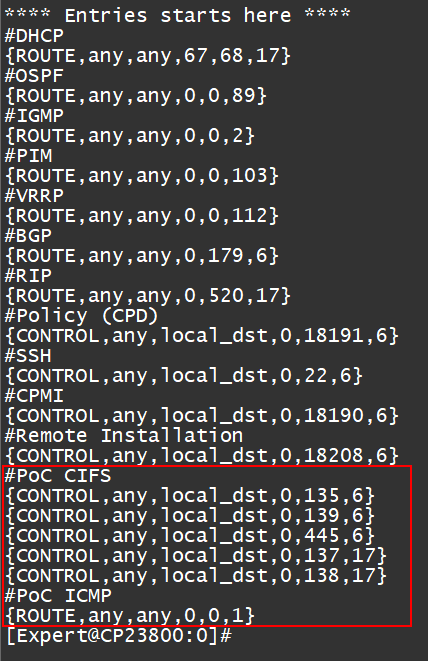

I tried to enable this feature on R80.10. Could someone please verify that the format is correct?

Thanks for your help!

My questions are summarized below. Please share your experience with high-latency environments.

Many thanks!

1) As sk105762 mentions, PrioQ is activated only when the CPU is overloaded.

Does that mean the PrioQ mechanism is activated as soon as even one CPU core reaches 100%?

What is the exact condition that triggers this feature?

2) Can this feature reduce network latency in scenarios such as CIFS file sharing (LAN access)?

3) Could someone verify whether my format is correct or needs to be modified?

Jarvis.

16 Replies

Could sim affinity be a solution?

Jerry

Hi Jerry

I use the default settings for SNDs (4 cores with SMT).

CPU load is around 30% to 40% during work hours.

If the CIFS access is to internal resources only, you could exclude this traffic from Threat Prevention (TP)...

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

Hi Günther

I only use the FW blade.

You can try moving the rule that allows this traffic to the top of the rulebase, so that it is matched by SecureXL and accelerated.

Kind regards,

Jozko Mrkvicka

Hi Jozko,

Thanks for your advice, I will try it.

Modifying the Firewall Priority Queue settings is not the proper way to accomplish what you are trying to do; this feature is only intended to ensure that critical firewall control traffic is not inordinately delayed by heavy user traffic flows when a Firewall Worker core reaches 100% utilization. While Priority Queues are enabled by default in R80.10, they do not start actively prioritizing traffic until a Firewall Worker reaches 100% utilization, and only for that overloaded Firewall Worker. All other non-overloaded Firewall Workers are still doing FIFO.
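As a rough illustration of the per-worker trigger described above, here is a toy Python model. The function name and the utilization dictionary are hypothetical, and this is not how Check Point implements the feature; it only sketches the idea that a worker stays on FIFO until its own utilization reaches 100%.

```python
def queue_modes(worker_utilization):
    """Return the queueing mode of each Firewall Worker, keyed by worker id.

    Toy model only: a worker switches to priority queueing when its own
    utilization reaches 100%; every other worker keeps doing FIFO.
    """
    return {
        worker: "priority" if utilization >= 100 else "fifo"
        for worker, utilization in worker_utilization.items()
    }


# Hypothetical utilization percentages for three Firewall Workers.
print(queue_modes({"fw_0": 100, "fw_1": 40, "fw_2": 35}))
```

In this model only `fw_0` would prioritize traffic; at the 30% to 40% load reported earlier in the thread, no worker would.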

You probably just need to tune the firewall to reduce the latency. Please provide the output of the following commands and I can advise further:

enabled_blades

fwaccel stat

fwaccel stats -s

grep -c ^processor /proc/cpuinfo

/sbin/cpuinfo

fw ctl affinity -l -r

netstat -ni

fw ctl multik stat

The most likely cause of high latency during busy times is the APCL/URLF policy being forced to inspect high-speed LAN-to-LAN traffic because of an inappropriate "Any" in the Destination column of the APCL/URLF policy layer, or because the Internet object is not being calculated correctly due to incomplete or inaccurate firewall topology definitions.

--

Second Edition of my "Max Power" Firewall Book

Now Available at http://www.maxpowerfirewalls.com

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

[Expert@CP15600:0]# /sbin/cpuinfo

HyperThreading=enabled

[Expert@CP15600:0]# enabled_blades

fw SSL_INSPECT

[Expert@CP15600:0]# fwaccel stats -s

Accelerated conns/Total conns : 16140/17125 (94%)

Delayed conns/(Accelerated conns + PXL conns) : 2871/16837 (17%)

Accelerated pkts/Total pkts : 28029939/35118855 (79%)

F2Fed pkts/Total pkts : 411002/35118855 (1%)

PXL pkts/Total pkts : 6677914/35118855 (19%)

QXL pkts/Total pkts : 0/35118855 (0%)

You have 16 physical cores (32 via SMT) and only 4 SND/IRQ cores, which is the default. As shown above, roughly 80% of the traffic crossing the firewall is being fully accelerated by SecureXL thanks to the limited number of blades enabled, which is great. However, that 80% can only be processed by the 4 SND/IRQ cores, which is almost certainly causing the bottleneck you are seeing.
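To make the packet-path split easier to see, the sketch below parses the `fwaccel stats -s` lines posted above and recomputes each share from the raw counters. The function name `path_shares` and the regular expression are my own; the sample text is copied from the output in this thread.

```python
import re

# Output copied from the `fwaccel stats -s` post above.
SAMPLE = """\
Accelerated conns/Total conns : 16140/17125 (94%)
Delayed conns/(Accelerated conns + PXL conns) : 2871/16837 (17%)
Accelerated pkts/Total pkts : 28029939/35118855 (79%)
F2Fed pkts/Total pkts : 411002/35118855 (1%)
PXL pkts/Total pkts : 6677914/35118855 (19%)
QXL pkts/Total pkts : 0/35118855 (0%)
"""

# "<label> : <numerator>/<denominator> (..%)"
LINE = re.compile(r"^(?P<label>.+?)\s*:\s*(?P<num>\d+)/(?P<den>\d+)")


def path_shares(text):
    """Map each counter label to numerator/denominator as a percentage."""
    shares = {}
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if not match or int(match["den"]) == 0:
            continue  # skip lines that are not counters
        shares[match["label"]] = 100.0 * int(match["num"]) / int(match["den"])
    return shares


for label, percent in path_shares(SAMPLE).items():
    print(f"{label}: {percent:.1f}%")
```

The accelerated, F2F and PXL packet counters add up to the total, so these three shares describe how the packet load is divided between the SND/IRQ cores and the Firewall Workers.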

I'd suggest disabling SMT/Hyperthreading via cpconfig which will drop you back to 16 physical cores, then assigning 10 CoreXL kernel instances via cpconfig which will allocate 6 discrete (non-hyperthreaded) SND/IRQ cores. Hyperthreading is actually hurting your performance in this particular situation; Multi-Queue is probably not necessary either and imposes additional overhead, but leave it enabled for now.
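The core arithmetic in this suggestion can be written out as a tiny sketch. It assumes a simplified model in which every core not assigned to a CoreXL kernel instance is left for SND/IRQ duty; the function name is hypothetical.

```python
def snd_cores(total_cores, corexl_instances):
    """Cores left for SND/IRQ after assigning CoreXL kernel instances.

    Simplified model: one core per CoreXL instance, and every remaining
    core handles SND/IRQ work.
    """
    if not 0 < corexl_instances < total_cores:
        raise ValueError("need at least one worker core and one SND/IRQ core")
    return total_cores - corexl_instances


# 16 physical cores with SMT disabled and 10 CoreXL instances.
print(snd_cores(16, 10))  # 6
```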

Other than that everything else looks good.

Hi Timothy,

Thanks for your analysis.

Two days ago, on another appliance, I set up 6 cores for 2 physical NICs (012, 345), enabled MQ, and disabled SMT.

I will increase the SND cores from 6 to 10, as you suggest.

Is increasing the rx-ringsize recommended, or should I keep the default value?

I will replace the appliance next week and hope everything goes well.

No need to adjust the ring buffer size, as that is a last resort and you have zero RX-DRPs anyway. Indiscriminately cranking up the ring buffer size can cause a nasty performance-draining effect known as Bufferbloat between interfaces with widely disparate bandwidth available, at least with the typical FIFO processing of interface ring buffers.
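For anyone who wants to check RX-DRP themselves, here is a minimal sketch that reads `netstat -ni` output and reports RX-DRP as a percentage of RX-OK per interface. The sample text is made up for illustration (it is not from the gateway in this thread), and the function name is hypothetical. The parser looks up columns by header name, so it assumes the usual `Iface ... RX-OK ... RX-DRP` header row is present.

```python
# Made-up sample in the usual `netstat -ni` layout, for illustration only.
SAMPLE = """\
Kernel Interface table
Iface   MTU Met   RX-OK RX-ERR RX-DRP RX-OVR   TX-OK TX-ERR TX-DRP TX-OVR Flg
eth1   1500   0 1000000      0      0      0  900000      0      0      0 BMRU
eth2   1500   0 2000000      0    500      0 1800000      0      0      0 BMRU
"""


def rx_drop_percent(netstat_output):
    """Return {interface: RX-DRP as a percentage of RX-OK}."""
    lines = netstat_output.strip().splitlines()
    # Locate the header row so columns are looked up by name, not position.
    header_index = next(
        i for i, line in enumerate(lines) if line.split()[:1] == ["Iface"]
    )
    columns = lines[header_index].split()
    result = {}
    for line in lines[header_index + 1:]:
        fields = line.split()
        if len(fields) != len(columns):
            continue  # skip rows that do not match the header layout
        row = dict(zip(columns, fields))
        rx_ok, rx_drp = int(row["RX-OK"]), int(row["RX-DRP"])
        result[row["Iface"]] = 100.0 * rx_drp / rx_ok if rx_ok else 0.0
    return result


for iface, percent in rx_drop_percent(SAMPLE).items():
    print(f"{iface}: {percent:.3f}% RX-DRP")
```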

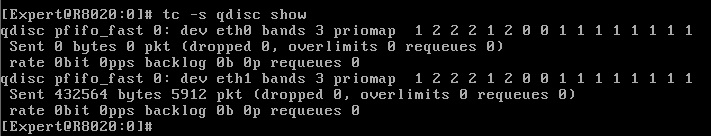

Some Active Queue Management (AQM) strategies such as Controlled Delay (CoDel) can be very effective in mitigating the effects of Bufferbloat on Linux systems with the 3.x kernel.

However for the first time publicly I present to all of you this very exciting (at least to me) screenshot from R80.20EA in my lab:

CoDel appears to be present in the updated R80.20EA security gateway kernel, and it is enabled by default as shown by the tc -s qdisc show command! Looks like my days of issuing dire warnings about increasing firewall ring buffer sizes are numbered...

Hi Timothy,

Excuse me, I have another question.

In this case, if PXL pkts/Total pkts is 80%, how can I tune it?

By the way, thanks for the information. I have learned a lot from it.

I assume this is a different gateway you're asking about?

PXL is not bad per se, but it does mean that traffic is being subjected to blades like App Control, URL Filtering, IPS, etc.

If you were trying to get a little more performance out of a gateway, you might exclude some traffic from these blades through configuration.

Hi Tim,

So you are saying that increasing the RX/TX ring sizes to the maximum (4096) can cause serious issues, even if there is a valid reason for increasing them (dropped packets)?

We recently increased the RX/TX ring sizes based on a CP recommendation.

Kind regards,

Jozko Mrkvicka

There is a common mantra that packet loss should be avoided at all cost, yet TCP's congestion control algorithm relies on timely dropping of packets when the network is overloaded so that all the different TCP-based connections trying to utilize the congested network link can settle at a stable transfer speed that is as fast as the network will allow. Increasing ring buffer sizes can increase jitter to the point it incurs a kind of "network choppiness" that causes all the TCP streams traversing the firewall to constantly hunt for a stable transfer speed, and they all end up backing off far more than they should in these types of situations:

1) Step down from a higher-speed to a lower-speed interface (e.g. 10Gbps to 1Gbps) where the lower-speed link is fully utilized

2) Traffic from multiple interfaces all trying to pile into a single fully-utilized interface

3) Traffic from two equal-speed interfaces (e.g. 1Gbps) running with high utilization, yet upstream of one of the interfaces there is substantially less bandwidth, like a 100Mbps Internet connection

Note that two equal-speed interfaces cannot have these issues, assuming the bandwidth available upstream of the two interfaces truly matches their link speed. In my book I tell the sordid tale of "Screamer" and "Slowpoke", two TCP-based streams competing for a fully-utilized firewall interface that has had its ring buffers increased to the maximum size, thus causing jitter to increase by 16X. Increasing ring buffer sizes is a last resort; generally, more SND/IRQ cores should be allocated first, and then perhaps Multi-Queue used. I'd suggest reading the Wikipedia article about Bufferbloat.
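A back-of-the-envelope sketch of why a larger ring adds delay: it computes how long a completely full ring takes to drain onto a fully-utilized link. The assumptions are mine, not from the post: full-size 1500-byte packets, one packet per descriptor, and a default ring of 256 descriptors (inferred only from the 16X figure against the 4096 maximum mentioned in this thread).

```python
def drain_time_ms(descriptors, packet_bytes, link_bps):
    """Time in milliseconds to transmit a full ring of equal-size packets."""
    return descriptors * packet_bytes * 8 / link_bps * 1000


DEFAULT_RING = 256      # assumption: inferred from the 16X figure, not stated
MAX_RING = 4096         # maximum ring size mentioned in the thread
PACKET_BYTES = 1500     # assumption: full-size Ethernet payloads
LINK_BPS = 1_000_000_000  # 1Gbps link, as in scenario 1

for ring in (DEFAULT_RING, MAX_RING):
    delay = drain_time_ms(ring, PACKET_BYTES, LINK_BPS)
    print(f"{ring} descriptors: up to {delay:.3f} ms of queueing delay")
```

Under these assumptions the worst-case queueing delay grows in direct proportion to the ring size, so a 16-times larger ring can hold 16 times as much delay before the first packet is dropped.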

Thank you for the excellent explanation!

Time to buy your book.

Kind regards,

Jozko Mrkvicka
