
Automatic TCP MSS Adjustment

Hi Everybody,

I'm looking for input on something that I recently ran across. Our organization runs a lot of VPNs, both with external agencies and internally. Because all of our internal communication runs over VPNs, we have had TCP MSS clamping configured for a few years now to combat fragmentation and slowdowns. According to the documentation we read, to do this in GuiDBEdit you have to set the "fw_clamp_tcp_mss_control" value to True on the gateway object, and then set the "mss_value" on each of the gateway's interfaces that have traffic going into or out of a VPN tunnel. We calculated the MSS value we thought would be appropriate and put it on the internal interfaces in GuiDBEdit. It seems to have worked: we haven't had a lot of issues with fragmentation.
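For readers who want to see the kind of arithmetic behind "calculating an appropriate MSS", here is a minimal Python sketch. It assumes IPv4, ESP in tunnel mode, and no IP or TCP options; the default IV, block, and ICV sizes correspond to AES-CBC with HMAC-SHA1-96 and are illustrative assumptions, not values taken from this thread. It is not Check Point's algorithm, and it is not expected to reproduce any specific value a gateway chooses.

```python
IPV4_HEADER = 20   # bytes, no IP options
TCP_HEADER = 20    # bytes, no TCP options
ESP_HEADER = 8     # SPI (4) + sequence number (4)
ESP_TRAILER = 2    # pad length (1) + next header (1)
NAT_T_UDP = 8      # UDP header added when NAT-Traversal is in use


def esp_packet_size(inner_len, iv_len=16, block_size=16, icv_len=12, nat_t=False):
    """Size of the outer IPv4 packet carrying an inner packet of inner_len bytes."""
    # The inner packet plus the ESP trailer is padded up to the cipher block size.
    padded = -(-(inner_len + ESP_TRAILER) // block_size) * block_size
    outer = IPV4_HEADER + ESP_HEADER + iv_len + padded + icv_len
    return outer + (NAT_T_UDP if nat_t else 0)


def clamped_mss(path_mtu=1500, **esp):
    """Largest TCP MSS whose inner packet still fits in path_mtu after ESP."""
    inner = path_mtu
    while inner > 0 and esp_packet_size(inner, **esp) > path_mtu:
        inner -= 1
    return inner - IPV4_HEADER - TCP_HEADER


plain_mss = 1500 - IPV4_HEADER - TCP_HEADER   # 1460, what hosts normally advertise
print(plain_mss, clamped_mss(), clamped_mss(nat_t=True))
```

The result depends heavily on the cipher, the integrity algorithm, and whether NAT-T is in use, which is one reason a hand-calculated mss_value is easy to get slightly wrong.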

Jump forward to last week. We've had trouble getting high throughput on a particular VPN that we use for SAN replication, so I've been experimenting with different settings in the VPN community and on the two gateways. One thing I did was turn off IP compression in the VPN community. That roughly doubled the VPN throughput and pretty much fixed the problem by itself. We've always had IP compression turned on for our internal VPNs and never really thought about how it might be slowing them down.

The other change I made was to reset the mss_value on the SAN interfaces of the source and destination gateways to 0 in GuiDBEdit. I did that because we had turned the MTU on the SANs themselves down to 1280, so I figured there shouldn't be any fragmentation in the VPN if the SANs were negotiating a lower MSS value on their own. I then ran "tcpdump -i [interface] -vvv | grep mss" from the CLI of the two gateways and found that the SANs were not starting with a lower MSS value themselves; they were still starting with 1460. Over the VPN tunnel, however, one or both of the gateways turned the MSS value down to 1387. We've never set the MSS value on any interface to 1387, so I'm not sure where that value came from, but it seems like an appropriate MSS value to account for all of the headers that get added to VPN traffic. It looks like one or both of the gateways were doing automatic TCP MSS adjustment on the traffic. The captures below show the change in the initial MSS values (mss 1460 versus mss 1387) for the new connection.

Source Gateway:

[sourceIP].64484 > [destinationIP].iscsi-target: Flags [S], cksum 0x754f (correct), seq 2506933430, win 65535, options [mss 1460,nop,wscale 2,nop,nop,TS val 0 ecr 0], length 0

[sourceIP].iscsi-target > [destinationIP].64484: Flags [S.], cksum 0x7fb9 (correct), seq 946781532, ack 2506933431, win 65535, options [mss 1387,nop,wscale 5,nop,nop,TS val 0 ecr 0], length 0

Destination Gateway:

[sourceIP].64484 > [destinationIP].iscsi-target: Flags [S], cksum 0x7598 (correct), seq 2506933430, win 65535, options [mss 1387,nop,wscale 2,nop,nop,TS val 0 ecr 0], length 0

[sourceIP].iscsi-target > [destinationIP].64484: Flags [S.], cksum 0x7fb9 (correct), seq 946781532, ack 2506933431, win 65535, options [mss 1387,nop,wscale 5,nop,nop,TS val 0 ecr 0], length 0
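To compare captures like these without reading them by eye, a small Python sketch can pull the TCP flags and the MSS option out of each tcpdump line. The two sample strings are the SYN lines quoted above; the helper name `syn_mss` is hypothetical, and the regular expressions only assume the `Flags [...]` and `mss N` text visible in this output.

```python
import re

MSS_RE = re.compile(r"\bmss (\d+)")
FLAGS_RE = re.compile(r"Flags \[([^\]]+)\]")


def syn_mss(line):
    """Return (flags, mss) for a tcpdump line, or None if it carries no MSS option."""
    mss = MSS_RE.search(line)
    flags = FLAGS_RE.search(line)
    if not mss or not flags:
        return None
    return flags.group(1), int(mss.group(1))


# SYN as seen on the source gateway (before the tunnel).
source_gw = ("[sourceIP].64484 > [destinationIP].iscsi-target: Flags [S], "
             "cksum 0x754f (correct), seq 2506933430, win 65535, "
             "options [mss 1460,nop,wscale 2,nop,nop,TS val 0 ecr 0], length 0")
# The same SYN as seen on the destination gateway (after the tunnel).
dest_gw = ("[sourceIP].64484 > [destinationIP].iscsi-target: Flags [S], "
           "cksum 0x7598 (correct), seq 2506933430, win 65535, "
           "options [mss 1387,nop,wscale 2,nop,nop,TS val 0 ecr 0], length 0")

before = syn_mss(source_gw)[1]
after = syn_mss(dest_gw)[1]
reduction = before - after
print(f"MSS {before} -> {after}, reduced by {reduction} bytes")
```

The sequence number is identical in both captures, so this is the same SYN with only the MSS option (and therefore the checksum) rewritten in transit.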

It has been a pipe dream of mine for a while to have the TCP MSS adjustment feature work automatically: the gateways would detect whether a new TCP connection is going into a VPN and set the MSS value themselves to account for the VPN headers, and if the traffic isn't going into a VPN, just leave the MSS value at whatever the source machine set. Based on the Check Point documentation we read on setting up TCP MSS adjustment, we thought that if you left the mss_value at 0 the gateway would simply not do MSS adjustment on that interface, so you had to set the mss_value to something other than 0. Now I'm questioning whether 0 actually means to set the MSS automatically. So, I have a few questions:

- Am I making this up, or is automatic TCP MSS adjustment an actual thing? If not, Check Point PLEASE make it so.

- Is this a new feature that was introduced in one of the R80 releases? On the two gateways where I saw this, one is running R80.40, the other is R80.30 3.10 kernel. I usually comb through the release notes and I've never seen anything about a new feature for automatic TCP MSS adjustment.

- If it's an actual feature, what are the conditions for how and when it works? Does it work on the encrypting side (source gateway), the decrypting side (destination gateway), or both? Does it work with IP Compression turned on in the VPN community, or does that need to be turned off?

- Does it work on the Gaia Embedded SMB (now Quantum Spark?) gateways? We have quite a few 1500 appliances, so if automatic TCP MSS adjustment is a reality, I really need it to work on them as well.

I'm looking forward to hearing everyone's feedback on this. Having automatic TCP MSS adjustment would make my life a whole lot easier than trying to figure out which interfaces need the mss_value set and going into GuiDBEdit every time we have a new interface. Plus, the interfaces always change when you replace a gateway and reset SIC, so then I have to go back into GuiDBEdit and set the mss_value on all of the interfaces again.

Thanks everyone!

Wilson

9 Replies


This is news to me if it works.

Possibly what is driving this is overall SecureXL changes that occurred in R80.20.

I don’t believe those changes have propagated to SMB appliances, or if they have, it’s only in the newer appliances.


I found:

sk114675: Cannot access 700 /1400 SMB appliance via WebUI or SSH with "MSS clamping" enabled

sk101219: New VPN features in R77.20 and higher (including R80.x versions):

(2) MSS adjustments for Clear and IPsec traffic

The above applies to Centrally managed SMB devices such as 1100/1400 as well, however, the firmware from sk114675 (R77.20.51) needs to be installed to avoid the mentioned issue there involving clamping and WebUI/SSH access.

sk111412: 'MSS clamping' value not enforced on interface for VPN traffic

So this is possible on centrally managed SMBs 8)

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist


First off, having IP compression enabled in the VPN community ensures that traffic cannot be accelerated by SecureXL at all, and I believe forces all that traffic into the F2F/slowpath. That is why you got such a big performance jump when you disabled compression. So while previously you were dealing with Firewall Worker/F2F handling of your MTU issue, you are almost certainly now dealing with how SecureXL/sim handles it. This got a lot more complicated starting in R80.20 when SecureXL was overhauled and there were many early problems with this. So just be aware of this moving forward and that you may need to tweak sim/SecureXL kernel variables.

Also be aware this shift may now incur higher loads on your SND/IRQ cores (especially because you are utilizing VPNs at LAN speeds) so you may want to check SND/IRQ core utilization, and adjust your CoreXL split if necessary to reduce the number of firewall workers/instances.

Also I hope you are using some form of AES and not 3DES which is 2-3 times slower. If your appliance model supports AES-NI hardware acceleration, switching to AES-GCM will provide even better performance.
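As a rough way to check the AES-NI point, the Python sketch below looks for the `aes` CPU flag in Linux-style `/proc/cpuinfo` text. This assumes the gateway exposes a standard `/proc/cpuinfo` with a `flags` line; the sample text is made up for illustration. Seeing the flag only shows that the CPU advertises the instruction set, not that the VPN code on a given appliance uses it (sk105119 is the reference mentioned in this thread for that).

```python
def cpu_has_aes(cpuinfo_text):
    """Return True if the first 'flags' line in cpuinfo_text lists the 'aes' flag."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return "aes" in value.split()
    return False


# Illustrative sample only; on a live Linux system one would pass
# open("/proc/cpuinfo").read() instead.
sample = "processor\t: 0\nflags\t\t: fpu vme sse2 aes avx\n"
print(cpu_has_aes(sample))
```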

Are you using IKEv1 or IKEv2?

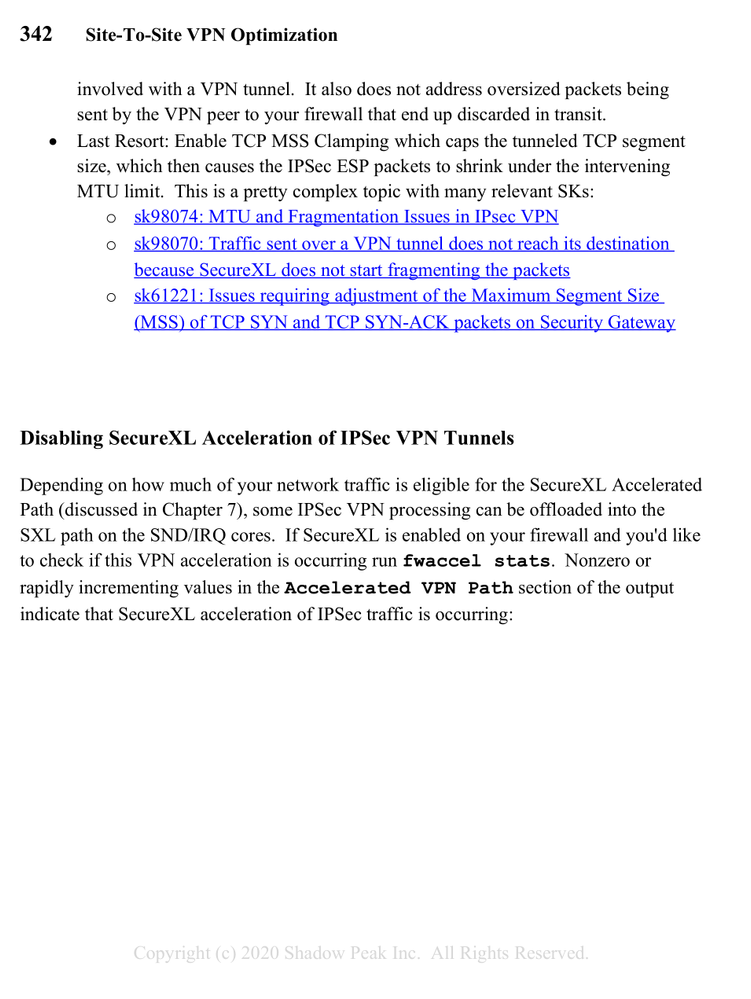

The answers to your questions 1-3 are in my book, which is quoted below. What you are looking for is called Path MTU Discovery; it should work on the Embedded Gaia SMB models, but I'm not 100% sure, so I'm tagging the resident SMB expert @G_W_Albrecht to see if he knows. Let me know if you have any further questions after reading the book excerpt below. The only correction is that IKEv2 does not have a built-in Path MTU Discovery mechanism. At press time I thought PMTUD was part of its built-in DPD-like mechanism, but it is not.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


I thought the downside of using AES-GCM is that while it's faster than AES-CBC, it's not supported by SecureXL.


As you can read above, this remark concerns AES-NI hardware acceleration, not SecureXL. See sk105119 - Best Practices - VPN Performance for details! Without AES-NI, SecureXL does encrypt/decrypt the VPN Traffic (therefore the downside). You need certain hardware to be able to use this, and it will work with OpenServer, too.

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist


GCM support was added to SecureXL in R80.30, and also in later versions of the Jumbo HFAs for R80.10 and R80.20. See sk152832: VPN traffic being dropped for "decryption failed" when SecureXL enabled.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


Good question. I'd be happy for Check Point to provide something more consolidated on this topic as well.

From what I've seen in the different SKs on the matter, if you only care about VPN you should have:

In fwkern.conf:

- fw_clamp_vpn_mss=1

In simkern.conf:

- sim_clamp_vpn_mss=1 (also a question: how do you check whether this parameter is on?)

On the interfaces:

- mss_value on all involved interfaces

This one is not required for VPN:

fw_clamp_tcp_mss_control=0

But the mss_value setting still seems to be required according to the documentation.

If you run fw monitor -F, do you see a different MSS on the SYN in the inbound and outbound chains?
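The checklist above can be sketched as a small Python check over the contents of the two files. It assumes both files use one `name=value` pair per line, as the entries quoted in this post suggest; the function names are hypothetical, and the file contents are passed in as text so that no file paths have to be guessed.

```python
def parse_kernel_conf(text):
    """Parse 'name=value' lines into a dict, ignoring lines without '='."""
    params = {}
    for raw in text.splitlines():
        if "=" not in raw:
            continue
        name, value = raw.split("=", 1)
        params[name.strip()] = value.strip()
    return params


def vpn_clamp_enabled(fwkern_text, simkern_text):
    """True if both VPN MSS clamping parameters named in this thread are set to 1."""
    fw = parse_kernel_conf(fwkern_text)
    sim = parse_kernel_conf(simkern_text)
    return fw.get("fw_clamp_vpn_mss") == "1" and sim.get("sim_clamp_vpn_mss") == "1"


# Example with the values from this post (spacing around '=' is tolerated).
print(vpn_clamp_enabled("fw_clamp_vpn_mss = 1\n", "sim_clamp_vpn_mss=1\n"))
```

This only inspects what is written in the files; the values actually loaded in the running kernel still need to be confirmed on the gateway itself.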

Juancho


- sim_clamp_vpn_mss=1

- Also question, how to check if this parameter is on?

In R80.20+, just append -a to a regular fw ctl get int command and it will try to show you the variable's value in both fwk and sim, assuming it exists. You may get an error message if the variable doesn't exist in both, which is expected in most cases, like this:

[Expert@gw-c9e604:0]# fw ctl get int sim_clamp_vpn_mss -a

FW:

Get operation failed: failed to get parameter sim_clamp_vpn_mss

PPAK 0: sim_clamp_vpn_mss = 1
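If this check needs to be scripted across several gateways, output like the above can be parsed with a short Python sketch. The only format it relies on is what is shown here: a bare label line such as `FW:`, an error line with no `=`, and a `LABEL: name = value` line. Treating a bare label as the scope for any following unlabeled `name = value` line is an assumption, since that case does not appear in this output.

```python
import re

VALUE_RE = re.compile(
    r"^(?:(?P<scope>[^:=]+):\s*)?(?P<name>\w+)\s*=\s*(?P<value>-?\d+)$"
)


def parse_get_int(output):
    """Map each scope label (for example 'PPAK 0') to the integer it reports."""
    values = {}
    current = None  # last bare 'LABEL:' line seen (assumed to scope later lines)
    for raw in output.splitlines():
        line = raw.strip()
        if line.endswith(":") and "=" not in line:
            current = line[:-1]
            continue
        match = VALUE_RE.match(line)
        if match:
            values[match.group("scope") or current] = int(match.group("value"))
    return values


sample = """FW:
Get operation failed: failed to get parameter sim_clamp_vpn_mss
PPAK 0: sim_clamp_vpn_mss = 1
"""
print(parse_get_int(sample))
```

A scope that is missing from the result means no value was reported for it, which matches the expected error for a sim-only variable on the FW side.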

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


I've checked a cluster on Azure and I've observed the same behaviour: MSS clamped to 1375.

TAC confirmed that the gateway will automatically reduce the MSS to compensate for the VPN overhead if fw_clamp_vpn_mss=1 and sim_clamp_vpn_mss=1 are on.

If you need to reduce the MSS further, you would need the mss_value setting.
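One way to picture how these settings might combine is the Python sketch below. It is a mental model built only from the statements in this thread, not documented gateway behaviour: it assumes the gateway never raises the MSS a host advertised, that the automatic VPN limit applies only to traffic entering a tunnel, and that an mss_value of 0 means "no manual clamp". The function and parameter names are hypothetical.

```python
def effective_mss(advertised, vpn_limit=None, mss_value=0):
    """Smallest of the advertised MSS, the automatic VPN limit, and a manual clamp.

    vpn_limit is None for traffic that does not enter a VPN tunnel (assumption).
    mss_value <= 0 is treated as 'no manual clamp configured' (assumption).
    """
    candidates = [advertised]
    if vpn_limit is not None:
        candidates.append(vpn_limit)
    if mss_value > 0:
        candidates.append(mss_value)
    return min(candidates)


print(effective_mss(1460))                                  # clear traffic, untouched
print(effective_mss(1460, vpn_limit=1387))                  # automatic VPN clamp only
print(effective_mss(1460, vpn_limit=1387, mss_value=1300))  # manual clamp goes lower
```

Under this model, a manual mss_value only matters when it is lower than what the automatic adjustment already produces, which is consistent with the TAC answer quoted above.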

Juan
