R81.10 Open Server: 100 Mbit throttling. Am I the only one?

The other day I started noticing incredibly slow performance across a site-to-site tunnel to a customer of mine.

After digging around, I found that the performance issue is caused by my own hardware and not the customer's.

Right now we are running R81.10 on Open Server.

Has anybody else experienced throttled performance? Any kind of speed test or inter-VLAN test gets stuck at roughly 100 Mbit/s, even though the underlying hardware can do much, much more.

If I do a "local" performance test on a Windows Server 2019 machine, my file copy/move speed is roughly 700-1000 Mbit/s.

But as soon as I do any kind of "WAN"-related or inter-VLAN traffic, the speed immediately drops to 100 Mbit/s.
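
For anyone comparing these numbers against what a file-copy dialog reports in MB/s, here is a quick sketch of the arithmetic. The 10 GB file size is just an assumed example, and protocol overhead is ignored, so treat the results as rough upper bounds.

```python
def mbit_to_mbyte_per_s(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second (decimal units)."""
    return mbit_per_s / 8.0


def transfer_seconds(size_gb: float, mbit_per_s: float) -> float:
    """Seconds needed to move size_gb gigabytes at the given line rate.

    Uses decimal units (1 GB = 1000 MB) and ignores protocol overhead.
    """
    size_megabits = size_gb * 1000 * 8
    return size_megabits / mbit_per_s


if __name__ == "__main__":
    # 100 Mbit/s is the observed ceiling; 700 and 1000 are the "local" figures.
    for rate in (100, 700, 1000):
        print(
            f"{rate} Mbit/s = {mbit_to_mbyte_per_s(rate):.1f} MB/s, "
            f"10 GB takes about {transfer_seconds(10, rate):.0f} s"
        )
```

So a copy that sits at about 12.5 MB/s in the dialog corresponds to the 100 Mbit/s ceiling described above.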

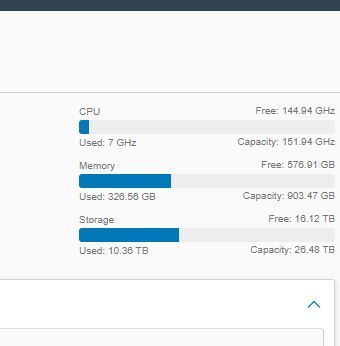

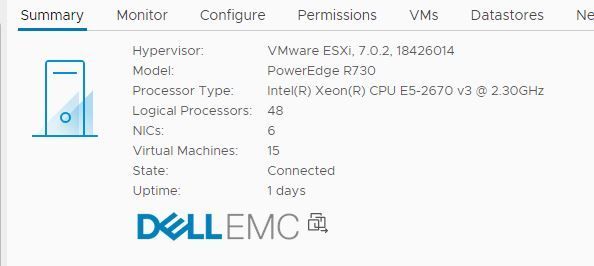

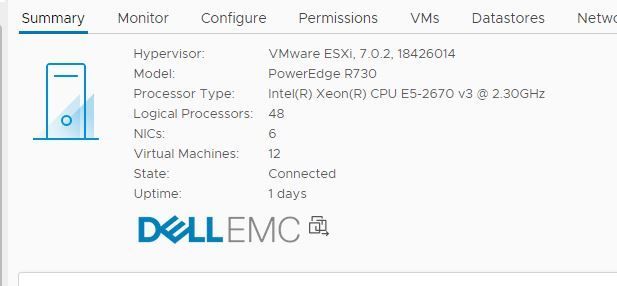

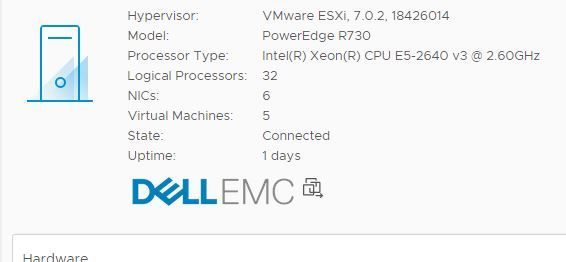

The virtual machines and the Open Server run on iSCSI, with a 25 Gbit uplink from the SAN.

The Dell PowerEdge servers are connected at 10 Gbit.

The SAN runs RAID 10 with 12 x 2 TB SSDs and an SSD cache of 2 x 1 TB NVMe.

As long as I test "local" speed (not crossing the firewall interfaces), everything I do is insanely fast, but every time traffic hits the firewall, performance drops immediately.

Am I the only one seeing this?

I run R81.10 and have not seen this. Was it an upgrade or a new install?

Andy

It got upgraded from R81.

First of all, this is not Open Server if you're running on VMware, but CloudGuard Network Security.

What hardware type did you set the NICs for?

If E1000, try VMXNET3.

I misunderstood the term then 🙂

It's already VMXNET3… :(

It seems there is a bottleneck somewhere.

First of all, check the NIC speed settings. If the interfaces are configured for high speed, move on to the other potential bottlenecks. Start with top or cpview to see whether any of the CPUs is spiking. The full scope and flow are described in sk167553.

Since you are on ESX, also look into sk104848. The title says "management", but the basic VMware performance tips are the same.

I have seen similar cases on multiple versions; usually the NICs were configured for low speed and/or half-duplex.
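
As a rough illustration of the speed/duplex check, here is a minimal sketch that scans `ethtool`-style text for the `Speed:` and `Duplex:` lines and flags anything suspicious. The sample text, the interface name `eth0`, and the 1000 Mbit/s threshold are assumptions made up for the example, not output from the poster's gateway.

```python
import re


def parse_ethtool(text: str) -> dict:
    """Extract link speed (Mbit/s) and duplex from ethtool-style text."""
    speed = re.search(r"Speed:\s*(\d+)\s*Mb/s", text)
    duplex = re.search(r"Duplex:\s*(\w+)", text)
    return {
        "speed_mbit": int(speed.group(1)) if speed else None,
        "duplex": duplex.group(1).lower() if duplex else None,
    }


def link_warnings(info: dict, expected_mbit: int = 1000) -> list:
    """Return human-readable warnings for a slow or non-full-duplex link."""
    warnings = []
    if info["speed_mbit"] is None:
        warnings.append("speed unknown")
    elif info["speed_mbit"] < expected_mbit:
        warnings.append(
            f"link at {info['speed_mbit']} Mbit/s, expected at least {expected_mbit}"
        )
    if info["duplex"] != "full":
        warnings.append(f"duplex is {info['duplex']}")
    return warnings


# Illustrative sample only (hypothetical interface and values).
SAMPLE = """\
Settings for eth0:
    Speed: 100Mb/s
    Duplex: Half
    Link detected: yes
"""

if __name__ == "__main__":
    info = parse_ethtool(SAMPLE)
    print(info)
    for warning in link_warnings(info):
        print("WARNING:", warning)
```

In practice you would feed it the real text for each firewall interface instead of `SAMPLE`; an empty warning list means speed and duplex are probably not the culprit.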

The bottleneck right at 100 Mbps is interesting, but it is not necessarily a network problem. Please provide the output of the "Super Seven" commands, making sure the firewall stays bottlenecked against the 100 Mbps barrier for the entire time the commands are running. This will help indicate where the bottleneck is. Also grab a screenshot of top running during the bottleneck; I'm curious to see whether you are getting a nonzero st (steal) CPU percentage.
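
If a screenshot of top is awkward to capture, the same steal figure can be derived on a Linux guest from two samples of the aggregate `cpu` line in `/proc/stat`. This is only a sketch: the two sample lines below are invented numbers, and the field order (user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice) is the standard Linux layout.

```python
def cpu_times(stat_line: str) -> list:
    """Parse the aggregate 'cpu' line of /proc/stat into tick counters."""
    fields = stat_line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(value) for value in fields[1:]]


def steal_percent(before: str, after: str) -> float:
    """Percentage of CPU time stolen by the hypervisor between two samples."""
    deltas = [b - a for a, b in zip(cpu_times(before), cpu_times(after))]
    # Sum only the first eight counters (user .. steal); guest and guest_nice
    # are already accounted for inside user and nice.
    total = sum(deltas[:8])
    return 100.0 * deltas[7] / total if total else 0.0


# Invented sample lines, as if read a few seconds apart.
BEFORE = "cpu  1000 0 500 8000 100 0 50 20 0 0"
AFTER = "cpu  1400 0 700 8300 100 0 100 70 0 0"

if __name__ == "__main__":
    print(f"steal: {steal_percent(BEFORE, AFTER):.1f}%")
```

For a live reading, take the first line of `/proc/stat` twice with a short sleep in between while the throughput test is running. A value that stays above zero suggests the VM is waiting on the hypervisor for CPU time.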

Reviewing some of the settings discussed in sk169252 may also be helpful for you.

What JHF take is currently installed?

Since you say "anything WAN related", someone has to ask the stupid but obvious question: what is the limit of the ISP connection, and has this bit been tested separately?

If it's just one VPN, the ISP on the other end is also relevant.