HA Cluster with 150+ VLAN Interfaces

Hi all

We are running the following configuration on our core datacenter firewalls:

- 2 clusters of 23800 appliances, R77.30 JHF 216

- 150+ VLANs on bonds of 10 Gbit interfaces

We're experiencing issues with the failover/failback mechanism: the "routed" process appears to hang while writing a lot of information to the database (dbget/dbset commands). While routed is hanging, clish becomes unresponsive and the cluster ends up in a split-brain situation (some VLAN backup addresses are active on both cluster members). We have had a Check Point Service Request open about this for a while, but unfortunately we are not getting close to a solution.

Has anyone experienced anything similar? We were even able to reproduce the issue on our 4600 lab firewalls by configuring an additional 140 VLAN interfaces on the standard internal ports.

Is anyone also running a configuration with that many interfaces using ClusterXL? Before we consider moving from VRRP to ClusterXL (which seems to be the Check Point mainstream), we want to make sure that we don't go from bad to worse.

Let me know some of your experiences...

Regards,

Roger

1 Solution

Accepted Solutions

Hi all

After a really long time with Check Point support, we received the following statements:

After verification and confirmation by our R&D here are the following limitations in terms of number of supported interfaces on VRRP and ClusterXL:

- In sk39676 we have the statement that only 127 VRRP interfaces are supported - and not more than that.

Our R&D team has confirmed that this is indeed the correct limitation for VRRP.

- As per sk31631 we support 1024 ClusterXL interfaces

Thus, for the scenario/environment the customer has - VRRP indeed appears to be not suitable - and indeed ClusterXL appears to be the suitable solution from that perspective.

So we now have it "officially" confirmed that there's a limit on using many VLAN interfaces in a VRRP setup.

On a side note:

- We know that the limit of 127 interfaces mentioned in the SK above comes from the design: the maximum priority is 255 per VRID, while the minimum priority delta is 2. That results in a maximum of 127 supported interfaces, because the priority isn't allowed to become a negative number. But Check Point fixed that at some point and made sure that the priority never goes lower than 1.

- That's also why Check Point R&D stated in the same call that they've been testing VRRP with 400 interfaces in the lab without issues, but they only tested the failover/failback mechanism by disabling the virtual router instead of just disabling a single VLAN interface.

- We've been fiddling with that case for 5 and a half months (!!), including several outages in that time.
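The arithmetic behind the 127 figure in the side note can be sketched roughly as follows. This is only an illustration: the function names are made up, and the model (each failed monitored interface subtracts its delta from the base priority) is an assumption based on the description above, not on Check Point's implementation.

```python
MAX_PRIORITY = 255  # maximum VRRP priority per VRID (figure from the post)
MIN_DELTA = 2       # minimum priority delta per interface (figure from the post)


def max_interfaces(max_priority: int = MAX_PRIORITY, min_delta: int = MIN_DELTA) -> int:
    """Number of deltas that fit into the priority before it would go negative."""
    return max_priority // min_delta


def effective_priority(base: int, failed: int, delta: int = MIN_DELTA, floor: int = 1) -> int:
    """Priority after `failed` interfaces go down, clamped at `floor`.

    The clamp models the later fix mentioned in the post (priority never
    drops below 1); the exact behaviour in routed is an assumption.
    """
    return max(floor, base - failed * delta)


print(max_interfaces())               # 127
print(effective_priority(255, 127))   # 1
print(effective_priority(255, 150))   # 1 (clamped instead of going negative)
```

With the clamp in place, more than 127 interfaces no longer drive the priority negative, which would be consistent with R&D's lab test of 400 interfaces.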

Therefore, since Check Point doesn't plan to fix the issues in the routed process ("works as designed")... the one and only solution is moving to ClusterXL.

Regards

Roger

17 Replies

Each network packet on the 10 GBit interface triggers a hardware interrupt. The dispatcher is called (which triggers a software interrupt) and distributes the packet to the FW worker. The FW worker processes the packet. The routed process is also strongly affected here. I would use several 10 GBit interfaces and several bond interfaces. Update to the newest R77.30 hotfix.

To do:

- more 10 GBit bond interfaces

- distribute the VLANs across several bond interfaces; this improves performance

- enable SecureXL

- enable NAT templates

- enable drop templates

- optimize the rules for SecureXL

- enable CoreXL

- optimize dispatcher and FW workers

- enable Multi-Queue if possible

- enable the dynamic dispatcher

- update to the newest R77.30 hotfix

Regards

Heiko

Hello Heiko

Thanks for your answer. We're aware of most of the points. Upgrade to the latest hotfix is a valid point, which we have postponed because of the running Check Point Service Request. Unless they advise us to do the upgrade, we'll stick to the "problem" configuration. Although, on our Lab firewalls where we could reproduce the issue, we're running the JHF Take 292.

We have multiple interfaces in the bonds, and CoreXL and SecureXL are running. Multi-Queue is enabled on the most loaded interfaces; unfortunately it can only be enabled on up to 5 physical interfaces. Not sure what you mean by "dynamic dispatcher enable".

The most important parts of my post were the questions:

1.) Has anyone experienced something similar using VRRP?

2.) Is anyone also running a configuration with that many interfaces using ClusterXL?

Regards

Roger

According to Check Point, there is a limit of 1024 VLANs per box. But it depends on the environment, so this is just a recommendation.

Is routed restarting?

Do you have coredumps created?

Kind regards,

Jozko Mrkvicka

Yes, according to the datasheet, 1024 VLANs are supported.

Is routed restarting? --> No, it's freezing/hanging. It stays in this state for at least several hours...

Do you have coredumps created? --> No, and we even enabled more debugging (part of the Check Point case).

Again, we'd rather like to know whether we're the only ones experiencing such an issue, and whether this would possibly be better in a ClusterXL configuration.

I would suggest trying to switch from the VRRP configuration to ClusterXL on your lab firewalls. I would be interested in the results myself too.

I think I might have seen something similar in our environment, although I don't know all the details. There are several clusters with IPSO R75.40 and IPSO clustering enabled, and there is a main bond interface with many VLANs. If anyone adds an interface to the cluster, it "dies" for several seconds to minutes and then comes back up again. I thought it was just "special" behavior of IPSO clustering and old software. I can check how many VLANs are on this interface tomorrow.

And here are some details on CoreXL Dynamic Dispatcher. Although I would expect that you see some clear issues with load sharing between cores if there are some problems with CoreXL.

We're actually about to do that on a second pair of lab firewalls. I'll let you know about our experience.

Hi Roger,

We actually use only ClusterXL with our customers. Yes, also with many VLANs, about 200-400 on 23K appliances.

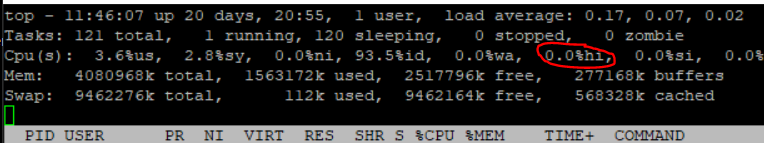

With 10 GBit+ interfaces, the hardware interrupts usually cause problems.

You can see this in top under "hi".

`# top`

I think the main problem is performance tuning on such systems. As I said, use as many interfaces as possible and apply the tuning steps from my previous entry.

This reduces the number of hardware interrupts per interface, which puts less strain on the dispatcher. Use more dispatchers if necessary, or use Multi-Queue.

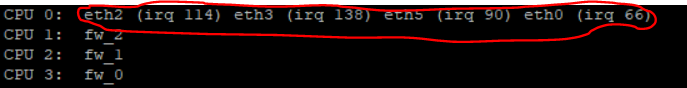

You can see this with the following command, in the rows for the Ethernet interface:

`# more /proc/interrupts`

-> If you have more dispatchers, they are distributed among the CPUs.

You can see this with:

`# fw ctl affinity -l -r -v -a`

-> If you have more dispatchers, they are distributed among the CPUs. The interrupts are assigned to the CPU.

If necessary, enable the dynamic dispatcher.

Optimize SecureXL to use the SXL path or medium path.

PS:

Install R77.30 JHF 302.

Regards

Heiko

Hi Heiko

Thank you for the answer, which actually addressed some of the questions. We're still working on the lab firewalls using ClusterXL, so we cannot share any news yet. We have it up and running, but without many interfaces so far.

In production, the dynamic dispatcher is not enabled, but as far as I understand it, this feature is used to better balance the load across the fw_worker cores, not on the network side, where we think the problem is. On our 24-core appliance, since we don't do any fancy stuff with NGTP++ (just plain firewalling), we also changed the core distribution to 12 cores for firewalling and 12 cores for the rest, including networking. We also enabled Multi-Queue for the most loaded interfaces, but unfortunately Multi-Queue only supports up to 5 physical interfaces.

Looking at the "top" command, the hardware interrupts aren't really the issue; the value is between 0.5 and 1%. But the software interrupts are around 25%.
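For readers who want the same "hi" and "si" figures outside of top, they can be approximated from two samples of the aggregate `cpu` line in `/proc/stat`. The sketch below assumes the usual Linux field order (user, nice, system, idle, iowait, irq, softirq, steal, ...); the function name and the sample lines are made up for illustration and are not output from the systems in this thread.

```python
from typing import Tuple


def irq_shares(before: str, after: str) -> Tuple[float, float]:
    """Return (hardirq %, softirq %) between two aggregate 'cpu' lines of /proc/stat."""
    a = [int(x) for x in before.split()[1:]]
    b = [int(x) for x in after.split()[1:]]
    delta = [y - x for x, y in zip(a, b)]
    # Sum only user..steal (first eight fields); guest time is already
    # counted inside user/nice.
    total = sum(delta[:8])
    if total == 0:
        return 0.0, 0.0
    return 100.0 * delta[5] / total, 100.0 * delta[6] / total


# Hypothetical samples taken some seconds apart.
sample_before = "cpu  1000 0 500 8000 100 10 390 0 0 0"
sample_after = "cpu  1140 0 600 8500 100 20 640 0 0 0"

hi, si = irq_shares(sample_before, sample_after)
print(f"hi={hi:.1f}% si={si:.1f}%")  # hi=1.0% si=25.0%
```

On a live system the two lines would come from reading the first line of `/proc/stat` twice with a short sleep in between.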

When checking /proc/interrupts, we can see that on the interfaces where we enabled Multi-Queue, the interrupts are handled by more than just 1 core, so that seems to work as expected.
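The check described above (which cores actually service an interface's interrupts) can be scripted instead of read by eye. The following is a minimal sketch that parses text in the `/proc/interrupts` layout; the function name, the interface names and the counter values in the sample are hypothetical, and shared IRQ lines with comma-separated device names are not handled.

```python
from typing import List


def cpus_handling(interrupts_text: str, ifname: str) -> List[int]:
    """Return the CPU indices with a non-zero interrupt count for `ifname`."""
    lines = interrupts_text.strip().splitlines()
    ncpu = len(lines[0].split())  # header row: CPU0 CPU1 ...
    busy = set()
    for line in lines[1:]:
        fields = line.split()
        if len(fields) <= ncpu + 1:
            continue  # summary rows without a device label
        label = fields[-1]
        # Match "eth2" itself or per-queue labels such as "eth2-TxRx-0".
        if label != ifname and not label.startswith(ifname + "-"):
            continue
        for cpu, count in enumerate(fields[1:1 + ncpu]):
            if int(count) > 0:
                busy.add(cpu)
    return sorted(busy)


# Hypothetical excerpt: eth1 without multi-queue, eth2 with three queues.
SAMPLE = """
           CPU0       CPU1       CPU2       CPU3
 67:     900000          0          0          0   PCI-MSI-X  eth1
 75:     210000          0          0          0   PCI-MSI-X  eth2-TxRx-0
 76:          0     205000          0          0   PCI-MSI-X  eth2-TxRx-1
 77:          0          0     198000          0   PCI-MSI-X  eth2-TxRx-2
"""

print(cpus_handling(SAMPLE, "eth1"))  # [0]
print(cpus_handling(SAMPLE, "eth2"))  # [0, 1, 2]
```

On a real gateway the text would come from reading `/proc/interrupts`; an interface whose result contains a single CPU is handled by one core only.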

About JHF 302: do you have any information that it fixes something related to this? We didn't get any signal from Check Point in the open case that we should upgrade.

Regards

Roger

Did you consult sk115641 yet?

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

Why should I? We're not using ClusterXL yet...

You wrote that routed is hanging. This looks very similar to the issue in sk115641, where routed is fixed by a hotfix. But this will need TAC, I suppose.

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

Do keep in mind that some switches do not support more than 128 VLANs on the box. Most of the time these are access switches, not core switches, but still.

Regards, Maarten

Thanks, afaik our Cisco switches support up to 4096 VLANs and we're not that close to the limit 🙂

I don't recall exactly anymore. We had this issue with a VSX system that was connected to some older 2960 switches, and there was an issue with spanning tree, which had a 128-VLAN limit on those boxes.

Regards, Maarten

Hi Roger,

High utilization by software interrupts points to a problem between the dispatcher and the firewall workers. This means the firewall workers are overloaded.

Solution:

- increase the number of firewall workers

- relieve the firewall workers

- optimize SecureXL so that the medium path is used more and the F2F path is used less

- switch off or optimize blades with high CPU load (IPS, HTTPS Inspection, ...)

Regards

Heiko

Hi Heiko

Well, afaik a 25% load from software interrupts is far from critical. And your other suggestions are about tuning the load of the firewall workers. Let me get this straight: we don't have an issue with an overloaded firewall, so we don't need to tweak anything there. It's all about routed in VRRP, which seems to have issues when using a lot of interfaces.

Regards

Roger
