
AKS network with CloudGuard VMSS

I want to present an architecture that addresses some needs I ran into when using AKS in Azure: applying egress policy per Pod, and applying threat prevention to ingress traffic coming from other Azure vNETs or from the Internet.

First, some background: AKS is Microsoft's managed Kubernetes (K8s) control-plane offering in Azure. It uses VMs as worker nodes, which makes it easy to deploy K8s clusters, and the worker nodes are deployed in their own vNETs.

AKS comes with two networking options: the traditional kubenet and Azure CNI.

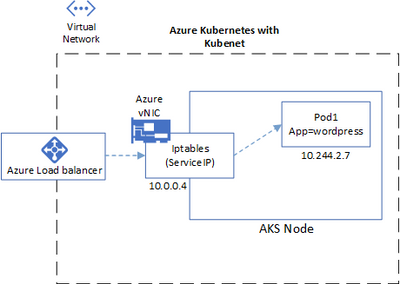

Kubenet: each node gets an IP address from the Azure vNET, but each Pod gets an IP that exists only inside the cluster. For ingress, Services take care of the NAT when exposing Pods through an Azure Load Balancer; for egress, the worker node hides Pod traffic behind its own IP (NAT Hide). This is the default CNI for AKS and is used in many implementations. The traffic flow with this option looks like this:

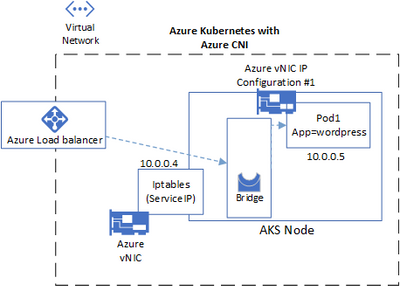

Azure CNI: this option integrates with the underlying Azure vNET, and every Pod gets an IP address directly from the vNET where the worker nodes reside (there is a preview feature that allows selecting a different subnet for Pods, but it did not work for the purposes of this post). This means each Pod has an IP address that is reachable from outside the cluster, and the flow looks like this:
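The networking option is chosen when the cluster is created. Below is a minimal sketch with the Azure CLI, assuming an existing vNET subnet for the nodes and Pods. The resource group, cluster name and subnet ID are placeholders, and the `run` helper with `DRY_RUN` is a hypothetical convenience so the command can be reviewed before it touches Azure:

```shell
#!/usr/bin/env bash
# Sketch: create an AKS cluster that uses Azure CNI, so Pods get IPs
# directly from an existing vNET subnet.
# All names passed in (resource group, cluster, subnet ID) are hypothetical.
set -euo pipefail

# run: print the command, and execute it only when DRY_RUN is not "1".
run() {
  echo "+ $*"
  if [[ "${DRY_RUN:-0}" != "1" ]]; then
    "$@"
  fi
}

# create_cni_cluster RESOURCE_GROUP CLUSTER_NAME SUBNET_ID
create_cni_cluster() {
  local rg="$1" name="$2" subnet_id="$3"
  run az aks create \
    --resource-group "$rg" \
    --name "$name" \
    --network-plugin azure \
    --vnet-subnet-id "$subnet_id" \
    --generate-ssh-keys
}

# Example (prints the command without calling Azure):
# DRY_RUN=1 create_cni_cluster rg-aks aks-demo "<subnet-resource-id>"
```

Leaving out `--network-plugin azure` gives you the kubenet behavior described above.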

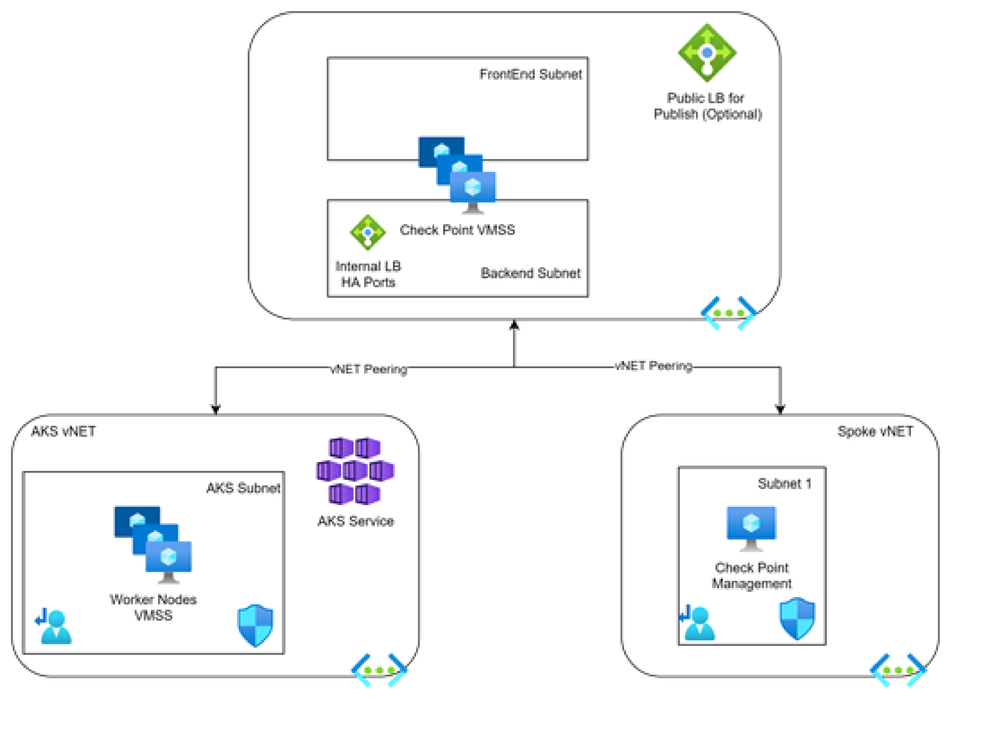

To protect this, I'll deploy a Check Point CloudGuard VMSS and use a hub-and-spoke topology to centralize networking and create a single inspection point outside the AKS vNET. The spoke vNETs send their traffic to the Check Point gateways through route tables, and communication between vNETs is allowed by rules on the gateways.

However, when I looked at the gateway logs, I saw that AKS sent the packets hidden behind the worker node's IP (NAT Hide), even though each Pod has its own IP address from the AKS subnet.

Digging further, I found that the nodes use iptables, and the rules that apply the NAT (MASQUERADE) look like this:
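On the spoke side, this design comes down to a user-defined route that points at the gateways. Below is a minimal sketch with the Azure CLI, assuming the CloudGuard VMSS is reached through an internal load balancer frontend IP; the `10.0.1.4` in the example and every resource name are hypothetical, and the `run`/`DRY_RUN` helper only exists so the commands can be reviewed first:

```shell
#!/usr/bin/env bash
# Sketch: steer a spoke subnet's traffic to the CloudGuard VMSS via a UDR.
# Assumption: the gateways sit behind an internal load balancer whose
# frontend IP is passed as NEXT_HOP_IP. All names are hypothetical.
set -euo pipefail

# run: print the command, and execute it only when DRY_RUN is not "1".
run() {
  echo "+ $*"
  if [[ "${DRY_RUN:-0}" != "1" ]]; then
    "$@"
  fi
}

# route_spoke_via_gateway RG SPOKE_VNET SPOKE_SUBNET ROUTE_TABLE NEXT_HOP_IP
route_spoke_via_gateway() {
  local rg="$1" vnet="$2" subnet="$3" rt="$4" nh="$5"
  # 1. Create the route table for the spoke subnet.
  run az network route-table create --resource-group "$rg" --name "$rt"
  # 2. Default route: send everything to the gateway ILB frontend.
  run az network route-table route create \
    --resource-group "$rg" --route-table-name "$rt" \
    --name default-to-cloudguard \
    --address-prefix 0.0.0.0/0 \
    --next-hop-type VirtualAppliance \
    --next-hop-ip-address "$nh"
  # 3. Associate the route table with the spoke subnet.
  run az network vnet subnet update \
    --resource-group "$rg" --vnet-name "$vnet" --name "$subnet" \
    --route-table "$rt"
}

# Example (prints the three commands without calling Azure):
# DRY_RUN=1 route_spoke_via_gateway rg-spoke vnet-aks snet-aks rt-aks 10.0.1.4
```

If the routed subnet is the AKS subnet itself, it is worth checking how the cluster's outbound type (for example `userDefinedRouting`) interacts with a 0.0.0.0/0 route before applying it.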

```
sudo iptables -t nat -nvL POSTROUTING
Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination
14204 860K KUBE-POSTROUTING all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes postrouting rules */
0 0 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0
14190 860K IP-MASQ-AGENT all -- * * 0.0.0.0/0 0.0.0.0/0 /* ip-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom IP-MASQ-AGENT chain */ ADDRTYPE match dst-type !LOCAL
```

The KUBE-POSTROUTING chain contains just a RETURN, and the MASQUERADE rule has no effect because it only matches traffic sourced from the Docker bridge range (172.17.0.0/16). All remaining traffic to non-local destinations reaches the IP-MASQ-AGENT chain, which looks like this:

```
sudo iptables -t nat -nvL IP-MASQ-AGENT
Chain IP-MASQ-AGENT (1 references)
pkts bytes target prot opt in out source destination
0 0 RETURN all -- * * 0.0.0.0/0 10.100.0.0/16 /* ip-masq-agent: local traffic is not subject to MASQUERADE */
14 911 MASQUERADE all -- * * 0.0.0.0/0 0.0.0.0/0 /* ip-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain) */
```

The chain RETURNs (exempts from NAT) traffic destined to the vNET block 10.100.0.0/16 and MASQUERADEs everything else, which is exactly the NAT Hide I was seeing in the logs.

Next, let's find what in Kubernetes manages these rules, since all of this should be orchestrated:
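To make the chain's logic concrete, here is a small Bash sketch that reproduces its decision for an IPv4 destination: RETURN if the destination falls inside any non-masquerade CIDR, MASQUERADE otherwise. It only illustrates the rule order; it is not the agent's own code, and the function names are made up:

```shell
#!/usr/bin/env bash
# Sketch of the IP-MASQ-AGENT decision: RETURN (keep the Pod source IP)
# if the destination is inside one of the CIDRs, otherwise MASQUERADE
# (SNAT to the node IP). IPv4 only; function names are hypothetical.
set -euo pipefail

# ip_to_int A.B.C.D -> prints the address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP CIDR -> exit status 0 when IP is inside CIDR
in_cidr() {
  local ip="$1" net="${2%/*}" len="${2#*/}"
  # A /0 prefix has an all-zero mask, so it matches every address.
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

# masq_decision DEST_IP CIDR... -> prints RETURN or MASQUERADE
masq_decision() {
  local dst="$1"; shift
  local cidr
  for cidr in "$@"; do
    if in_cidr "$dst" "$cidr"; then
      echo RETURN      # first matching RETURN rule wins
      return 0
    fi
  done
  echo MASQUERADE    # the catch-all rule at the end of the chain
}
```

For example, `masq_decision 10.200.1.5 10.100.0.0/16` prints `MASQUERADE`: with the chain as shown, a Pod talking to an address outside 10.100.0.0/16 is hidden behind the node IP, while `masq_decision 10.200.1.5 10.100.0.0/16 0.0.0.0/0` prints `RETURN`.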

```
# Let's check for any ip-masq pods in kube-system
kubectl get pods -n kube-system -o wide | grep ip-masq
azure-ip-masq-agent-g2dsn 1/1 Running 0 4h52m 10.100.0.4 aks-nodepool1-44430483-vmss000000 <none> <none>
azure-ip-masq-agent-j27xx 1/1 Running 0

# Now let's see if there are ConfigMaps we can look at
kubectl get configmaps -n kube-system | grep ip-masq
azure-ip-masq-agent-config 1 4h55m
```

That ConfigMap configures the ip-masq-agent pods. Inspecting it reveals an interesting field, nonMasqueradeCIDRs:

```
kubectl get configmap azure-ip-masq-agent-config -n kube-system -o yaml
apiVersion: v1
data:
  ip-masq-agent: |-
    nonMasqueradeCIDRs:
      - 10.100.0.0/16
      - 10.0.0.0/8
    masqLinkLocal: true
    resyncInterval: 60s
kind: ConfigMap
```

So if we add 0.0.0.0/0, or any other vNET CIDR, to that list, the change should be reflected at the iptables chain level:
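As a non-interactive alternative to the `kubectl edit` step, the ConfigMap can be rendered from a list of CIDRs and piped to `kubectl apply -f -`. This is a minimal sketch: the data layout mirrors the ConfigMap output shown in this post, the function name is hypothetical, and whether AKS keeps a manual change to this ConfigMap (rather than reconciling it back) is an assumption to verify on your own cluster:

```shell
#!/usr/bin/env bash
# Sketch: render azure-ip-masq-agent-config from a list of CIDRs.
# Layout copied from the ConfigMap shown in the post; the function name
# is hypothetical, and AKS may reconcile manual changes (verify this).
set -euo pipefail

# render_masq_config CIDR... -> ConfigMap YAML on stdout
render_masq_config() {
  cat <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: azure-ip-masq-agent-config
  namespace: kube-system
data:
  ip-masq-agent: |-
    nonMasqueradeCIDRs:
EOF
  local cidr
  for cidr in "$@"; do
    # Each CIDR becomes one list item inside the embedded config.
    printf '      - %s\n' "$cidr"
  done
  cat <<'EOF'
    masqLinkLocal: true
    resyncInterval: 60s
EOF
}

# Example: review the output first, then apply it.
# render_masq_config 10.100.0.0/16 10.0.0.0/8 0.0.0.0/0
# render_masq_config 10.100.0.0/16 10.0.0.0/8 0.0.0.0/0 | kubectl apply -f -
```

Keep in mind that with `0.0.0.0/0` in the list the node no longer hides any Pod traffic, so Internet-bound Pod traffic also leaves with the Pod IP and relies on whatever sits in the path (here, the CloudGuard gateways) for NAT.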

```
# Edit the ConfigMap and add a row to nonMasqueradeCIDRs
# I know... kubectl edit is evil... but we're just playing around here
kubectl edit configmap azure-ip-masq-agent-config -n kube-system

# Check the config
kubectl get configmap azure-ip-masq-agent-config -n kube-system -o yaml
apiVersion: v1
data:
  ip-masq-agent: |-
    nonMasqueradeCIDRs:
      - 10.100.0.0/16
      - 10.100.0.0/16
      - 10.0.0.0/8
      - 0.0.0.0/0

# Now let's see if that impacted our iptables
sudo iptables -t nat -nvL IP-MASQ-AGENT
Chain IP-MASQ-AGENT (1 references)
pkts bytes target prot opt in out source destination
0 0 RETURN all -- * * 0.0.0.0/0 10.100.0.0/16 /* ip-masq-agent: local traffic is not subject to MASQUERADE */
0 0 RETURN all -- * * 0.0.0.0/0 0.0.0.0/0 /* ip-masq-agent: local traffic is not subject to MASQUERADE */
```
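To check the result without reading the table by eye, the `iptables -t nat -nvL` output can be parsed. A minimal sketch, assuming the column layout shown above (pkts, bytes, target, prot, opt, in, out, source, destination); the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: inspect IP-MASQ-AGENT output from `iptables -t nat -nvL`.
# Assumes the -nvL column layout: pkts bytes target prot opt in out
# source destination. Function names are hypothetical.
set -euo pipefail

# return_destinations: print the destination column of every RETURN rule
return_destinations() {
  awk '$3 == "RETURN" { print $9 }'
}

# snat_disabled_for_all: exit 0 if a RETURN rule matches 0.0.0.0/0,
# meaning no destination can reach the MASQUERADE rule after it
snat_disabled_for_all() {
  awk '$3 == "RETURN" && $9 == "0.0.0.0/0" { found = 1 } END { exit !found }'
}

# Example on a node:
# sudo iptables -t nat -nvL IP-MASQ-AGENT | return_destinations
# sudo iptables -t nat -nvL IP-MASQ-AGENT | snat_disabled_for_all && echo "no SNAT"
```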

After this change, the Check Point logs show the Pod IP instead of the node IP, so we can now create rules per Pod.

I'll attach a video showing the live logs and config.
