
cphwd_q_init_ke process running out of control after upgrade to R80.30

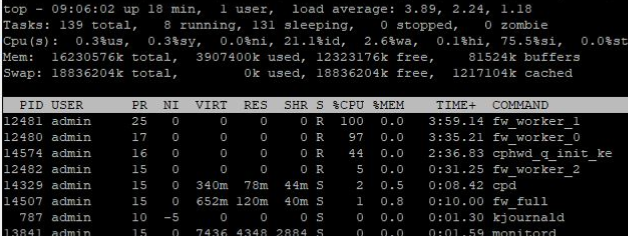

We upgraded from R80.10 to R80.30 (running the latest GA Jumbo Hotfix take) about 10 days ago on a year-old 5900 appliance. Since the upgrade, the CPU on that box has been getting absolutely hammered, whereas before the upgrade it ran at single-digit utilization. The culprit appears to be the cphwd_q_init_ke process, which is consistently the top talker on the device and uses up to 100% of the CPU.

Has anyone seen this before? Is this a new process in R80.30? Any tips on how to improve this? If this keeps up, I'll have to roll back to R80.10, which I would prefer not to do.

22 Replies

---

Did you install the latest JHFA? It fixes some bugs that cause 100% CPU usage.

Jumbo Hotfix Accumulator for R80.30 - from Take 76

---

Thanks for the heads-up. We're still on Take 50, so I'll try this one.

---

I upgraded to JHF Take 107 last night, but unfortunately I'm seeing the same performance. For example, right now the cphwd_q_init_ke process is at 80% CPU utilization, while the next top talker is an fw worker at 4%.

---

Were you able to find the cause of this issue? We tried to go from R80.10 to R80.30 JHF Take 111, but all 3 workers went to 100% CPU, and cphwd_q_init_ke showed up among the top processes too.

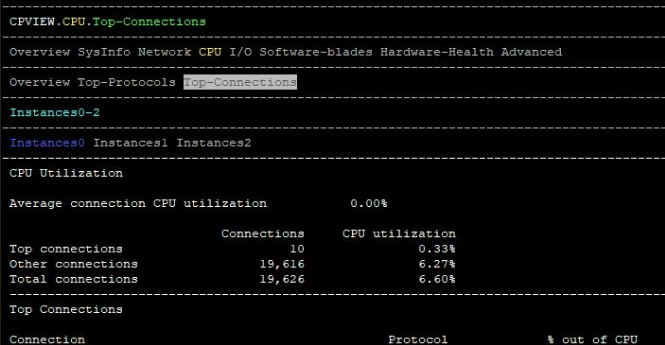

When I check for top connections, there is nothing unusual, and the connections are spread evenly across the instances:

`fw ctl multik stat`

| ID | Active | CPU | Connections | Peak |
|---|---|---|---|---|
| 0 | Yes | 3 | 18903 | 18990 |
| 1 | Yes | 2 | 18795 | 18892 |
| 2 | Yes | 1 | 18670 | 18732 |

---

Why is this marked as solved when the OP clearly states that the Jumbo did not solve the issue?

We are having the same issue on 5900 gateways upgraded from R80.10 to R80.30 and running the latest Jumbo (Take 155).

Any ideas?

---

After digging further into this, I believe the issue is that R80.20 moved encryption from the firewall workers to the SND. As a result, cphwd_q_init_ke was getting overrun, because the SND now carried a massive encryption load on top of the normal traffic queues it is responsible for.

Changing our worker/SND distribution and enabling Multi-Queue appears to have eliminated the problem for us. For example, on a 5900 appliance we went from 14 workers and 2 SND CPUs to 6 workers and 10 SND CPUs with Multi-Queue enabled. CPU utilization returned to pre-R80.30 levels, and that network became stable again.
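To see what a split like 14/2 versus 6/10 means in terms of individual CPUs, here is a minimal POSIX sh sketch. `corexl_layout` is a hypothetical helper, not a Check Point command, and it assumes the default layout visible in the `fw ctl affinity` output elsewhere in this thread: SND and interface IRQs on the lowest-numbered CPUs, fw_0 on the highest licensed CPU, fw_1 on the next one down, and so on. Confirm the real layout on the box with `fw ctl affinity -l -r`.

```shell
#!/bin/sh
# Print which CPUs end up as SND and which run firewall workers for a given
# split. ASSUMPTION: SND on the lowest CPUs, fw_0 on the highest licensed CPU.
corexl_layout() {
    total=$1      # licensed CPU cores
    workers=$2    # CoreXL firewall instances (workers)
    snd=$((total - workers))
    if [ "$workers" -lt 1 ] || [ "$snd" -lt 1 ]; then
        echo "need at least one SND CPU and one worker" >&2
        return 1
    fi
    echo "SND CPUs: 0-$((snd - 1))"
    echo "Worker CPUs: $snd-$((total - 1)) (fw_0 on CPU $((total - 1)))"
}

corexl_layout 16 14   # before: SND CPUs 0-1
corexl_layout 16 6    # after:  SND CPUs 0-9
```

The script only predicts the result; the split itself is still changed through `cpconfig` and takes effect after a reboot.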

---

Thanks!

So you have 10 SNDs and only 6 CoreXL instances?

What do your `sim affinity -l` and `fw ctl affinity -l -r` outputs look like?

---

There's nothing remarkable in the output of the `fw ctl affinity -l -a -v` commands. Because Multi-Queue is enabled, you only see "Interface x has multi queue enabled" for those interfaces, and CPUs without a worker show up blank.

From what I understand from talking with TAC, the SND load is balanced among the CPUs that are not running firewall workers.

So, for instance, if you had Multi-Queue enabled on interfaces 1 and 2 and had 10 SNDs, those 10 SND CPUs would share the load from both interfaces. Without Multi-Queue, each of those interfaces would be assigned to a single CPU.
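The difference TAC describes can be pictured with a toy model: each flow is hashed to one of N receive queues, and each queue is served by its own SND CPU. This is only an illustration under that assumption. `flows_per_queue` is a hypothetical helper, and `cksum` (CRC-32) stands in for the hash a real NIC computes over the packet headers. With one queue (no Multi-Queue), every flow lands on the same CPU; with 10 queues, the same flows spread out.

```shell
#!/bin/sh
# Toy model of Multi-Queue: hash each flow to one of N queues and count
# how many flows each queue (i.e. each SND CPU) receives.
# NOTE: cksum is a stand-in, NOT the NIC's real receive-side hash.
flows_per_queue() {
    nflows=$1
    nqueues=$2
    i=0
    while [ "$i" -lt "$nflows" ]; do
        # hypothetical flow label; a real NIC hashes the packet 5-tuple
        h=$(printf 'flow-%s' "$i" | cksum | cut -d' ' -f1)
        echo "queue $((h % nqueues))"
        i=$((i + 1))
    done | sort | uniq -c
}

flows_per_queue 100 1    # no Multi-Queue: all 100 flows on queue 0
flows_per_queue 100 10   # Multi-Queue: flows spread over up to 10 queues
```

A single heavy flow still maps to a single queue in this model, so Multi-Queue helps with many flows rather than with one large one.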

---

We are fighting a similar problem, but on R80.20, and the latest JHFs are not very helpful.

Can you post your Multi-Queue output (`cpmq get -v`)? We may have issues there and want to compare with a working config.

Thanks

---

We have had this issue since going from 100 to 1000 remote-access VPN users and switching from Visitor Mode to NAT-T.

TAC tried to solve it by setting the kernel parameter simi_reorder_hold_udp_on_f2v=0, but it didn't help much. The cphwd_q_init_ke process is still saturating one of the SND CPUs.

Does anybody know the best practice for core distribution to better handle Remote Access VPN traffic? Does increasing the number of SNDs from 2 to 6 make sense?

Version: R80.20, Take 141

Best regards,

Rafal

---

There is a hotfix for this issue: sk165853, "High CPU usage on one CPU core when the number of Remote Access users is high".

---

Has anybody noticed this behavior after installing that fix on R80.30 Gaia 2.6?

Good:

- VPN load is now spread smoothly across the available SND cores (not just the first SND core anymore).

- There are now as many cphwd_q_init_ke PIDs near the top of the CPU load list as there are fw_worker processes. Before, there was just one.

Bad:

- All cphwd_q_init_ke PIDs that show high CPU load in top are assigned to the same CPU core as fw_worker_0.

Let's take a closer look.

Here, for example, is an Open Server with 12 cores, licensed for 8. 2 cores are assigned to SND and 6 to firewall workers, which is pretty much the default.

`# fw ctl affinity -l -r -a -v`

CPU 0: eth0 (irq 147)

CPU 1: eth1 (irq 163) eth2 (irq 179)

CPU 2: fw_5

lpd dtlsd fwd wsdnsd in.acapd mpdaemon rad vpnd rtmd in.asessiond usrchkd pepd dtpsd cprid cpd

CPU 3: fw_4

lpd dtlsd fwd wsdnsd in.acapd mpdaemon rad vpnd rtmd in.asessiond usrchkd pepd dtpsd cprid cpd

CPU 4: fw_3

lpd dtlsd fwd wsdnsd in.acapd mpdaemon rad vpnd rtmd in.asessiond usrchkd pepd dtpsd cprid cpd

CPU 5: fw_2

lpd dtlsd fwd wsdnsd in.acapd mpdaemon rad vpnd rtmd in.asessiond usrchkd pepd dtpsd cprid cpd

CPU 6: fw_1

lpd dtlsd fwd wsdnsd in.acapd mpdaemon rad vpnd rtmd in.asessiond usrchkd pepd dtpsd cprid cpd

CPU 7: fw_0

lpd dtlsd fwd wsdnsd in.acapd mpdaemon rad vpnd rtmd in.asessiond usrchkd pepd dtpsd cprid cpd

CPU 8:

CPU 9:

CPU 10:

CPU 11:

All:

The current license permits the use of CPUs 0, 1, 2, 3, 4, 5, 6, 7 only.

Interface eth4: has multi queue enabled

Interface eth5: has multi queue enabled

Let's take a look at the affinity map for all cphwd_q_init_ke PIDs:

`# for p in $(ps -eo pid,comm | awk '$2 == "cphwd_q_init_ke" {print $1}'); do taskset -p "$p"; done`

pid 8290's current affinity mask: 80

pid 8291's current affinity mask: 80

pid 8292's current affinity mask: 80

pid 8293's current affinity mask: 80

pid 8294's current affinity mask: 80

pid 8295's current affinity mask: 80

pid 8296's current affinity mask: 80

pid 8297's current affinity mask: 80

pid 8298's current affinity mask: 80

pid 8299's current affinity mask: 80

pid 8300's current affinity mask: 80

pid 8301's current affinity mask: 80

pid 8302's current affinity mask: fff

pid 8330's current affinity mask: 80

pid 8331's current affinity mask: 80

pid 8332's current affinity mask: 80

pid 8333's current affinity mask: 80

pid 8334's current affinity mask: 80

pid 8335's current affinity mask: 80

pid 8336's current affinity mask: 80

pid 8337's current affinity mask: 80

pid 8338's current affinity mask: 80

pid 8339's current affinity mask: 80

pid 8340's current affinity mask: 80

pid 8341's current affinity mask: 80

pid 8342's current affinity mask: fff

So we have 26 cphwd_q_init_ke PIDs (why 26?). 2 of them are not restricted to any subset of CPUs (hex fff sets all 12 bits, i.e. cores 0-11), but 24 are restricted to core 7 (hex 80 is binary 10000000, and the cores in this bitmask are counted from right to left, starting at 0).
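The bitmask reading above can be checked mechanically. Below is a minimal POSIX sh sketch; `mask_to_cpus` is a hypothetical helper name, not part of Gaia or Check Point. It takes the hex mask that `taskset -p` prints and lists the CPUs whose bits are set, counting bit 0 (the rightmost) as CPU 0.

```shell
#!/bin/sh
# Decode a hex CPU affinity mask (as printed by "taskset -p") into the
# list of allowed CPU numbers. Bit N set means CPU N is allowed.
mask_to_cpus() {
    m=$((0x$1))          # hex string such as "80" or "fff" -> integer
    cpu=0
    out=""
    while [ "$m" -gt 0 ]; do
        if [ $((m & 1)) -eq 1 ]; then
            out="$out $cpu"
        fi
        m=$((m >> 1))    # move on to the next bit / next CPU
        cpu=$((cpu + 1))
    done
    echo "${out# }"      # strip the leading space
}

mask_to_cpus 80    # prints: 7
mask_to_cpus fff   # prints: 0 1 2 3 4 5 6 7 8 9 10 11
```

So mask 80 pins a thread to CPU 7 alone, which on this box is the core running fw_0, while fff allows all 12 cores.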

When we check top on a heavily loaded VPN gateway after installing this patch, it looks like this:

top - 09:56:01 up 2 days, 20:52, 1 user, load average: 7.45, 7.21, 6.94

Tasks: 233 total, 7 running, 226 sleeping, 0 stopped, 0 zombie

Cpu0 : 0.0%us, 0.0%sy, 0.0%ni, 43.1%id, 0.0%wa, 2.0%hi, 54.9%si, 0.0%st

Cpu1 : 0.0%us, 0.0%sy, 0.0%ni, 45.1%id, 0.0%wa, 2.0%hi, 52.9%si, 0.0%st

Cpu2 : 1.9%us, 3.8%sy, 0.0%ni, 59.6%id, 0.0%wa, 0.0%hi, 34.6%si, 0.0%st

Cpu3 : 1.9%us, 3.8%sy, 0.0%ni, 52.8%id, 0.0%wa, 0.0%hi, 41.5%si, 0.0%st

Cpu4 : 0.0%us, 4.0%sy, 0.0%ni, 66.0%id, 0.0%wa, 0.0%hi, 30.0%si, 0.0%st

Cpu5 : 1.9%us, 1.9%sy, 0.0%ni, 63.5%id, 0.0%wa, 0.0%hi, 32.7%si, 0.0%st

Cpu6 : 2.0%us, 2.0%sy, 0.0%ni, 66.7%id, 0.0%wa, 0.0%hi, 29.4%si, 0.0%st

Cpu7 : 0.0%us, 2.0%sy, 0.0%ni, 9.8%id, 0.0%wa, 0.0%hi, 88.2%si, 0.0%st

Cpu8 : 0.0%us, 0.0%sy, 0.0%ni,100.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu9 : 0.0%us, 0.0%sy, 0.0%ni,100.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu10 : 0.0%us, 0.0%sy, 0.0%ni,100.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu11 : 0.0%us, 0.0%sy, 0.0%ni,100.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Mem: 32680048k total, 10670220k used, 22009828k free, 465576k buffers

Swap: 33551744k total, 0k used, 33551744k free, 3085604k cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ P COMMAND

6754 admin 15 0 0 0 0 S 43 0.0 103:28.34 3 fw_worker_4

6755 admin 15 0 0 0 0 R 37 0.0 97:36.02 2 fw_worker_5

6750 admin 15 0 0 0 0 R 33 0.0 92:26.70 7 fw_worker_0

6753 admin 15 0 0 0 0 R 33 0.0 90:05.07 4 fw_worker_3

6752 admin 15 0 0 0 0 S 31 0.0 98:53.03 5 fw_worker_2

6751 admin 15 0 0 0 0 S 29 0.0 94:25.99 6 fw_worker_1

8298 admin 15 0 0 0 0 S 25 0.0 27:53.63 7 cphwd_q_init_ke

8297 admin 15 0 0 0 0 R 14 0.0 22:37.70 7 cphwd_q_init_ke

8292 admin 15 0 0 0 0 S 8 0.0 20:27.46 7 cphwd_q_init_ke

8296 admin 15 0 0 0 0 S 8 0.0 31:20.98 7 cphwd_q_init_ke

16558 admin 15 0 772m 216m 43m R 8 0.7 88:07.54 3 fw_full

17016 admin 15 0 327m 101m 31m S 6 0.3 22:11.44 3 vpnd

8290 admin 15 0 0 0 0 S 2 0.0 28:00.28 7 cphwd_q_init_ke

8291 admin 15 0 2248 1196 836 R 4 0.0 71:34.38 5 top

8290 admin 15 0 0 0 0 R 2 0.0 28:00.27 7 cphwd_q_init_ke

So we see only 6 of these 26 PIDs for cphwd_q_init_ke here.

Why?

Of course, we could use our root privileges to reassign these PIDs to other cores with taskset, but I guess that would be called unsupported, and we might break something. Maybe the developers had a good reason to bind these PIDs to the same core as fw_worker_0.

Any thoughts on that?

@PhoneBoy Maybe you can ask R&D whether this is the intended behavior with this patch? That would really help.
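In the top output above, the hot spot is Cpu7, which runs fw_worker_0 and the pinned cphwd_q_init_ke threads, at 88.2% si (softirq), while Cpu8 to Cpu11 sit idle because the license only permits CPUs 0-7. A small filter can list cores above a softirq threshold. The sketch below is a hypothetical helper (`hot_softirq_cpus`) and assumes top prints per-CPU lines in the older format shown above ("Cpu7 : ... 88.2%si, ..."); newer top versions print "%Cpu7" and would need a different pattern.

```shell
#!/bin/sh
# Report CPUs whose softirq (si) share exceeds a threshold, reading the
# per-CPU lines of top output on stdin (older procps format assumed).
hot_softirq_cpus() {
    awk -v limit="${1:-50}" '
        /^Cpu[0-9]+/ && match($0, /[0-9.]+%si/) {
            si = substr($0, RSTART, RLENGTH - 3) + 0   # drop the "%si" suffix
            if (si > limit) print $1 " " si "%si"
        }
    '
}

# On the gateway, assuming top is set to show individual CPUs:
# top -b -n 1 | hot_softirq_cpus 50
```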

---

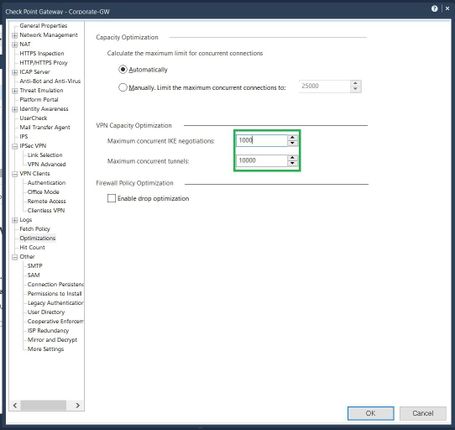

2 x 15600, 28 cores, R80.30, 2500 RUVPN

1- Increase the CPUs assigned to SND

VPN encryption and decryption are handled by cphwd_q_init_ke, which runs on the SND, so you need to increase the number of SND cores in the firewall. Reducing the number of workers assigns more CPUs to the SND.

- In a clustering environment (28 cores available):

  - Plan a maintenance window for the change. It requires a reboot, because the CoreXL distribution can only be applied at startup.

  - To change the SNDs:

    - Run `cpconfig` on the standby member.

    - Choose the CoreXL distribution option and reduce the number of workers by 6. For example, if the number is 28, change it to 22 (6 more cores to SND).

    - Reboot the standby. As we're decreasing the workers, the standby will take over. This will drop some stateful connections, since there is no sync between the members at this point.

    - Make the same change on the other member and reboot it.

2- Adjust VPN Capacity Optimization (check for encrypt/decrypt failures with CPView):

- Increase Maximum concurrent IKE negotiations

- Increase Maximum concurrent tunnels

---

Hello,

We are seeing the same behavior: R80.30 JHFA Take 210, 18 workers, 6 SNDs, Multi-Queue enabled on 2 interfaces, and cphwd_q_init_ke is still mostly on CPU 23 (where fw_worker_0 runs):

ps -eo pid,psr,comm | grep "cphwd_q_init_ke"

10271 23 cphwd_q_init_ke

10272 23 cphwd_q_init_ke

10273 23 cphwd_q_init_ke

10274 23 cphwd_q_init_ke

10275 23 cphwd_q_init_ke

10276 23 cphwd_q_init_ke

10277 23 cphwd_q_init_ke

10278 23 cphwd_q_init_ke

10279 23 cphwd_q_init_ke

10280 23 cphwd_q_init_ke

10281 23 cphwd_q_init_ke

10282 23 cphwd_q_init_ke

10283 23 cphwd_q_init_ke

10284 23 cphwd_q_init_ke

10285 23 cphwd_q_init_ke

10286 23 cphwd_q_init_ke

10287 23 cphwd_q_init_ke

10288 23 cphwd_q_init_ke

10289 23 cphwd_q_init_ke

10290 23 cphwd_q_init_ke

10291 23 cphwd_q_init_ke

10292 23 cphwd_q_init_ke

10293 23 cphwd_q_init_ke

10294 23 cphwd_q_init_ke

10295 23 cphwd_q_init_ke

10296 23 cphwd_q_init_ke

10297 23 cphwd_q_init_ke

10298 23 cphwd_q_init_ke

10299 3 cphwd_q_init_ke

All of them are under high load. With the same split but without Multi-Queue, nothing changed either.
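The `ps` listing above boils down to 28 threads on CPU 23 and 1 on CPU 3. For longer listings, a per-CPU count is easier to read. The sketch below is a hypothetical POSIX sh/awk helper (`threads_per_cpu`) that reads `ps -eo pid,psr,comm` output on stdin. Keep in mind that PSR is the CPU a thread last ran on, so this is a snapshot; `taskset -p PID` shows which CPUs a thread is actually allowed to use.

```shell
#!/bin/sh
# Count threads of one process name per CPU from "ps -eo pid,psr,comm"
# output on stdin. The header line is ignored because its 3rd column is
# "COMMAND". Default name: cphwd_q_init_ke.
threads_per_cpu() {
    awk -v name="${1:-cphwd_q_init_ke}" '
        $3 == name { count[$2]++ }
        END { for (cpu in count) print "CPU " cpu ": " count[cpu] }
    ' | sort -t ' ' -k 2,2n
}

# On the gateway:
# ps -eo pid,psr,comm | threads_per_cpu
```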

Any ideas?

Regards

---

In my setup, Multi-Queue plus moving drastically more cores from workers to SND took care of the issue. I ended up with about half of the cores as SND and half as workers.

---

What does your cphwd_q_init_ke distribution look like now?

---

I've since replaced the gateway with one significantly larger than the one I had when I first posted this issue, and I no longer see that process at all, but that is completely different hardware. During the final year of the old gateway, we increased the number of SNDs and implemented Multi-Queue, which dramatically improved the performance of that process.

---

Thanks for the info. We'll try changing the split.

Best regards

---

I resolved the cphwd_q_init_ke issue, which was caused by a high number of Remote Access users, by switching the RA encryption from 3DES to AES.

---

Agree with Dilian as well: move from 3DES to AES if you're using 3DES for encryption.

---

That solves exactly this issue, and it applies to both RA and site-to-site VPNs. Refer to https://supportcenter.checkpoint.com/supportcenter/portal?eventSubmit_doGoviewsolutiondetails=&solut...

---

Reviving this thread; perhaps it helps someone in the future.

We had the same issue on a 5100 cluster yesterday, with cphwd_q_init_ke maxing out the CPU. This cluster is the hub for around 40 S2S VPNs. The customer team had been troubleshooting a different issue and changed the VPN community to establish a tunnel per pair of hosts.

Come Monday morning, when the remote sites started up for business, the poor cluster tried to establish around 37000 tunnels, which was simply too much. Changing the community setting to one tunnel per gateway pair brought CPU utilization back to baseline.
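The jump from roughly 40 to roughly 37000 tunnels follows from how the two community settings count tunnels: one per peer gateway, versus one per communicating pair of hosts behind the gateways. The sketch below (a hypothetical `tunnel_counts` helper in POSIX sh) only does that arithmetic. The per-site host-pair numbers are made up, chosen so that 40 peers at an even 925 host pairs each add up to the 37000 reported here.

```shell
#!/bin/sh
# Rough tunnel counts for a hub with several VPN peers.
# Each argument is the number of communicating host pairs behind one peer
# site. The inputs used below are HYPOTHETICAL.
tunnel_counts() {
    peers=$#
    pairs=0
    for p in "$@"; do
        pairs=$((pairs + p))
    done
    echo "one tunnel per gateway pair: $peers"
    echo "one tunnel per pair of hosts: $pairs"
}

# 40 peers, each with a hypothetical 925 communicating host pairs
args=""
i=0
while [ "$i" -lt 40 ]; do
    args="$args 925"
    i=$((i + 1))
done
tunnel_counts $args   # unquoted on purpose: split into 40 arguments; prints 40 and 37000
```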
