R81.20 and Ring buffers

As more and more of our customers move to R81.20, we seem to be getting more and more performance-related tickets.

One thing I noticed is that the ring buffers for R81.20 are smaller than they used to be. I think ever since R77 the ring buffers tended to be 1024 for both RX and TX, and they were smaller before that.

I have hardly seen any issues with RX ring buffers set to 1024, but now we see they are smaller on clean installations of R81.20, and we see too many customers with weird performance issues. And I see RX buffers set to 512 or even 256.

1. Is there a reason why these RX buffers got decreased by default? I could find no mention of that change in any documentation or SecureKnowledge article.

2. Is there any reason why I should NOT set them back to 1024 for all of these customers?

Regards, Hugo.

<< We make miracles happen while you wait. The impossible jobs take just a wee bit longer. >>

21 Replies

I see what you are saying. I never really paid too much attention to it, but comparing my Azure lab and a regular one, I see the difference.

Andy

regular lab:

CP-FW-01> show interface eth0 rx-ringsize

Receive buffer ring size:256

Maximum receive buffer ring size:4096

CP-FW-01>

CP-FW-01> show interface eth0 tx-ringsize

Transmit buffer ring size:1024

Maximum transmit buffer ring size:4096

CP-FW-01>

Azure:

cpazurecluster1> show interface eth0 rx-ringsize

Receive buffer ring size:9362

Maximum receive buffer ring size:18139

cpazurecluster1>

cpazurecluster1> show interface eth0 tx-ringsize

Transmit buffer ring size:1024

Maximum transmit buffer ring size:2560

cpazurecluster1>

Best,

Andy

@the_rock can you please provide the output of ethtool -i eth0 and ethtool -S eth0 on the Azure system that is reporting the 9362 RX ring size? The somewhat odd value (9362) makes me think it may be dynamically allocated or scaled.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

It's a lab, so that would make sense.

Andy

[Expert@azurefw:0]# ethtool -i eth0

driver: hv_netvsc

version:

firmware-version: N/A

expansion-rom-version:

bus-info:

supports-statistics: yes

supports-test: no

supports-eeprom-access: no

supports-register-dump: no

supports-priv-flags: no

[Expert@azurefw:0]# ethtool -S eth0

NIC statistics:

tx_scattered: 0

tx_no_memory: 0

tx_no_space: 0

tx_too_big: 0

tx_busy: 0

tx_send_full: 0

rx_comp_busy: 0

rx_no_memory: 0

stop_queue: 0

wake_queue: 0

vf_rx_packets: 5402818

vf_rx_bytes: 5051231197

vf_tx_packets: 45000650

vf_tx_bytes: 4863385021

vf_tx_dropped: 0

tx_queue_0_packets: 0

tx_queue_0_bytes: 0

rx_queue_0_packets: 40119764

rx_queue_0_bytes: 8056670503

[Expert@azurefw:0]#

Best,

Andy

The size of the ring buffer refers to the number of slots/entries/descriptors in the ring buffer.

If any change is required please refer to sk42181 - How to increase sizes of buffer on SecurePlatform/Gaia for Intel NIC and Broadcom NIC.

Increasing the size of receive and transmit ring buffers allocated by the NIC driver allows the NIC driver to queue more incoming/outgoing packets, and thus decreases packet drops (especially in scenarios with high CPU utilization).

What are the side effects of increasing the buffer? What are the downsides of implementing the buffer increase?

Increasing the ring buffer size causes the interface to store more data before sending an interrupt to the CPU to process that data. The longer (bigger) the queue, the longer the time it will take for the packets to be de-queued (processed). This will cause latency in traffic, especially if the majority of the traffic are small packets (e.g., ICMP packets, some UDP datagrams). The main reason to increase the ring buffer size on an interface is to lower the amount of interrupts sent to the CPU. Due to the inevitable latency factor, increasing the ring buffer size must be done gradually, followed by a test period.
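
To put rough numbers on that latency argument, here is a minimal Python sketch. It assumes a simplified model in which a full ring is emptied at a steady rate, so the last frame waits for every slot ahead of it. The function name and the 1,000,000 packets-per-second drain rate are hypothetical values chosen for illustration, not measurements from any gateway, and the model ignores interrupt coalescing and polling behaviour.

```python
def worst_case_queue_delay_us(ring_slots, drain_pps):
    """Delay in microseconds seen by the last frame in a full ring.

    Assumption: the CPU drains the ring at a constant drain_pps
    packets per second, so the wait is ring_slots / drain_pps.
    """
    if drain_pps <= 0:
        raise ValueError("drain_pps must be positive")
    return ring_slots * 1_000_000 / drain_pps


if __name__ == "__main__":
    # Hypothetical drain rate, for illustration only.
    drain_pps = 1_000_000
    for slots in (256, 512, 1024, 4096):
        delay = worst_case_queue_delay_us(slots, drain_pps)
        print(f"{slots:5d} slots -> up to {delay:.0f} us of queueing delay")
```

Under this model the worst-case delay grows linearly with the ring size, which is one way to see why a gradual increase followed by a test period is the safer route.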

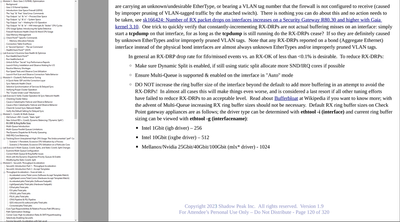

Here you have a screenshot of default values:

Not sure about the source. But I have seen 40 Gbps QLS cards get 512 or even 256 size buffers, and that seems to contradict this source. So this is definitely something that needs attention, along with better information on what other steps should be taken.

At the moment I have a ticket open with TAC and they have one with R&D, and it is not yet clear how it should be configured and where the issue might be.

But for this customer the trouble started when going from R81.20 Jumbo Take 26 to Jumbo Take 41. And it still persists in Jumbo Take 45.

<< We make miracles happen while you wait. The impossible jobs take just a wee bit longer. >>

Let us know what they say Hugo.

Best,

Andy

Hi Hugo,

You are reporting performance problems in the later releases and surmising that the RX ring buffer size is the cause. Are you seeing an RX-DRP rate in excess of 0.1% on any interfaces? Unfortunately, in Gaia 3.10 RX-DRP can now also be incremented upon receipt of an undesirable Ethertype (like IPv6) or an improperly pruned VLAN tag (what I call "trash traffic" that we can't handle anyway), not just due to a full ring buffer, which causes loss of frames that we want to process. In Gaia 2.6.18 this trash traffic was silently discarded and did not increment RX-DRP.

To compute the true RX-DRP rate for ring buffer misses you'll have to do some further investigation with ethtool -S. See sk166424: Number of RX packet drops on interfaces increases on a Security Gateway R80.30 and higher .... As long as the true RX-DRP rate is below 0.1% you shouldn't worry about it. I know this can be difficult to accept but not all packet loss is bad; TCP's congestion control algorithm is actually counting on it.
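
As a rough illustration of that check, the Python sketch below parses `ethtool -S` style output and computes a drop percentage to compare against the 0.1% threshold. The counter names `rx_packets` and `rx_missed_errors` and the sample numbers are assumptions for illustration only. Which counter actually reflects ring buffer misses depends on the NIC driver, so the SK mentioned above is the place to confirm that for your hardware.

```python
def parse_ethtool_stats(text):
    """Turn 'name: value' lines from ethtool -S output into a dict of ints."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.strip().partition(":")
        value = value.strip()
        # Skip headers such as 'NIC statistics:' and non-numeric values.
        if sep and value.isdigit():
            stats[name.strip()] = int(value)
    return stats


def drop_rate_percent(dropped, received_ok):
    """Dropped frames as a percentage of successfully received frames."""
    if received_ok == 0:
        return 0.0
    return 100.0 * dropped / received_ok


# Made-up sample; counter names are assumed and vary by driver.
SAMPLE = """NIC statistics:
     rx_packets: 2000000
     rx_missed_errors: 500
"""

if __name__ == "__main__":
    stats = parse_ethtool_stats(SAMPLE)
    rate = drop_rate_percent(stats["rx_missed_errors"], stats["rx_packets"])
    verdict = "investigate" if rate > 0.1 else "below the 0.1% threshold"
    print(f"ring buffer miss rate: {rate:.3f}% ({verdict})")
```

Feeding it real output is a matter of saving `ethtool -S <interface>` to a file and passing the contents to `parse_ethtool_stats`.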

On every system I've seen the ring buffer sizes are: 1GBit (256), 10Gbit (512), 40-100Gbit (1024). Are you sure you are not looking at the TX ring buffer setting? Those are sometimes smaller. Generally it is not a good idea to increase ring buffer sizes beyond the default unless true RX-DRPs are >0.1%, (and you have allocated all the SNDs you can) as doing so will increase jitter, possibly confusing TCP's congestion control algorithm and causing Bufferbloat, which actually makes performance worse under heavy load.
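
The rule of thumb in the paragraph above can be written down as a small decision helper. This is only a sketch of the advice as stated in this post: the function and dictionary names are made up, the default sizes are the ones listed above rather than an official table, and the 0.1% threshold applies to the "true" RX-DRP rate.

```python
# RX ring sizes per link speed (Gbit/s) as listed in the post above.
OBSERVED_DEFAULT_RX_RING = {1: 256, 10: 512, 40: 1024, 100: 1024}

DROP_THRESHOLD_PCT = 0.1


def matches_observed_default(link_gbps, rx_ring_size):
    """True if the size equals the default reported above for that speed."""
    return OBSERVED_DEFAULT_RX_RING.get(link_gbps) == rx_ring_size


def rx_ring_advice(true_drop_pct, spare_cores_for_snd):
    """Return a short recommendation following the rule of thumb above."""
    if true_drop_pct <= DROP_THRESHOLD_PCT:
        return "leave the RX ring at its default"
    if spare_cores_for_snd:
        return "allocate more SND cores first"
    return "consider a gradual RX ring increase and retest"


if __name__ == "__main__":
    print(matches_observed_default(10, 512))
    print(rx_ring_advice(0.05, spare_cores_for_snd=False))
    print(rx_ring_advice(2.0, spare_cores_for_snd=True))
    print(rx_ring_advice(2.0, spare_cores_for_snd=False))
```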

As to the ring buffer size of 9362 for the hv_netvsc driver in Azure reported by @the_rock, it is my impression that Azure (or perhaps the current kernel version used by Gaia) does not support vRSS, which means multi-queue is not supported either. Therefore no matter how many SND cores you have, only one of them can empty a particular interface's ring buffer. I'd surmise that the strangely large 9362 RX ring buffer size is an attempt to compensate for this limitation, and it may even increase itself automatically as load demands. This has already been discussed to some degree here: distribution SNDs in hyper-v environment

But it may not matter since traditionally the ring buffer was used as a bit of a "shock absorber" to absorb the impact of a flood of frames making the transition across the bus from a hardware-driven buffer (on the NIC card itself) to a software-driven RX ring buffer in RAM. But in Azure everything is software and there is no physical NIC, at least that is exposed to the VM. That is why there can probably never be interface errors like RX-ERR or possibly even RX-OVR so the size of the ring buffer may not really matter either.

Once again if the "true" RX-DRP rate is <0.1% I'd leave the RX ring buffer size at the default for physical interfaces. Read the Bufferbloat article for a good explanation of why.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Actually increasing Rx buffers to 1024 had the most impact. But it did not resolve the issue.

However, as was explained by Check Point, increasing Rx buffers in a number of steps is about as logical to R&D as a doctor who listens to your complaints and prescribes antibiotics. And as a good doctor they even double the dose in a few days if the desired effect is not reached.

So at this moment I think your comments have yet to be noted by R&D.

Today it seems we may have a match to an issue under investigation. For this there is a custom hotfix that will be portfixed for the customer and tested in the setup they have replicated for us. So we hope this will fix the issue at hand.

Increasing Rx buffers to 4096 did resolve RxDrops on the physical interfaces but now we see them on the Warp interfaces.

Which pretty much tells us that the first action by R&D was not the best approach.

Playing with Ring Buffers is much more complicated these days but that message is not yet part of R&D doctrine.

<< We make miracles happen while you wait. The impossible jobs take just a wee bit longer. >>

Any word from TAC on it?

Andy

It seems we have a match on https://support.checkpoint.com/results/sk/sk181860 and we are waiting for the portfix and a maintenance window to implement.

<< We make miracles happen while you wait. The impossible jobs take just a wee bit longer. >>

Thanks for the update.

Andy

I wonder what is behind "a high number of interfaces" as mentioned in the SK. I have gateways with tens of VLANs on R81, R81.10, and R81.20 and haven't encountered this situation.

Totally logical point @Alex-

Best,

Andy

Would also be curious to know how many is too many. @Hugo_vd_Kooij how many total interfaces are being reported by fw ctl iflist on the system with the issue?

Based on the function name I'd speculate there is an issue with the code that maps interface numbers (shown by fw ctl iflist) to the raw device names, which would be needed for just about every packet passing through. Perhaps there is some kind of index or hash function that allows fast searching of this cache, but it breaks down with too many interfaces present and has to go back to linear searching? The threshold is probably some power of two such as 256, 512, etc. The overall maximum number of supported interfaces for a standard gateway is 1024 (VSX is 4096), so I'll go out on a limb and guess that Hugo's gateway has more than 512 interfaces.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

I recall from the last call this was something in the order of 120 interfaces.

But as I don't manage it I have no actual figure, as that information went straight from the customer to TAC.

The thing to look out for if you run perftop is whether the following entry is at the top:

get_dev2ifn_hash

By now I know that to be a big red flag.

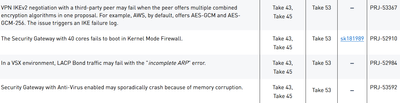

The hotfix for this is scheduled for the next Jumbo Hotfix after Take 53.

<< We make miracles happen while you wait. The impossible jobs take just a wee bit longer. >>

The issue in sk181860 is now also addressed in Jumbo Hotfix Take 54.

<< We make miracles happen while you wait. The impossible jobs take just a wee bit longer. >>

For the WRP interfaces, the drops are stated to be 'cosmetic':

https://support.checkpoint.com/results/sk/sk178547

-------

Please press "Accept as Solution" if my post solved it 🙂

The source is Check Point Professional Services - Health Check Report. The screenshot is from Timothy Hall.

There are still important notes on Jumbo Take 45 that are addressed in Take 53: https://sc1.checkpoint.com/documents/Jumbo_HFA/R81.20/R81.20/Important-Notes.htm

I get a lot of RX buffer overflows since migrating from 5088/23800 to "newer" firewalls like 7000, 26000, 9100.

But it only seems to affect interfaces where S2S IPsec traffic is happening.

E.g., with 260 Mbit of IPsec traffic on a 10G interface I get up to 2% of packets dropped.

When increasing the RX buffer from the default 512 to 2048, I still get around 0.2% packet drops.

When increasing the RX buffer to 4096 there are no more drops.

Does anyone see the same behaviour?

Yes, this is a known issue with the Intel igb/ixgbe/ice drivers. If you have a large number of VPN tunnels terminating on an interface (presumably the external, Internet-facing one) using any of these drivers, they do not support Multi-Queue traffic balancing by SPIs on the SND cores. This will result in ring buffer overflows. Here is an excerpt from my Gateway Performance Optimization course discussing it:

IGB/IXGBE/ICE Driver Issues Balancing IPSec Traffic on SNDs

If possible, try to avoid terminating a large number of IPSec VPNs on an Ethernet interface that is utilizing Intel’s igb, ixgbe, or ice driver. Generally, for most organizations, all VPN tunnels terminate on the External (Internet-facing) interface. These older drivers do not support the balancing of IPSec traffic by SPI, and may cause severe SND load imbalances if a large number of IPSec tunnels are in use: sk180678: Improved Multi-Queue distribution of IPsec SPI traffic & sk183525: High CPU usage on one SND core.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course
