Migrating cluster from old to new hardware

Hi,

We are finally replacing our firewall cluster of old UTM appliances with 5600 appliances. I would like to keep the same names in the policy, but since the interface names change, I would like to know the best way to migrate to the new appliances with minimal outage.

My plan was to fail over to the HA member and then:

- move the cables from the primary appliance to the new primary 5600 appliance;

- run a migrate export of the policy, then remove all references to the existing cluster from the policy and delete the whole cluster from the management server;

- create a new cluster with initially one member (the new primary 5600), establish SIC, and configure the cluster with all the new interfaces;

- add the cluster to the rules from which the old cluster was removed;

- remove the cables from the old HA firewall while installing the policy on the new primary;

- connect the new HA 5600, add it to the cluster, and install policy.

Any other (or better) recommendations for a smooth migration to the new hardware?

Or can I just delete 1 cluster member and add the new hardware with different interface names to the cluster object?

Many thanks.


Is this a Full-HA Cluster (Management on same box as gateway)? Or is it a distributed environment (Management running on separate server)?

I am asking because you mention migrate export in your description.

If it is distributed, you can easily change the existing cluster object to have interface names adjusted to new names and also adjust appliance hardware type.


Hi Norbert,

It's a distributed environment. This is only a hardware replacement of the cluster, so it should be straightforward. Management is running R80.10, the existing cluster runs R77.20, and the new hardware will run R80.10.

We just need to keep the outage window as small as possible.

My only concern is the change in interface names and how to make that as smooth as possible.

The migrate export is basically only a safety measure, so that we have a known-good copy of the policy configuration to roll back to if the migration fails.

In short: is it easier to remove the whole cluster object from the policy and create a new one with the new hardware and interface names, or can I remove one firewall object from the cluster and add a new one with the new interfaces, even though the two cluster members will then have different interface names?

Thanks again.


It's then really straight forward:

1. Start with standby node and migrate configuration of the node to the new node:

I would do this by using actual clish config, editing it to change interface names (best with search/replace)

Don't forget to do other config changes not represented in clish to the new node (scripts/fwkern.conf/etc)

2. Disconnect old standby from network

3. Conncet new standby to network

4. Reset sic through smartconsole

5. Change version/appliance type in general properties of cluster object

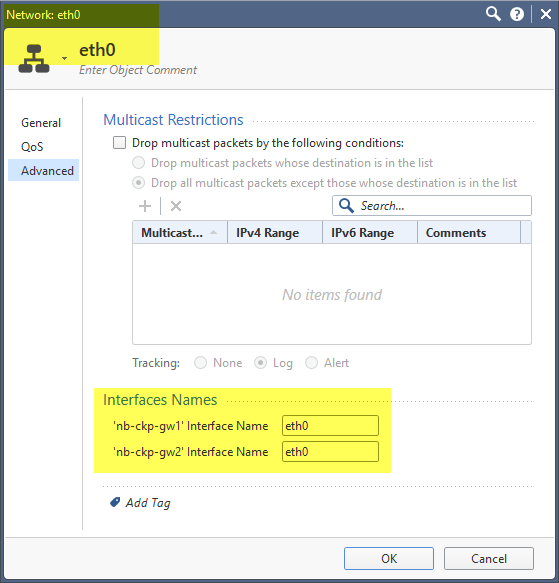

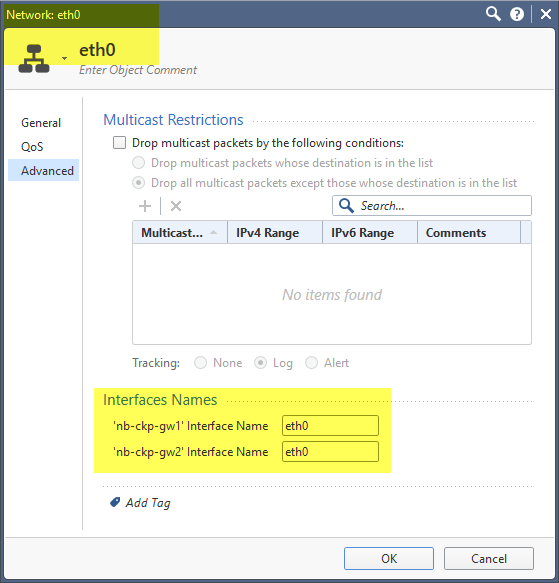

6. Change advanced configuration of interfaces in the topology/network management part to allow different interface names on both nodes. It doesn't matter if the cluster interface name is the new one or the old one during migration. But I prefer to have the new one already.

7. Install policy

8. Failover to new node with new version using cphastop on active node

9. Repeat above steps 1-7 to 2nd old node.
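For the search/replace in step 1, a small script can be safer than editing by hand, because a naive replace of `Lan1` would also hit `Lan10`. The following is only a minimal sketch: the function name `rename_interfaces`, the interface names in the mapping, and the sample config lines are assumptions for illustration, not taken from a real appliance. It renames whole interface names only, keeps VLAN suffixes such as `.100`, and applies all renames in a single pass so that swapped names do not overwrite each other.

```python
import re


def rename_interfaces(config_text, mapping):
    """Rename interface names in a saved clish configuration dump.

    mapping is {old_name: new_name}. Both the mapping and the config
    format are assumptions; check the result by eye before loading it
    on the new node.
    """
    if not mapping:
        return config_text
    # Longest names first so that a longer name is preferred over a prefix.
    names = sorted(mapping, key=len, reverse=True)
    # Match a name only when it is not embedded in a longer token:
    # "Lan1" must not match inside "Lan10" or "Lan1-01", but it should
    # match in "Lan1.100" so that VLAN interfaces follow the rename.
    pattern = re.compile(
        r"(?<![\w.-])(" + "|".join(re.escape(n) for n in names) + r")(?![\w-])"
    )
    # One pass over the text, so renaming A->B and B->A at once is safe.
    return pattern.sub(lambda m: mapping[m.group(1)], config_text)


if __name__ == "__main__":
    # Hypothetical old config lines and old->new interface names.
    old_config = (
        "set interface Internal ipv4-address 192.0.2.1 mask-length 24\n"
        "set interface Lan1 state on\n"
        "set interface Lan10 state on\n"
        "set interface Lan1.100 state on\n"
    )
    print(rename_interfaces(old_config, {"Internal": "eth1", "Lan1": "eth2"}))
```

The rewritten text still has to be reviewed and loaded on the new node manually; the script does not touch anything outside clish, such as custom scripts or kernel parameter files.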


Thanks Norbert,

I'll give it a go.

I have done similar replacements in the past, from open server to appliance or Solaris to appliance. They were always straightforward, but somehow this one confused me, maybe because it looks different in R80.10.

Thanks a lot.

Jan


Any other considerations if using VRRP?

Thank You


It should be fairly similar steps.


I'll piggyback on this post with another question. I know mismatched hardware in a cluster is not supported, but that aside, would it actually still work?

What is it about the cluster members that must be the same for ClusterXL to be happy? I suspect the number of CPU cores has to match. What about the amount of RAM? And interface names, i.e. could one server have eth0, eth1, eth2, etc. and the other have WAN, LAN1, LAN2, etc.?

I'm wondering about the best way to migrate from open server to appliance. The hardware will clearly be different. Can I add one of the new appliances to the existing open server cluster and flip over to it to cut out downtime? Or are they so mismatched that my only option is downtime and a physical cable swap from old to new?


ClusterXL does not take RAM into account. You can have one member with 16 GB and another with 32 GB and still run an active-standby cluster.

ClusterXL does not take interface names into account either; only the IPs matter. During policy push, the gateway assigns the IPs to the actual interfaces on the machine, even if a different interface name is configured in Topology.

Only the number of CPU cores matters: the machine with more IPv4 cores will go into the Ready state. This might no longer be relevant for R80.40+.
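As a rough pre-check before the maintenance window, the three points above can be written down as a comparison of the two members. This is only a sketch of the rule of thumb given in this reply, not an official compatibility check: the `MemberSpec` fields and the `mismatch_report` helper are hypothetical, and the values have to be collected by hand from each box.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemberSpec:
    """Hand-collected facts about one cluster member (hypothetical fields)."""
    name: str
    cores: int
    ram_gb: int
    interfaces: tuple


def mismatch_report(a, b):
    """Return (blocking, informational) lists of differences.

    Follows the rule of thumb from this thread only: a differing core
    count is treated as blocking, RAM and interface names are not.
    """
    blocking, info = [], []
    if a.cores != b.cores:
        more = a if a.cores > b.cores else b
        blocking.append(
            f"core count differs ({a.name}={a.cores}, {b.name}={b.cores}); "
            f"{more.name} may stay in the Ready state"
        )
    if a.ram_gb != b.ram_gb:
        info.append(f"RAM differs ({a.ram_gb} GB vs {b.ram_gb} GB)")
    if a.interfaces != b.interfaces:
        info.append("interface names differ; make sure the IPs line up")
    return blocking, info


if __name__ == "__main__":
    # Example values are made up for illustration.
    old = MemberSpec("old-node", 4, 16, ("eth0", "eth1", "eth2"))
    new = MemberSpec("new-node", 8, 32, ("WAN", "LAN1", "LAN2"))
    blocking, info = mismatch_report(old, new)
    for line in blocking:
        print("BLOCKING:", line)
    for line in info:
        print("info:", line)
```

Whether a core-count difference still matters on R80.40 and later is left open above, so treat a "blocking" result as a prompt to verify on the actual version rather than as a verdict.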

Kind regards,

Jozko Mrkvicka



Hello Jozko,

I am doing a similar migration from 13500 appliances to 15400 models and need limited downtime. I would also like to keep the rollback as simple as possible.

The 13500 and 15400 seem to display the same core count in cpview. However, I am unsure whether the two would sync anyway, so it would probably be best to disconnect the active member just before policy install.

Any thoughts on this?

The second method I am thinking about is to:

1. Disconnect the standby member early in the maintenance window and create a new cluster object with the same cluster IP.

2. Add the new standby member to the new cluster, get interfaces, and complete the topology setup and cluster settings.

3. Do a Where Used and Replace to swap the old cluster object for the new one, and set the policy target to the new firewall cluster.

4. Disconnect the old active member and finally push policy to the new firewall.

One gotcha in my environment is that we use Identity Agent, so I believe users will need to trust the new gateway certificate.

Thanks for your input.
