High latency on CPAP-SG3600 HA-cluster running Gaia R81.20. HCP complains about 99.26% F2F traffic.

Greetings,

I'm facing issues with high latency on a CPAP-SG3600 HA-cluster. I have a TAC-case on this topic, but I wanted to check if anyone has some pointers.

This HA-cluster has been doing just fine until mid-February. Then we started facing periods with extreme latency through the active firewall (3000 ms+), and forcing a failover would always solve it. Then the issue would just re-occur a few hours later. Rinse and repeat.

The HA-cluster ran Gaia R81.20 GA from November until mid-February without issues. In late February, we applied R81.20 JHF Take 8, but it made no difference. Last week I did a clean install from USB on both gateways and also ran HDT (Hardware Diagnostic Tools), but the behaviour is still the same.

After digging through /var/log/messages I noticed messages pointing towards issues with PrioQ. After disabling PrioQ the latency issues went away at first, but they keep returning: not as frequently as before, but still often. I also stumbled upon sk180437 - Unexpected traffic latency or outage on a Security Gateway / Cluster after policy installation. I noticed we had messages in /var/log/messages similar to those referenced in the SK, so I applied the solution. This didn't seem to change anything.

https://support.checkpoint.com/results/sk/sk180437

Upon reviewing my latest HealthCheck Point (HCP) report, I noticed it complaining about:

F2F rate is high. Can be reduced by optimizing rule-base, changing blades or additional configurations - check 'sk98348' section (3-5). packets in the last 5 seconds: 214177, slow path packets: 212613, percentage: 99.269762859690815%
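For anyone wanting to double-check the figure HCP reports, the percentage can be recomputed from the two counters quoted in the message. This is only a minimal sketch; the function name `f2f_percentage` is made up for illustration and is not part of HCP.

```python
def f2f_percentage(total_packets: int, slow_path_packets: int) -> float:
    """Return the share of packets that took the slow (F2F) path, in percent."""
    if total_packets <= 0:
        raise ValueError("total_packets must be positive")
    return slow_path_packets / total_packets * 100


# Counters copied from the HCP message above (5-second sample).
pct = f2f_percentage(total_packets=214177, slow_path_packets=212613)
print(f"{pct:.2f}% of packets in the sample went F2F")
```

The result agrees with the percentage HCP printed, so the warning reflects the raw counters rather than a reporting glitch.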

This struck me as quite odd. We don't have anything in our firewall policy that should hamper SecureXL in such a way:

[Expert@:0]# fwaccel stat

+---------------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+---------------------------------------------------------------------------------+

|0 |KPPAK |enabled |eth5,Mgmt,eth1,eth2,eth3,|Acceleration,Cryptography |

| | | |eth4 | |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,3DES,DES,AES-128,AES-256,|

| | | | |ESP,LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256, |

| | | | |SHA384,SHA512 |

+---------------------------------------------------------------------------------+

Accept Templates : enabled

Drop Templates : enabled

NAT Templates : enabled

LightSpeed Accel : disabled

But we are clearly having issues with accelerated traffic as pretty much all traffic is hitting F2F:

[Expert@:0]# fwaccel stats -s

Accelerated conns/Total conns : 22/687 (3%)

LightSpeed conns/Total conns : 0/687 (0%)

Accelerated pkts/Total pkts : 96180156/7276233558 (1%)

LightSpeed pkts/Total pkts : 0/7276233558 (0%)

F2Fed pkts/Total pkts : 7180053402/7276233558 (98%)

F2V pkts/Total pkts : 3707650/7276233558 (0%)

CPASXL pkts/Total pkts : 188774/7276233558 (0%)

PSLXL pkts/Total pkts : 87362563/7276233558 (1%)

CPAS pipeline pkts/Total pkts : 0/7276233558 (0%)

PSL pipeline pkts/Total pkts : 0/7276233558 (0%)

QOS inbound pkts/Total pkts : 0/7276233558 (0%)

QOS outbound pkts/Total pkts : 0/7276233558 (0%)

Corrected pkts/Total pkts : 0/7276233558 (0%)

[Expert@:0]# fwaccel stats -p

F2F packets:

--------------

Violation Packets Violation Packets

-------------------- --------------- -------------------- ---------------

Pkt has IP options 12 ICMP miss conn 1625812

TCP-SYN miss conn 4770065 TCP-other miss conn 24372326

UDP miss conn 3562308642 Other miss conn 242

VPN returned F2F 1106 Uni-directional viol 0

Possible spoof viol 0 TCP state viol 109

SCTP state affecting 0 Out if not def/accl 0

Bridge src=dst 0 Routing decision err 0

Sanity checks failed 0 Fwd to non-pivot 0

Broadcast/multicast 0 Cluster message 25468811

Cluster forward 9483581 Chain forwarding 0

F2V conn match pkts 4707 General reason 0

Route changes 0 VPN multicast traffic 0

GTP non-accelerated 0 Unresolved nexthop 29

[Expert@:0]# fwaccel stats

Name Value Name Value

---------------------------- ------------------- ---------------------------- -------------------

LightSpeed Accelerated Path

--------------------------------------------------------------------------------------------------------

hw accel inbound bytes 0 hw accel packets 0

hw accel outbound bytes 0 hw accel conns 0

hw accel total conns 0 hw accel tcp conns 0

hw accel non-tcp conns 0

Accelerated Path

--------------------------------------------------------------------------------------------------------

accel packets 96203817 accel bytes 65663576815

outbound packets 96203313 outbound bytes 65787905840

conns created 3423544 conns deleted 3422909

C total conns 635 C TCP conns 486

C non TCP conns 149 nat conns 2671316

dropped packets 7704 dropped bytes 775666

fragments received 592 fragments transmit 0

fragments dropped 0 fragments expired 592

IP options dropped 0 corrs created 0

corrs deleted 0 C corrections 0

corrected packets 0 corrected bytes 0

Accelerated VPN Path

--------------------------------------------------------------------------------------------------------

C crypt conns 2 enc bytes 780268880

dec bytes 50958400 ESP enc pkts 1050432

ESP enc err 136 ESP dec pkts 554343

ESP dec err 0 ESP other err 1

espudp enc pkts 0 espudp enc err 0

espudp dec pkts 0 espudp dec err 0

espudp other err 0

Medium Streaming Path

--------------------------------------------------------------------------------------------------------

CPASXL packets 188774 PSLXL packets 87384461

CPASXL async packets 188774 PSLXL async packets 78909801

CPASXL bytes 179631938 PSLXL bytes 61559666392

C CPASXL conns 0 C PSLXL conns 613

CPASXL conns created 450 PSLXL conns created 3416719

PXL FF conns 0 PXL FF packets 8473823

PXL FF bytes 6982538553 PXL FF acks 3525076

PXL no conn drops 0

Pipeline Streaming Path

--------------------------------------------------------------------------------------------------------

PSL Pipeline packets 0 PSL Pipeline bytes 0

CPAS Pipeline packets 0 CPAS Pipeline bytes 0

QoS Paths

--------------------------------------------------------------------------------------------------------

QoS General Information:

------------------------

Total QoS Conns 0 QoS Classify Conns 0

QoS Classify flow 0 Reclassify QoS policy 0

FireWall QoS Path:

------------------

Enqueued IN packets 0 Enqueued OUT packets 0

Dequeued IN packets 0 Dequeued OUT packets 0

Enqueued IN bytes 0 Enqueued OUT bytes 0

Dequeued IN bytes 0 Dequeued OUT bytes 0

Accelerated QoS Path:

---------------------

Enqueued IN packets 0 Enqueued OUT packets 0

Dequeued IN packets 0 Dequeued OUT packets 0

Enqueued IN bytes 0 Enqueued OUT bytes 0

Dequeued IN bytes 0 Dequeued OUT bytes 0

Firewall Path

--------------------------------------------------------------------------------------------------------

F2F packets 7182789713 F2F bytes 1378432955760

TCP violations 109 F2V conn match pkts 4707

F2V packets 3709013 F2V bytes 239565290

GTP

--------------------------------------------------------------------------------------------------------

gtp tunnels created 0 gtp tunnels 0

gtp accel pkts 0 gtp f2f pkts 0

gtp spoofed pkts 0 gtp in gtp pkts 0

gtp signaling pkts 0 gtp tcpopt pkts 0

gtp apn err pkts 0

General

--------------------------------------------------------------------------------------------------------

memory used 40405632 C tcp handshake conns 243

C tcp established conns 218 C tcp closed conns 25

C tcp pxl handshake conns 243 C tcp pxl established conns 203

C tcp pxl closed conns 25 DNS DoR stats 21

(*) Statistics marked with C refer to current value, others refer to total value
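One way to see which reasons dominate the `fwaccel stats -p` output above is to rank the counters by packet count. The following is a rough sketch, assuming the two-column "name count name count" layout shown above; the helper name `parse_violations` is hypothetical, and the regular expression is my own guess at a robust way to split the columns.

```python
import re

# Counter lines copied from the `fwaccel stats -p` output above.
F2F_OUTPUT = """\
Pkt has IP options 12 ICMP miss conn 1625812
TCP-SYN miss conn 4770065 TCP-other miss conn 24372326
UDP miss conn 3562308642 Other miss conn 242
VPN returned F2F 1106 Uni-directional viol 0
Possible spoof viol 0 TCP state viol 109
SCTP state affecting 0 Out if not def/accl 0
Bridge src=dst 0 Routing decision err 0
Sanity checks failed 0 Fwd to non-pivot 0
Broadcast/multicast 0 Cluster message 25468811
Cluster forward 9483581 Chain forwarding 0
F2V conn match pkts 4707 General reason 0
Route changes 0 VPN multicast traffic 0
GTP non-accelerated 0 Unresolved nexthop 29
"""

# A counter name (lazy match) followed by whitespace and a standalone integer.
PAIR = re.compile(r"(\S.*?)\s+(\d+)(?=\s|$)")


def parse_violations(text: str) -> dict:
    """Map each violation name to its packet count."""
    counts = {}
    for line in text.splitlines():
        for name, value in PAIR.findall(line):
            counts[name] = int(value)
    return counts


counts = parse_violations(F2F_OUTPUT)
total = sum(counts.values())
ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)

# Print the five largest contributors with their share of the listed packets.
for name, value in ranked[:5]:
    print(f"{name:<22} {value:>12} {value / total:6.1%}")
```

Ranking the counters this way makes it obvious at a glance which violation type accounts for most of the listed F2F packets.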

As a temporary workaround, we have disabled all threat-prevention blades. These gateways aren't crazy powerful, so I guess it makes sense for them to start showing performance issues when barely any traffic is getting accelerated. And I suppose the problems related to PrioQ are most likely a result of other things, not a trigger for the latency issues.

The question is why so much traffic is hitting F2F. I have examined the firewall policy, which consists of 116 rules. The first rule containing applications is rule 107, an in-line layer for outbound traffic for a specific subnet. All rules having applications are within in-line layers towards the bottom of the policy package. I have a really hard time understanding why so little of the traffic is being accelerated.

Does anyone else have any experience with this? Any pointers to what I should look for to figure out and solve this behaviour?

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


29 Replies


Do any of the following apply to your configuration / policy as these all impact SecureXL?

PPPoE interfaces aren't supported by SecureXL

Rules with RPC / DCOM / DCE-RPC services.

Rules with Client Authentication or Session Authentication.

When IPS protection "SYN Attack" ("SYNDefender") is activated in SmartDefense / IPS.

When IPS protection "Small PMTU" is activated in IPS.

When IPS protection "Network Quota" is activated in IPS (refer to sk31630).

When IPS protection "Malicious IPs" (DShield.org Storm Center) is activated in IPS (because it uses Dynamic Objects).

Refer also: sk32578

CCSM R77/R80/ELITE


None of this should be relevant. The four IPS-related things might apply when running Threat Prevention, as we run Autonomous Threat Prevention using the "Perimeter" profile. I'm not sure how we would verify that, as we have so little control when using Autonomous Threat Prevention. But these statistics were taken with only the following blades enabled:

[Expert@:0]# enabled_blades

fw vpn urlf appi

There is no PPPoE. Rules disabling acceleration would be showing from the "fwaccel stat" command. Currently, we are using none of those.

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


@Chris_Atkinson made a good point in his last response. In the old days of CP (R77 and before), TAC would always suggest that customers use a service with protocol "NONE" rather than the default one, so that no IPS inspection takes place. Is it ideal? I would say no, BUT if it works, at least it's a good workaround. You do need to confirm 100% which service might be the "culprit", though.

To clarify my point, this is ONLY advisable if you know what service is causing the problem, no need to fiddle with it otherwise.

Do you have TAC case open for this or not yet?

Best,

Andy

I have a ticket with TAC going. But it's moving slowly and the latest recommendation was to add traffic to fast acceleration, which seems like a strange thing to bring up in my opinion. Fast accel is a great tool to have specific traffic bypass inspection. But this is a scenario where SecureXL doesn't seem to really engage at all. Looking into fast acceleration seems more like a desperate attempt at tossing enough traffic into the accelerated path in order to have the issue go away. It doesn't really make much sense in terms of figuring out why barely anything is being accelerated in the first place.

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


Hey @RamGuy239

I think it would help us big time if you could generate below:

and

Btw, hcp is so easy now in R81.20, as you can access it once done via https://x.x.x.x:portnumber/hcp link

Cheers mate.

Best,

Andy

HCP is great; HCP was the test that pointed me towards the F2F issue to begin with.

I had never heard about S7PAC, looks like a nice tool:

[Expert@:0]# ./s7pac

+-----------------------------------------------------------------------------+

| Super Seven Performance Assessment Commands v0.5 (Thanks to Timothy Hall) |

+-----------------------------------------------------------------------------+

| Inspecting your environment: OK |

| This is a firewall....(continuing) |

| |

| Referred pagenumbers are to be found in the following book: |

| Max Power: Check Point Firewall Performance Optimization - Second Edition |

| |

| Available at http://www.maxpowerfirewalls.com/ |

| |

+-----------------------------------------------------------------------------+

| Command #1: fwaccel stat |

| |

| Check for : Accelerator Status must be enabled (R77.xx/R80.10 versions) |

| Status must be enabled (R80.20 and higher) |

| Accept Templates must be enabled |

| Message "disabled" from (low rule number) = bad |

| |

| Chapter 9: SecureXL throughput acceleration |

| Page 278 |

+-----------------------------------------------------------------------------+

| Output: |

+---------------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+---------------------------------------------------------------------------------+

|0 |KPPAK |enabled |eth5,Mgmt,eth1,eth2,eth3,|Acceleration,Cryptography |

| | | |eth4 | |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,3DES,DES,AES-128,AES-256,|

| | | | |ESP,LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256, |

| | | | |SHA384,SHA512 |

+---------------------------------------------------------------------------------+

Accept Templates : enabled

Drop Templates : enabled

NAT Templates : enabled

LightSpeed Accel : disabled

+-----------------------------------------------------------------------------+

| Command #2: fwaccel stats -s |

| |

| Check for : Accelerated conns/Totals conns: >25% good, >50% great |

| Accelerated pkts/Total pkts : >50% great |

| PXL pkts/Total pkts : >50% OK |

| F2Fed pkts/Total pkts : <30% good, <10% great |

| |

| Chapter 9: SecureXL throughput acceleration |

| Page 287, Packet/Throughput Acceleration: The Three Kernel Paths |

+-----------------------------------------------------------------------------+

| Output: |

Accelerated conns/Total conns : 21/643 (3%)

LightSpeed conns/Total conns : 0/643 (0%)

Accelerated pkts/Total pkts : 114950023/8029316457 (1%)

LightSpeed pkts/Total pkts : 0/8029316457 (0%)

F2Fed pkts/Total pkts : 7914366434/8029316457 (98%)

F2V pkts/Total pkts : 4081912/8029316457 (0%)

CPASXL pkts/Total pkts : 188774/8029316457 (0%)

PSLXL pkts/Total pkts : 95956257/8029316457 (1%)

CPAS pipeline pkts/Total pkts : 0/8029316457 (0%)

PSL pipeline pkts/Total pkts : 0/8029316457 (0%)

QOS inbound pkts/Total pkts : 0/8029316457 (0%)

QOS outbound pkts/Total pkts : 0/8029316457 (0%)

Corrected pkts/Total pkts : 0/8029316457 (0%)

+-----------------------------------------------------------------------------+

| Command #3: grep -c ^processor /proc/cpuinfo && /sbin/cpuinfo |

| |

| Check for : If number of cores is roughly double what you are excpecting, |

| hyperthreading may be enabled |

| |

| Chapter 7: CoreXL Tuning |

| Page 239 |

+-----------------------------------------------------------------------------+

| Output: |

4

HyperThreading=disabled

+-----------------------------------------------------------------------------+

| Command #4: fw ctl affinity -l -r |

| |

| Check for : SND/IRQ/Dispatcher Cores, # of CPU's allocated to interface(s) |

| Firewall Workers/INSPECT Cores, # of CPU's allocated to fw_x |

| R77.30: Support processes executed on ALL CPU's |

| R80.xx: Support processes only executed on Firewall Worker Cores|

| |

| Chapter 7: CoreXL Tuning |

| Page 221 |

+-----------------------------------------------------------------------------+

| Output: |

CPU 0:

CPU 1: fw_2 (active)

cprid lpd mpdaemon fwd core_uploader wsdnsd fwucd rad in.acapd in.asessiond topod vpnd scrub_cp_file_convertd watermark_cp_file_convertd cprid cpd

CPU 2: fw_1 (active)

cprid lpd mpdaemon fwd core_uploader wsdnsd fwucd rad in.acapd in.asessiond topod vpnd scrub_cp_file_convertd watermark_cp_file_convertd cprid cpd

CPU 3: fw_0 (active)

cprid lpd mpdaemon fwd core_uploader wsdnsd fwucd rad in.acapd in.asessiond topod vpnd scrub_cp_file_convertd watermark_cp_file_convertd cprid cpd

All:

Interface eth5: has multi queue enabled

Interface Mgmt: has multi queue enabled

Interface eth1: has multi queue enabled

Interface eth2: has multi queue enabled

Interface eth3: has multi queue enabled

Interface eth4: has multi queue enabled

+-----------------------------------------------------------------------------+

| Command #5: netstat -ni |

| |

| Check for : RX/TX errors |

| RX-DRP % should be <0.1% calculated by (RX-DRP/RX-OK)*100 |

| TX-ERR might indicate Fast Ethernet/100Mbps Duplex Mismatch |

| |

| Chapter 2: Layers 1&2 Performance Optimization |

| Page 28-35 |

| |

| Chapter 7: CoreXL Tuning |

| Page 204 |

| Page 206 (Network Buffering Misses) |

+-----------------------------------------------------------------------------+

| Output: |

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

Mgmt 1500 0 0 0 0 0 0 0 0 0 BMU

bond0 1500 0 111712603 0 0 0 109914171 0 0 0 BMmRU

bond0.100 1500 0 21204368 0 0 0 18533468 0 0 0 BMRU

bond0.200 1500 0 89 0 0 0 5219 0 0 0 BMRU

bond0.208 1500 0 2341439 0 0 0 3421902 0 0 0 BMRU

bond0.224 1500 0 1855780 0 0 0 1972088 0 0 0 BMRU

bond0.302 1500 0 56764963 0 0 0 78537570 0 0 0 BMRU

bond0.304 1500 0 269 0 0 0 11378 0 0 0 BMRU

bond0.305 1500 0 599 0 0 0 43714 0 0 0 BMRU

bond0.306 1500 0 1038214 0 0 0 1283056 0 0 0 BMRU

bond0.666 1500 0 28071813 0 0 0 6091292 0 0 0 BMRU

bond1 1500 0 87779393 0 0 1756 94961630 0 0 0 BMmRU

eth1 1500 0 3939682497 0 0 0 3932095607 0 0 0 BMRU

eth2 1500 0 95412972 0 0 0 56903145 0 0 0 BMsRU

eth3 1500 0 16266273 0 0 0 53004939 0 0 0 BMsRU

eth4 1500 0 51309094 0 0 0 55634926 0 0 0 BMsRU

eth5 1500 0 36414301 0 0 1756 39274963 0 0 0 BMsRU

lo 65536 0 2094776 0 0 0 2094776 0 0 0 LNRU

interface eth1: There were no RX drops in the past 0.5 seconds

interface eth1 rx_missed_errors : 0

interface eth1 rx_fifo_errors : 0

interface eth1 rx_no_buffer_count: 0

interface eth2: There were no RX drops in the past 0.5 seconds

interface eth2 rx_missed_errors : 0

interface eth2 rx_fifo_errors : 0

interface eth2 rx_no_buffer_count: 0

interface eth3: There were no RX drops in the past 0.5 seconds

interface eth3 rx_missed_errors : 0

interface eth3 rx_fifo_errors : 0

interface eth3 rx_no_buffer_count: 0

interface eth4: There were no RX drops in the past 0.5 seconds

interface eth4 rx_missed_errors : 0

interface eth4 rx_fifo_errors : 0

interface eth4 rx_no_buffer_count: 0

interface eth5: There were no RX drops in the past 0.5 seconds

interface eth5 rx_missed_errors : 0

interface eth5 rx_fifo_errors : 1756

interface eth5 rx_no_buffer_count: 0

+-----------------------------------------------------------------------------+

| Command #6: fw ctl multik stat |

| |

| Check for : Large # of conns on Worker 0 - IPSec VPN/VoIP? |

| Large imbalance of connections on a single or multiple Workers |

| |

| Chapter 7: CoreXL Tuning |

| Page 241 |

| |

| Chapter 8: CoreXL VPN Optimization |

| Page 256 |

+-----------------------------------------------------------------------------+

| Output: |

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 3 | 352 | 3492

1 | Yes | 2 | 367 | 1346

2 | Yes | 1 | 389 | 1288

+-----------------------------------------------------------------------------+

| Command #7: cpstat os -f multi_cpu -o 1 -c 5 |

| |

| Check for : High SND/IRQ Core Utilization |

| High Firewall Worker Core Utilization |

| |

| Chapter 6: CoreXL & Multi-Queue |

| Page 173 |

+-----------------------------------------------------------------------------+

| Output: |

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 22| 78| 22| ?| 36976|

| 2| 40| 45| 15| 85| ?| 36977|

| 3| 40| 44| 16| 84| ?| 36977|

| 4| 35| 49| 15| 85| ?| 36977|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 22| 78| 22| ?| 36976|

| 2| 40| 45| 15| 85| ?| 36977|

| 3| 40| 44| 16| 84| ?| 36977|

| 4| 35| 49| 15| 85| ?| 36977|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 21| 79| 21| ?| 68428|

| 2| 37| 38| 26| 74| ?| 34211|

| 3| 37| 35| 28| 72| ?| 68420|

| 4| 31| 39| 30| 70| ?| 68424|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 21| 79| 21| ?| 68428|

| 2| 37| 38| 26| 74| ?| 34211|

| 3| 37| 35| 28| 72| ?| 68420|

| 4| 31| 39| 30| 70| ?| 68424|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 23| 77| 23| ?| 72900|

| 2| 39| 41| 20| 80| ?| 36454|

| 3| 39| 38| 22| 78| ?| 72921|

| 4| 37| 42| 22| 78| ?| 36459|

---------------------------------------------------------------------------------

+-----------------------------------------------------------------------------+

| Thanks for using s7pac |

+-----------------------------------------------------------------------------+

HCP has already been utilized, as I mentioned in my original post. It was HCP that pointed me to the terrible SecureXL utilization.
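The "Check for" thresholds that s7pac prints for Command #2 can be applied mechanically to the `fwaccel stats -s` lines. The sketch below assumes the "name : numerator/denominator (pct%)" layout seen in the output above; the function names and the "high" label for anything at or above 30% are my own additions, since s7pac itself only names "good" and "great".

```python
import re

# A few lines copied from the s7pac "Command #2" output above.
SUMMARY = """\
Accelerated conns/Total conns : 21/643 (3%)
Accelerated pkts/Total pkts : 114950023/8029316457 (1%)
F2Fed pkts/Total pkts : 7914366434/8029316457 (98%)
PSLXL pkts/Total pkts : 95956257/8029316457 (1%)
"""

LINE = re.compile(r"^(?P<name>.+?)\s*:\s*(?P<num>\d+)/(?P<den>\d+)", re.MULTILINE)


def ratios(text: str) -> dict:
    """Recompute each ratio as an unrounded percentage."""
    result = {}
    for match in LINE.finditer(text):
        den = int(match["den"])
        result[match["name"]] = int(match["num"]) / den * 100 if den else 0.0
    return result


def f2f_verdict(pct: float) -> str:
    """Apply the s7pac guidance: <10% great, <30% good ("high" is my label)."""
    if pct < 10:
        return "great"
    if pct < 30:
        return "good"
    return "high"


summary_ratios = ratios(SUMMARY)
f2f_pct = summary_ratios["F2Fed pkts/Total pkts"]
verdict = f2f_verdict(f2f_pct)
print(f"F2F share: {f2f_pct:.2f}% -> {verdict}")
```

Recomputing from the raw counters avoids the whole-number rounding in the command's own percentage column.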

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


Will review it in a bit.

Best,

Andy

I agree, you've got 98% F2F packets, which may explain some of your issues, for sure. Can you run cpview, tab between the fields, and look for heavy connections/services? Also, can you run -> fw ctl multik print_heavy_conn

Best,

Andy

Any SQLNET2 traffic per sk179919?

CCSM R77/R80/ELITE


Hmm, sk179919 has me somewhat confused. When I try the command "fw tab t connections -z" I'm just getting this:

[Expert@:0]# fw tab t connections -z

-z option must be used with connections table

I don't expect this to be relevant. Shouldn't be a lot of SQL traffic in this environment at all.

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


I assume it’s because the command should be fw tab -t connections -z


Funny how the SK shows the wrong command. When running "fw tab -t connections -z" I get a rather extensive output. Nothing showing anything SQLNET2 though.

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


It is unlikely your rulebase configuration is causing the high F2F; the output of fwaccel stat says accept templates are fully enabled, and those have nothing to do with forcing traffic F2F anyway. All disabling priority queueing did was reduce the extra CPU load caused by re-prioritization of traffic when a worker core hits 100% (which is happening often), but the underlying cause of the high CPU load for the workers in the first place is excessive F2F.

This may seem a rather silly question, but are you sure these reports and commands were run on the active cluster member? It is expected behavior to see near 100% F2F on the standby member in ClusterXL HA.

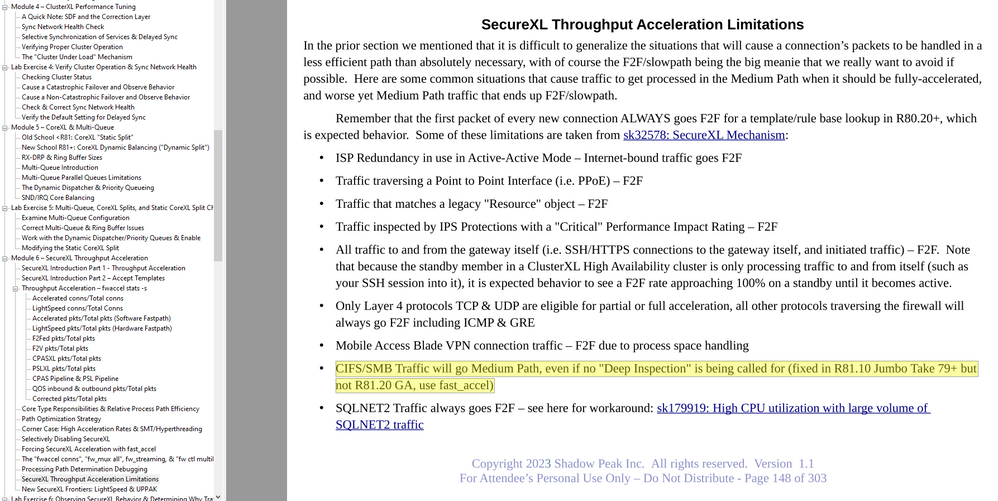

At this point the only way to conclusively determine why so much traffic is going F2F is to run a kernel debug, which will show you the reason; I'm a bit surprised TAC hasn't suggested this yet. This topic is covered in my Gateway Performance Optimization class; here are the relevant pages from the class for all current situations I know of that can cause heavy F2F (feedback on any missing ones from anyone reading is always welcome!), as well as the debug procedure itself. Once you have the needed debug output in f2f.log, run the command:

grep "accelerated, reason" /var/log/f2f.log (note there is a single space between the "," and "reason")

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


Thank you for the detailed response. I will run the debug and see if it might give us any pointers. Strange for TAC to not mention this, I specifically asked for any details that I could run to get a better understanding of the F2F behaviour.

The output is indeed from the current active member, not the standby member.

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


Hmm, when checking in on this more closely it seems like the HA-cluster is "spamming" our local DNS-server with UDP-53. UDP-53 is showing as our top protocol via cpview by a large margin, and traffic (mostly from the standby member, but also the active member at times) towards our DNS-server is almost always showing under top connections.

When I do the debug and filter for UDP-53 it's showing a ton of:

@;44710299;27Mar2023 9:17:51.017858;[vs_0];[tid_1];[fw4_1];fw_update_first_seen_packet_statistics: fwconn_get_app_opaque() returned invalid conn stats opaque <dir 0, 172.30.2.3:43276 -> 172.30.2.98:53 IPP 17>.;

@;44710299;27Mar2023 9:17:51.018952;[vs_0];[tid_1];[fw4_1];fwconn_stats_set_non_accelerated_reason: NULL conn_stats. couldn't update conn <dir 0, 172.30.2.3:43276 -> 172.30.2.98:53 IPP 17>;

@;44710313;27Mar2023 9:17:51.156824;[vs_0];[tid_1];[fw4_1];fw_update_first_seen_packet_statistics: fwconn_get_app_opaque() returned invalid conn stats opaque <dir 1, 172.30.2.2:63252 -> 172.30.2.98:53 IPP 17>.;

@;44710314;27Mar2023 9:17:51.173930;[vs_0];[tid_1];[fw4_1];fw_update_first_seen_packet_statistics: fwconn_get_app_opaque() returned invalid conn stats opaque <dir 1, 172.30.2.2:63252 -> 172.30.2.98:53 IPP 17>.;

These are the standby (.3) and active (.2) members using UDP-53 towards our DNS-server.
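To get a feel for who is talking to whom in a large debug file, the connection tuples in lines like the ones above can be tallied. This is a minimal sketch that assumes every relevant line carries a `<dir N, src:sport -> dst:dport IPP proto>` tuple as in the excerpt; the `tally_flows` helper is hypothetical, and the sample is limited to the four lines quoted above.

```python
import re
from collections import Counter

# The four debug lines quoted above, verbatim.
DEBUG_LINES = """\
@;44710299;27Mar2023 9:17:51.017858;[vs_0];[tid_1];[fw4_1];fw_update_first_seen_packet_statistics: fwconn_get_app_opaque() returned invalid conn stats opaque <dir 0, 172.30.2.3:43276 -> 172.30.2.98:53 IPP 17>.;
@;44710299;27Mar2023 9:17:51.018952;[vs_0];[tid_1];[fw4_1];fwconn_stats_set_non_accelerated_reason: NULL conn_stats. couldn't update conn <dir 0, 172.30.2.3:43276 -> 172.30.2.98:53 IPP 17>;
@;44710313;27Mar2023 9:17:51.156824;[vs_0];[tid_1];[fw4_1];fw_update_first_seen_packet_statistics: fwconn_get_app_opaque() returned invalid conn stats opaque <dir 1, 172.30.2.2:63252 -> 172.30.2.98:53 IPP 17>.;
@;44710314;27Mar2023 9:17:51.173930;[vs_0];[tid_1];[fw4_1];fw_update_first_seen_packet_statistics: fwconn_get_app_opaque() returned invalid conn stats opaque <dir 1, 172.30.2.2:63252 -> 172.30.2.98:53 IPP 17>.;
"""

# Connection tuple: <dir N, src:sport -> dst:dport IPP proto>
CONN = re.compile(r"<dir (\d), ([\d.]+):(\d+) -> ([\d.]+):(\d+) IPP (\d+)>")


def tally_flows(text: str) -> Counter:
    """Count debug lines per (source IP, destination IP, destination port, IP protocol)."""
    flows = Counter()
    for line in text.splitlines():
        match = CONN.search(line)
        if match:
            _direction, src, _sport, dst, dport, proto = match.groups()
            flows[(src, dst, int(dport), int(proto))] += 1
    return flows


flows = tally_flows(DEBUG_LINES)
for (src, dst, dport, proto), hits in flows.most_common():
    print(f"{src} -> {dst}:{dport} (IP proto {proto}): {hits} lines")
```

Source ports are deliberately ignored so that repeated lookups from the same member collapse into one row.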

Certifications: CCSA, CCSE, CCSM, CCSM ELITE, CCTA, CCTE, CCVS, CCME


The "reasons" that can be provided by the F2F debug are not really documented, but if you post it here we should be able to figure it out. Any traffic emanating from the firewall itself (such as DNS lookups) always goes F2F.

Run a tcpdump with the -vv option matching the DNS traffic, that will show you precisely what is being looked up and may give a hint as to what is going on. Any chance you have domain objects in your rulebase? sk133313: Many DNS traffic logs after adding access rules with Domain Objects. These can cause heavy DNS lookups emanating from the firewall and other issues as described here in my course:

Beware: Use of Domain Objects

Use of domain objects "i.e. shadowpeak.com" in policies prior to version R80.10 was strongly discouraged, as doing so could cause huge amounts of latency for all new connections as the gateway tried to perform reverse DNS lookups to resolve the domain object name to an IP address. Thankfully domain objects received an overhaul in R80.10 that avoided this DNS situation and restored the ability to use fully-qualified domain objects in the policy without performance impact in most cases: sk120633: Domain Objects in R8x. However the use of non-FQDN domain objects can still cause issues, and should be avoided whenever possible as described here: sk162577: Traffic latency through Security Gateway when Access Control Policy contains non-FQDN Doma...

Some relatively recent enhancements to DNS inspection have also been added. I'm not sure whether they are related, but you may want to check these out: sk161612: Domain Object Enhancement - DNS Passive Learning and Advanced DNS Security Software Blade Location?

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


We don't have any non-FQDN domain objects. I think the DNS traffic is expected, as we are using updatable objects for Geo etc. I had a remote session with @Ilya_Yusupov, and it doesn't seem like F2F is to blame for the latency. We don't have a lot of traffic on this HA cluster, so our volume of UDP-53 and UDP-161 appears to skew the statistics. Having above 90% F2F is expected, as DNS, SNMP, and ICMP account for most of our traffic.


Thank you @RamGuy239 for your time today.

As we saw in the remote session, the issue is not related to F2F. Instead, on the date the issue occurred, we saw PM working hard.

We will continue the investigation through the support channel.

Thanks,

Ilya


@RamGuy239 I can assure you that @Ilya_Yusupov will fix your problem 100%. He helped me with a really strange ISP redundancy issue for a customer and was there all along the way until we found a solution.

Amazing guy! 👍👍

Best,

Andy


Hi @RamGuy239,

A few questions to understand the case better:

1. When you were running R81.20 GA, did the traffic also go F2F?

2. Any chance your GW is configured as a proxy?

3. Any chance you are using ISP Redundancy in Load Sharing mode?

Thanks,

Ilya


Indeed, a good suggestion regarding the proxy. Refer to:

sk92482: Performance impact from enabling HTTP/HTTPS Proxy functionality

(Irrespective of its relevance here, I have asked for the SecureXL mechanism SK to be updated to reference the above as a factor impacting performance.)

CCSM R77/R80/ELITE


There is no proxy functionality enabled.


1. Difficult to say. I don't have any statistics as I did a clean install from USB.

2. No proxy enabled.

3. No ISP Redundancy or Load Sharing.


@Ilya_Yusupov

I have SR-6-0003568821 going with Check Point TAC.


Hi RamGuy,

I have high latency on DNS queries on a CPAP-SG3600 HA cluster running Gaia R81.10, but as you can see, my F2F seems OK.

Accelerated conns/Total conns : 2/1561 (0%)

LightSpeed conns/Total conns : 0/1561 (0%)

Accelerated pkts/Total pkts : 5386581254/6027749482 (89%)

LightSpeed pkts/Total pkts : 0/6027749482 (0%)

F2Fed pkts/Total pkts : 641168228/6027749482 (10%)

F2V pkts/Total pkts : 32719436/6027749482 (0%)

CPASXL pkts/Total pkts : 744281475/6027749482 (12%)

PSLXL pkts/Total pkts : 4451320711/6027749482 (73%)

CPAS pipeline pkts/Total pkts : 0/6027749482 (0%)

PSL pipeline pkts/Total pkts : 0/6027749482 (0%)

CPAS inline pkts/Total pkts : 0/6027749482 (0%)

PSL inline pkts/Total pkts : 0/6027749482 (0%)

QOS inbound pkts/Total pkts : 0/6027749482 (0%)

QOS outbound pkts/Total pkts : 0/6027749482 (0%)

Corrected pkts/Total pkts : 0/6027749482 (0%)

Were you able to solve it?

Thanks
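The percentages in a summary like the one above are shown as whole numbers, so recomputing them from the raw counters can make comparisons between gateways a little more precise. The following is a minimal sketch that assumes the `label : count/total (N%)` layout shown above, which resembles `fwaccel stats -s` output. The names `STAT_RE` and `parse_stats` are made up for illustration.

```python
import re

# One statistic per line, e.g. "F2Fed pkts/Total pkts : 641168228/6027749482 (10%)".
# The layout is assumed from the output pasted above.
STAT_RE = re.compile(
    r"^(?P<label>.+?)\s*:\s*(?P<num>\d+)/(?P<den>\d+)\s*\((?P<pct>\d+)%\)\s*$"
)


def parse_stats(text):
    """Return {label: (count, total, percentage)} for every line that matches."""
    stats = {}
    for line in text.splitlines():
        match = STAT_RE.match(line.strip())
        if not match:
            continue  # skip blank lines and anything in an unexpected format
        num, den = int(match["num"]), int(match["den"])
        pct = 100.0 * num / den if den else 0.0
        stats[match["label"]] = (num, den, pct)
    return stats


sample = """
Accelerated conns/Total conns : 2/1561 (0%)
Accelerated pkts/Total pkts : 5386581254/6027749482 (89%)
F2Fed pkts/Total pkts : 641168228/6027749482 (10%)
PSLXL pkts/Total pkts : 4451320711/6027749482 (73%)
"""

for label, (num, den, pct) in parse_stats(sample).items():
    print(f"{label:32s} {num:>12d} / {den:<12d} {pct:6.2f}%")
```

Note that these are cumulative counters, so a single snapshot says little about the moment latency occurred. Comparing two snapshots taken before and after a slow period would be more telling.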


I noticed high DNS latency at a customer site even though their DNS servers were properly configured and responding quickly. Disabling Anti-Bot solved the problem, which points to the new DNS protections added in R81.20. These can't easily be turned off from SmartConsole until R82: sk178487: ThreatCloud DNS Tunneling Protection & sk175623: ThreatCloud Domain Generation Algorithm (DGA) protection


Unfortunately, we also see the DNS latency on clients that have Threat Prevention, HTTPS Inspection, and AV/URL Filtering disabled, where only firewalling is applied.
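One way to put numbers on the DNS latency is to time the same query from a client behind the gateway and from a host that reaches the DNS server directly. The following is a minimal sketch using only the Python standard library. It sends a single A-record query over UDP and returns the round-trip time. The function names and the 2-second timeout are assumptions chosen for illustration, and the code does not parse or validate the reply.

```python
import socket
import struct
import time


def build_query(name, query_id=0x1234):
    """Build a minimal DNS query for an A record (RFC 1035 wire format)."""
    # Header: ID, flags (recursion desired), 1 question, no other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed with its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE=1 (A), QCLASS=1 (IN).
    return header + qname + struct.pack("!HH", 1, 1)


def time_query(server, name, timeout=2.0):
    """Return the round-trip time in milliseconds for one UDP DNS query.

    Raises socket.timeout if no reply arrives within `timeout` seconds.
    """
    packet = build_query(name)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.sendto(packet, (server, 53))
        sock.recvfrom(512)  # any reply ends the measurement
        return (time.perf_counter() - start) * 1000.0
```

For example, calling `time_query("172.30.2.98", "example.com")` a few dozen times from each vantage point and comparing the distributions would show whether the added delay sits on the gateway path. The address here is simply the DNS server seen in the debug lines earlier in the thread. Substitute your own.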
