Connectivity issues from standby gateway after R80.10 -> R80.30 upgrade

Good day,

I have recently completed an upgrade from R80.10 to R80.30 (Management + 2 gateways in an HA cluster). The upgrade itself was successful, but I have noticed one issue on the standby gateway: we cannot ping, run nslookups, etc. from the standby node. License checks also fail on this node.

What I have attempted thus far:

- Set the "fw ctl set int fwha_forw_packet_to_not_active 1" on both gateways

- Followed the guidance in sk147093 (fw ctl zdebug output matched that in the SK, as per below, IP sanitised)

121670435;[cpu_1];[SIM-207375815];update_tcp_state: invalid state detected (current state: 0x10000, th_flags=0x10, cdir=0) -> dropping packet, conn: [<1.1.1.1,2022,2.2.2.2,88,6>][PPK0];

@;121670435;[cpu_1];[SIM-207375815];sim_pkt_send_drop_notification: (0,0) received drop, reason: general reason, conn:
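To pull the affected connection out of drop lines like the two above, a small parser can help. The following is a minimal sketch in Python, not a Check Point tool: the function name `parse_drop_line` and the regular expressions are assumptions based only on the sample line shown here, and the flag table is the standard TCP header bit assignment.

```python
import re

# Standard TCP header flag bits; not Check Point specific.
TCP_FLAGS = {0x01: "FIN", 0x02: "SYN", 0x04: "RST", 0x08: "PSH",
             0x10: "ACK", 0x20: "URG", 0x40: "ECE", 0x80: "CWR"}

# Assumed layout, inferred from the sample: <src_ip,src_port,dst_ip,dst_port,ip_proto>
CONN_RE = re.compile(r"<([^,<>]+),(\d+),([^,<>]+),(\d+),(\d+)>")
FLAGS_RE = re.compile(r"th_flags=(0x[0-9a-fA-F]+)")


def parse_drop_line(line):
    """Return connection details from one drop line, or None if no tuple is found."""
    conn = CONN_RE.search(line)
    if conn is None:
        return None
    src, sport, dst, dport, proto = conn.groups()
    result = {"src": src, "sport": int(sport), "dst": dst,
              "dport": int(dport), "proto": int(proto), "tcp_flags": []}
    flags = FLAGS_RE.search(line)
    if flags:
        value = int(flags.group(1), 16)
        # Decode each set bit into its flag name, lowest bit first.
        result["tcp_flags"] = [name for bit, name in sorted(TCP_FLAGS.items())
                               if value & bit]
    return result


sample = ("121670435;[cpu_1];[SIM-207375815];update_tcp_state: invalid state "
          "detected (current state: 0x10000, th_flags=0x10, cdir=0) -> dropping "
          "packet, conn: [<1.1.1.1,2022,2.2.2.2,88,6>][PPK0];")
print(parse_drop_line(sample))
```

For the sample line this reports a TCP connection (IP protocol 6) from 1.1.1.1 port 2022 to 2.2.2.2 port 88, dropped on a segment carrying only the ACK flag. The second line has no connection tuple after `conn:`, so the sketch returns `None` for it.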

It is important to note that all connectivity is restored when I do a fw unloadlocal. There has also been no changes to either NAT or firewall policies.

I've found a couple of posts on CheckMates describing similar issues, but unfortunately no resolution apart from the steps above.

I will also log a TAC case, but I am hoping to hear whether anyone has experienced similar issues after an upgrade.

Thanks,

Ruan

1 Solution

Accepted Solutions


Hi Everyone,

We worked with TAC and managed to resolve the issue. In the end we had to follow step 4 in sk43807. All updates etc. are working and all warnings in SmartConsole have been cleared.

Cheers,

Ruan

18 Replies


All the R80.30 gateway clusters we run use VRRP, where I can set this NAT function on the cluster object. I still do not understand why this option is not available for ClusterXL.

I really don't.


Regards, Maarten


Looks like sk147493 - seems no R80.30 Jumbo has this fix yet...

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist


I understood from TAC that there is a hotfix available, but they prefer not to deploy as it might be overwritten by the next Jumbo, causing behaviour regression.


I have opened a case with TAC. They seemed surprised that the kernel parameter did not fix the issue. I will update this thread once we have a resolution.


What I do wonder is why this is regarded as an issue. Usually, I do not run ping or nslookup from the CLI of standby cluster members. Or is there a very good reason to do that?

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist


The failing nslookup means the gateway has no access to the Check Point cloud, so when there is a failover, many things need to fetch their updates at that moment...

That means there is no updated URL/APCL database and no IPS update (when the gateway is set to fetch it by itself), and Dynamic objects will fail during the first minute.

Also, CPUSE will not be able to show you the list of available downloads, so when you want to update the cluster with the latest Jumbo, you need to make the member master first, then wait for it to get the update list, and so on.


Regards, Maarten


I'm still seeing this issue in R80.40. Is the 'solution' listed here by Ruan still considered the accepted approach to remediate?

Thanks


Same issue for me with a new R80.40 cluster on OpenPlatform (Dell R640). Whichever member is in standby state cannot establish outbound connections (UDP, TCP, ICMP, etc.). The odd thing is that only traffic generated by the firewall itself and going outbound has the issue. Traffic that establishes a connection to the firewall (pings, ssh, etc.) works just fine from external IPs on the Internet.

I checked the SYNC interface: the local STANDBY member's generated traffic to the Internet is being forwarded to the ACTIVE member over the SYNC interface, ignoring the default gateway. In the capture, the source is the STANDBY member's external interface IP, the destination is the external Google server I am pinging, and the destination MAC address is that of the ACTIVE member's sync interface. This is with a simple ping to Google. DNS to an external DNS server doesn't work either, as that is also forwarded to the ACTIVE member.

This seems like pretty basic stuff that needs to work. I don't have this problem on clusters running R80.10 or earlier (I have never used R80.10 or R80.20 on gateways so I don't know about them). We really shouldn't need any workarounds for the STANDBY to be able to send local outbound traffic to check for updates. The default route is not being used, so something in the Check Point firewall code is intercepting the local traffic and forwarding it to the ACTIVE member's SYNC interface IP. An 'ip route get <www.google.com IP>' shows that it should be forwarded to the default gateway and not over the SYNC interface.


I fixed the issue with 'fw ctl set int fwha_forw_packet_to_not_active 1'. I tried that once (had the same setting on other sites) and it didn't work so I assumed something else was problematic. Not sure why it didn't work the first time I tried it but it is working now.

It appears that forwarding traffic originating from a cluster member to the active member is expected and normal behavior. The gateways hide behind the cluster IP. The STANDBY sends its own originating traffic through the SYNC network with its own external IP as the source. The ACTIVE member NATs the source IP so that the traffic looks like it came from the external cluster IP. Return traffic goes back to the ACTIVE member and then gets forwarded to the STANDBY.
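The flow described above can be sketched as a toy model. This is illustrative Python only, not Check Point code: the `Packet` type, the function names, and the 192.0.2.x / 198.51.100.x documentation addresses are all assumptions made for the example.

```python
from dataclasses import dataclass, replace

# Hypothetical addresses taken from the documentation ranges (RFC 5737).
STANDBY_EXT_IP = "192.0.2.3"
CLUSTER_VIP = "192.0.2.1"


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


def active_outbound(pkt):
    """Active member hides the standby's source IP behind the cluster IP."""
    if pkt.src == STANDBY_EXT_IP:
        return replace(pkt, src=CLUSTER_VIP)
    return pkt


def active_inbound(reply):
    """Active member reverses the hide NAT so the reply can reach the standby.

    A real gateway would consult its connection table; this toy model
    tracks a single flow only.
    """
    if reply.dst == CLUSTER_VIP:
        return replace(reply, dst=STANDBY_EXT_IP)
    return reply


# 1. Standby originates a packet with its own external IP and forwards it over sync.
request = Packet(src=STANDBY_EXT_IP, dst="198.51.100.10")
# 2. Active NATs the source to the cluster IP before sending it out.
on_wire = active_outbound(request)
# 3. The server replies to the cluster IP; active forwards the reply to the standby.
reply = active_inbound(Packet(src=on_wire.dst, dst=on_wire.src))
print(on_wire)
print(reply)
```

The point of the model is that each member sees only one direction of the flow before NAT is reversed, which is why a drop on the active member breaks the standby's outbound connections.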


It does work, but I guess the question still remains as to why a CLI hack is needed for what is basically a required function. Ah well, perhaps in R80.50 and beyond it will become a simple tick button.


A simple tick button that has always been there for a VRRP cluster....

Regards, Maarten


If you found a tick box in the GUI for 'fw ctl set int fwha_forw_packet_to_not_active 1', please do share.


Interestingly, I just found sk93204, which was last updated in May 2020 and is shown as applying to R80.40. It says that you should not use the 'fwha_forw_packet_to_not_active 1' setting on R80.20+ for a 'Standby drops' problem, and that nothing needs to be done for that problem on the newer versions. It says you should use 'fw ctl set int fwha_silent_standby_mode 1' on R80.20+ to solve a 'Peer drops' problem. I never set that and only just found the article. I am pretty sure that I had the 'Standby drops' problem though, because the standby couldn't connect outbound to Check Point. From my experience, 'fwha_forw_packet_to_not_active 1' still seems to be needed.


This behaviour changed in R80.40. In R80.30 the traffic is still sent between the gateways, but they both use the same interface. In R80.40 the standby uses the sync interface instead. This broke stuff for us (I have a ticket open). The standby gateway needs to access a website through a VPN tunnel, which worked fine pre-R80.40.

Since the upgrade, the standby forwards the packet over the sync interface; the active encrypts it, sends it through the tunnel, gets the reply, decrypts it, then drops it because it says the interface is illegal...

dropped by vpn_encrypt_chain Reason: illegal interface group


The "illegal interface group" issue has been recently discovered and the fix is being integrated into JHF.

For now please ask the TAC to obtain the hotfix for PMTR-60663. As a temporary workaround, set fwha_cluster_hide_active_only to 0.


Hi Jon,

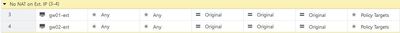

Something else that worked well for me in a couple of other instances (and is perhaps a bit easier to do) is to do NAT rules which forces a "no hide":


The challenges (and solutions) related to Standby's connections are described in sk169154, section "Standby member's connections"

A few points relevant to this thread:

- Standby's connections are asymmetric since the replies are handled by Active. In other words, each member only sees the packets in a single direction.

- In R80.20 and later versions, deep inspection and security are applied more aggressively by default, even on local connections. This causes the active member to drop some of the standby's connections (because it doesn't see all packets).

- The solution is to have the Standby's connections handled by the active member in the outbound direction as well. This is controlled by fwha_cluster_hide_active_only (enabled by default in R80.40).

- Using manual NAT rules to prevent NAT on the members' private IPs is not supported. If such rules are defined, they will cause connectivity issues in the new mode above. If for some reason cluster NAT must be disabled, use one of the supported methods (sk31832, sk34180).

- The parameter fwha_forw_packet_to_not_active now has no bearing in this scenario and should not be set to 1.

In general, connections from standby should work seamlessly in the latest versions. If there are issues:

- If the version is R80.40 or above, check that you don't have manual no-NAT rules enabled.

- For earlier versions, enable the parameter fwha_cluster_hide_active_only (you may need to add an access policy rule if using a pre-R80.40 management, as described in sk169154).
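The two checks above can be written down as a small decision helper. This is a minimal sketch, assuming version strings of the form `R80.40`; the function names `parse_version` and `standby_advice` are hypothetical, and the returned text only paraphrases the checklist in this post rather than any official tool.

```python
def parse_version(version):
    """Turn a string such as 'R80.40' into a comparable tuple such as (80, 40)."""
    parts = version.strip().upper().lstrip("R").split(".")
    return tuple(int(p) for p in parts)


def standby_advice(version, has_manual_no_nat_rules):
    """Return the check suggested in the list above for a given gateway version."""
    if parse_version(version) >= (80, 40):
        if has_manual_no_nat_rules:
            return ("Review the manual no-NAT rules for the members' IPs; "
                    "they are not supported in this mode.")
        return ("Nothing further from the checklist; "
                "fwha_cluster_hide_active_only is enabled by default in R80.40.")
    return ("Enable fwha_cluster_hide_active_only; with a pre-R80.40 management "
            "an access policy rule may also be needed (see sk169154).")


for ver, no_nat in [("R80.30", False), ("R80.40", True), ("R81.10", False)]:
    print(ver, "->", standby_advice(ver, no_nat))
```

Comparing versions as integer tuples avoids string-ordering surprises when the major number changes, for example between R80.40 and R81.10.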

If you still have any problems, please contact me directly at mrogovin@checkpoint.com

Thanks,

Michael
