R80.20 Managing R80.10 Gateway - CPU Increase

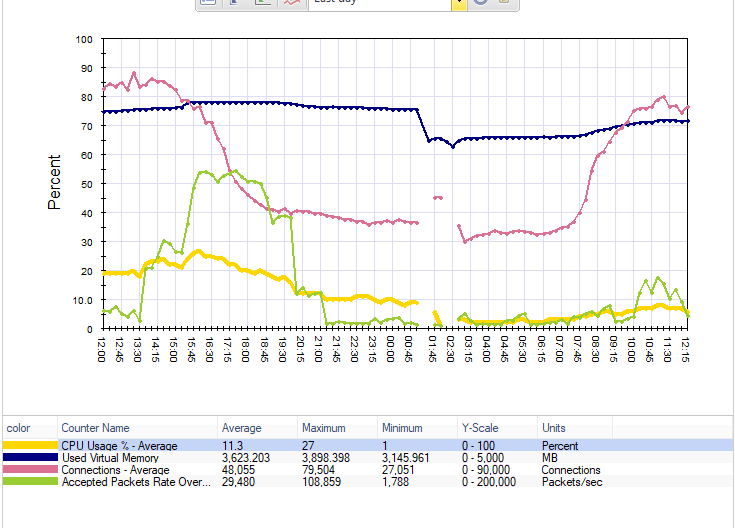

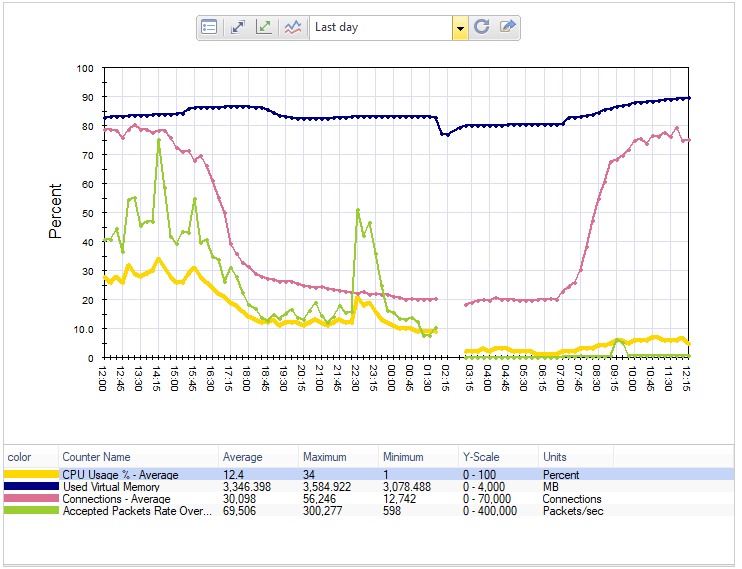

After upgrading our MDS and MLM to R80.20, we are seeing average CPU increases across all of our clusters after pushing policy to the gateways. It is most apparent on one of our firewalls that is licensed for four cores. The four gateways where I am seeing this are open hardware. I also have a pair of appliance-based clusters that do not seem to show any increase in CPU. All gateways are running R80.10.

I've opened a ticket with Check Point, but they haven't heard about anything like this and frankly, didn't seem too interested in getting to the bottom of it.

Has anyone else seen this out there?

42 Replies

Rebooting the two eight-core clusters didn't change anything. I also told CoreXL to set the worker count to the same number that was already active and rebooted again, but that didn't help either.

The plan now is to install the latest JHF on two of my clusters and, if I get approval, turn off CoreXL completely for one reboot, then reactivate it and reboot again to see if that works. I understand that this could cause a short outage after failover. Fortunately, I have this pair of firewalls that I can tinker with a little in the wee hours of the morning.

Good news. The JHF install did not have any effect, and neither did disabling and re-enabling CoreXL.

Remembering that changing the firewall instance count on one of my firewalls wiped out the issue completely, I changed the instance count on one cluster member from 6 to 7 and rebooted. When I failed over and checked the CPU usage and the other symptoms, I saw that CPU usage was down and that CMI Applications and SXL notifications were nearly non-existent. I did the same on the other cluster member, failed over and, sure enough, there was no sign of the symptoms associated with the CPU increase. I then returned the instance count on both gateways to 6, and it is still looking good.

I repeated the process on my other cluster member and, sure enough, there were no high CMI Applications or SXL notification spikes, and CPU usage was down across the board. We are about nine hours past the change and looking super green.
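For anyone who wants to compare worker load before and after an instance-count change with numbers rather than graphs, here is a minimal Python sketch. It assumes the gateway's Linux-based OS exposes the standard `/proc/stat` counters and that two snapshots of that file were saved some time apart. The function names and the sample values are made up for illustration; this is not a Check Point tool and not the method used in this thread.

```python
# Illustrative snapshots in /proc/stat format (values are invented).
SAMPLE_BEFORE = """cpu  100 0 100 800 0 0 0 0
cpu0 50 0 50 400 0 0 0 0
cpu1 50 0 50 400 0 0 0 0
"""
SAMPLE_AFTER = """cpu  210 0 155 1135 0 0 0 0
cpu0 150 0 100 550 0 0 0 0
cpu1 60 0 55 585 0 0 0 0
"""


def parse_proc_stat(text):
    """Return {core_name: (busy_jiffies, total_jiffies)} for each per-core line."""
    cores = {}
    for line in text.splitlines():
        fields = line.split()
        # Keep "cpu0", "cpu1", ... and skip the aggregate "cpu" line and non-CPU lines.
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        # Columns: user nice system idle iowait irq softirq steal [guest guest_nice].
        # Guest time is already counted in user/nice, so only the first 8 are summed.
        values = [int(v) for v in fields[1:9]]
        if len(values) < 4:
            continue
        total = sum(values)
        idle = values[3] + (values[4] if len(values) > 4 else 0)  # idle + iowait
        cores[fields[0]] = (total - idle, total)
    return cores


def busy_percent(before, after):
    """Per-core busy percentage over the interval between two parsed snapshots."""
    result = {}
    for name, (busy_b, total_b) in before.items():
        if name not in after:
            continue
        busy_a, total_a = after[name]
        delta_total = total_a - total_b
        if delta_total <= 0:
            result[name] = 0.0
        else:
            result[name] = round(100.0 * (busy_a - busy_b) / delta_total, 1)
    return result


usage = busy_percent(parse_proc_stat(SAMPLE_BEFORE), parse_proc_stat(SAMPLE_AFTER))
print(usage)
```

In practice the two snapshots would come from reading `/proc/stat` a fixed interval apart, once before the change and once after, so the per-core figures can be compared directly.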

One more firewall to go....

Jason, to clear it up: you applied the JHF, rebooted, and the issue remained?

But the issue disappeared, at least for now, when you reconfigured the instance count?

I need to know because I will be deploying the JHF this weekend (I have a pending reboot for another issue), so I want to check what happens if I follow your steps (or don't).

P.S.: It's amazing how similar your performance graphs are to ours. It has to be the same issue for both of us (but I don't have a cluster... for now).

As far as I can tell, the only thing that has helped in all three instances is changing the firewall instance count. That's it.

The JHF, a reboot, and just cycling CoreXL did not appear to help.

It is my understanding that if you fail over between gateways with different firewall instance counts, you will lose state and things may break. We were really quiet last night, so nobody complained. Our change tonight will definitely be noticed.

Well that is odd that changing the CoreXL split seems to fix the high CPU on the workers. Now wondering:

1) Perhaps the Dynamic Dispatcher (DD) is somehow causing it, or its sibling Priority Queues (PQ) is inappropriately becoming active on all workers and driving up their CPU load until the CoreXL split is changed. On a firewall with the issue it might be interesting to disable the DD and PQ, reboot without making any other changes and see if that has any effect. Obviously doing so may cause an imbalance of CPU load among the workers, but I'm curious to see if their overall CPU load drops.

2) Are you using Multi-Queue at all? If so, have you run cpmq reconfigure after booting into the new core allocation and then rebooted again? Failure to do this can cause some strange issues and may load SoftIRQ processing onto CPUs that should not be handling it.

The mystery continues...

--

Second Edition of my "Max Power" Firewall Book

Now Available at http://www.maxpowerfirewalls.com

Gateway Performance Optimization R81.20 Course

now available at maxpowerfirewalls.com

4 for 4 now. I carried out the process on our primary datacenter firewall. We are seeing a drop from a 19% CPU average this time last Saturday to a 5% CPU average while pushing more traffic.

Unfortunately (or fortunately?) I am all out of firewalls to test with. I'll try to build something in a virtual lab when I get caught up next week, and I'll see about trying your suggestions. I definitely won't be installing 80.20 on production management until this gets fixed. Part of me is a little scared of installing the 80.10 management hotfix for managing 80.20 gateways...

It does seem that if you experience this issue, you are looking at a disruptive change.

Jason

We are not using Multi-Queue on this gateway.

Hi all, last weekend I installed the latest JHF for R80.10, and right now I'm applying the policy package that was causing the problem and monitoring the CPU usage. For the first few minutes the CPU stays at about 50% average usage while the two fw_worker processes hold at 80~90% average (remember, I only have two of four cores licensed), but then it finally stabilizes at around 20~30%, both overall and for the workers.

Network performance also doesn't seem to be negatively impacted: the ping to the LAN interface remains <1ms with no packet loss, and a simple online speed test matched the bandwidth limits I configured in QoS (30 Mbps downstream, 10 Mbps upstream).
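A quick way to make that ping check repeatable is to parse the summary lines and test them against a threshold. The sketch below assumes the summary format printed by the common Linux `ping` utility; the sample text, the function names and the 1 ms threshold are illustrative assumptions, not output captured from this gateway.

```python
import re

# Illustrative summary in the format printed by Linux ping (values are invented).
SAMPLE_OK = """10 packets transmitted, 10 received, 0% packet loss, time 9012ms
rtt min/avg/max/mdev = 0.212/0.304/0.511/0.082 ms
"""

LOSS_RE = re.compile(r"(\d+(?:\.\d+)?)% packet loss")
RTT_RE = re.compile(r"=\s*([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+)\s*ms")


def parse_ping_summary(output):
    """Extract packet loss and average/maximum round-trip time from ping output."""
    loss = LOSS_RE.search(output)
    if loss is None:
        raise ValueError("no packet loss line found in ping output")
    rtt = RTT_RE.search(output)  # absent when every packet was lost
    return {
        "loss_percent": float(loss.group(1)),
        "avg_ms": float(rtt.group(2)) if rtt else None,
        "max_ms": float(rtt.group(3)) if rtt else None,
    }


def looks_healthy(summary, max_avg_ms=1.0):
    """True when there is no loss and the average RTT is below the threshold."""
    return (
        summary["loss_percent"] == 0.0
        and summary["avg_ms"] is not None
        and summary["avg_ms"] < max_avg_ms
    )


summary = parse_ping_summary(SAMPLE_OK)
print(summary, looks_healthy(summary))
```

Running the same check before and after a policy installation gives a simple pass/fail signal for the latency symptom, although it says nothing about CPU load on its own.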

So it appears to be solved by the JHF installation. Maybe it could be tested and documented as a bug in the release I had before (if you need the exact take that was installed when the issue happened, let me know and I'll try to get it).

On the other hand, SecureXL still shows the "no license" error. I've already involved my local TAC to look into it (I will post any news on the thread I created specifically for that issue).

Thanks for the help guys!

Sorry for the lack of updates.

It's been a quiet couple of weeks so far on the firewall side for us. No issues whatsoever since changing the CoreXL instance count, which has been a relief. A Florida vacation also did wonders for my state of mind. =D

Still no word from Check Point as to what caused this issue. I assume that they are looking into it on their end, but I haven't heard anything.

Until then, we will be sitting on our hands when it comes to upgrading to R80.20 at all.

I still plan on lab'ing up some firewalls to see if I can get this to happen again, but will I even know what I am looking for other than the increase in CPU usage?

A VM lab (one four-core VM plus a clone of our production management) didn't yield any conclusive results. Using iperf to generate traffic, we saw neither a noticeable increase in CPU nor any of the ICMP latency we were seeing in our production environment after the policy installation.

We are going to be installing the R80.10 hotfix that will allow us to manage R80.20 gateways because we have an R80.20 gateway that needs to be managed. It was the original reason behind our upgrade and it has been sitting without a management server for about 6 weeks now.

Hi Jason,

Did you end up getting to the bottom of your issue?

Thanks,

Jonne.

Hey Jonne,

No, we never got a definitive cause for this issue, and we are still running 80.10 on our management server with the patch that allows management of R80.20 gateways.

Things have been hectic lately, but I am hoping to get R80.30 installed on a management server and get a couple of open hardware firewalls running the same code to see if the issue can be replicated. With R80.30 coming out, our R80.10 management servers are getting a little long in the tooth.

Hey Jason,

Thanks for the update. I had an R80.10 MDS managing an R80.20 VSX VSLS cluster for a few weeks and noticed that the active R80.20 VSX gateway appeared to use more CPU under load than when it was running R77.30. The MDS is now on R80.20, and I have not noticed any real difference in the CPU usage of any of our gateways (R77.30, R80.10 and R80.20) since they have been managed by the R80.20 MDS. We started seeing strange behaviour in various builds of SmartConsole R80.20 after recently installing the KB4886153 .NET 4.8 update on Windows 10 build 1809. We will be upgrading one of our R77.30 Security Gateway clusters to R80.20 at the end of August, so I'll let you know if I notice any significant performance issues.

Regards,

Jonne.
