Increasing Fifo Buffers on Firewall Interface

Hi Community

I had a problem at a customer site where there were a lot of packet retransmissions on their network, observed in an fw monitor capture. I pulled the interface statistics and found a lot of RX-DRPs (rx_missed_errors). I followed the article to increase the FIFO buffer size in clish, and this seems to have solved the issue: the errors aren't increasing anymore.

I have never had to do this before for any other customer, and am curious as to what might cause the interface buffers to fill up. Could it be just increased traffic on the network, or could there be a misconfigured switch somewhere in the network?

I also noticed that the default value for rx-ringsize is only 256, whereas its maximum is 4096. Is there a reason the default is so low?

Thanks in advance and best regards

John

- Tags:

- interface

12 Replies

What is the ratio of RX-DRP to RX-OK? If it is higher than 0.1%, then tuning is needed.

RX-DRP means that the firewall does not have enough FIFO memory buffer (descriptors) to hold the packets while waiting for a free interrupt to process them. What does this mean? In a very simplified explanation, it means that the memory buffer on the NIC itself is not emptied quickly enough by the CPU before new frames arrive.

To check for network buffer errors on that NIC run this command:

netstat -ni | grep interface_name (look for RX-DRP)
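As a rough illustration of the 0.1% check, here is a minimal Python sketch that reads `netstat -ni` style text and reports RX-DRP as a percentage of RX-OK. The sample output, its counter values, and the function names are made up for the example; columns are looked up by header name because the exact layout can differ between net-tools versions.

```python
# Hypothetical sample of `netstat -ni` output; the counter values are invented.
SAMPLE_NETSTAT = """\
Kernel Interface table
Iface       MTU Met    RX-OK RX-ERR RX-DRP RX-OVR    TX-OK TX-ERR TX-DRP TX-OVR Flg
eth1       1500   0  2000000      0   5000      0  1800000      0      0      0 BMRU
eth3       1500   0  4000000      0   1000      0  3900000      0      0      0 BMRU
"""

DROP_THRESHOLD_PERCENT = 0.1  # threshold quoted in the reply above


def rx_drop_percent(netstat_output, iface):
    """Return RX-DRP as a percentage of RX-OK for one interface."""
    lines = netstat_output.splitlines()
    # Locate the header row so columns are found by name, not by position.
    header_index = next(
        i for i, line in enumerate(lines) if line.split()[:1] == ["Iface"]
    )
    columns = lines[header_index].split()
    for line in lines[header_index + 1:]:
        fields = line.split()
        if fields and fields[0] == iface:
            row = dict(zip(columns, fields))
            rx_ok = int(row["RX-OK"])
            rx_drp = int(row["RX-DRP"])
            if rx_ok == 0:
                return 0.0  # nothing received, so no meaningful ratio
            return 100.0 * rx_drp / rx_ok
    raise KeyError(f"interface {iface!r} not found")


def needs_tuning(netstat_output, iface):
    """True when the drop percentage exceeds the 0.1% rule of thumb."""
    return rx_drop_percent(netstat_output, iface) > DROP_THRESHOLD_PERCENT


if __name__ == "__main__":
    for name in ("eth1", "eth3"):
        print(name, rx_drop_percent(SAMPLE_NETSTAT, name), needs_tuning(SAMPLE_NETSTAT, name))
```

With the invented counters, eth1 drops 5000 of 2000000 packets (0.25%) and would be flagged, while eth3 drops 1000 of 4000000 (0.025%) and would not.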

What kind of NIC hardware do you have on that interface?

Run ethtool -i interface_name to check which driver is used. If the driver is bge or tg3, then it's Broadcom, which is not recommended for production traffic. If the driver is e1000, e1000e, igb, ixgbe or w83627, then it's Intel, which is recommended and can double performance without additional tuning.
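The driver check can be sketched in Python as follows. The two sets of driver names mirror the reply above (with the Intel entry spelled e1000e) and are that poster's grouping, not an official list; the sample `ethtool -i` text, its version strings, and the function names are hypothetical.

```python
# Driver groupings as given in the reply above; not an official vendor list.
BROADCOM_DRIVERS = {"bge", "tg3"}
INTEL_DRIVERS = {"e1000", "e1000e", "igb", "ixgbe", "w83627"}

# Hypothetical `ethtool -i interface_name` output; the values are invented.
SAMPLE_ETHTOOL = """\
driver: igb
version: 5.3.5.20
firmware-version: 3.25, 0x800005cf
bus-info: 0000:02:00.0
"""


def driver_name(ethtool_output):
    """Extract the value of the 'driver:' line, or None if it is missing."""
    for line in ethtool_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "driver":
            return value.strip()
    return None


def nic_vendor(ethtool_output):
    """Map the reported driver to 'Intel', 'Broadcom', or 'unknown'."""
    driver = driver_name(ethtool_output)
    if driver is None:
        return "unknown"
    driver = driver.lower()
    if driver in INTEL_DRIVERS:
        return "Intel"
    if driver in BROADCOM_DRIVERS:
        return "Broadcom"
    return "unknown"


if __name__ == "__main__":
    print(driver_name(SAMPLE_ETHTOOL), nic_vendor(SAMPLE_ETHTOOL))
```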

Increasing ring buffer size is a common recommendation but not desirable. The best way is to allocate more core processing resources.

4096 is the maximum ring buffer size on most NICs; 256 or 512 is the default. What did you increase it to? Why not set it to the maximum? Because a larger buffer also takes more processing to service. That brings us back to the actual problem: not enough CPU resources to empty the RX ring buffer. But in some cases sufficient processing resources are simply not available because the firewall is underpowered, and then increasing the ring buffer size is the solution.

Also run sar -u to look at your CPU utilization, or use the Monitoring blade, if you have it, for a graphical view.

Adjusting the ring buffer is usually a last resort.

There are other performance tuning steps you should take first.

That is really a masterpiece of a great sk. Bravo!

To expand upon what Dameon Welch Abernathy said, increasing the ring buffers is a last resort due to the possible introduction of an insidious performance-draining effect known as Bufferbloat. After increasing firewall ring buffer sizes in the past, I noticed that while it did reduce or eliminate RX-DRPs, it frequently caused a "choppiness" in the network traffic flow under load that I couldn't fully quantify. While doing research for the first edition of my book, I discovered the formal term for this effect: Bufferbloat (see the Wikipedia article). Worth a quick read, and a reminder that adding more of something is not always better when it comes to performance tuning...

--

Second Edition of my "Max Power" Firewall Book

Now Available at http://www.maxpowerfirewalls.com

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Thanks for your helpful comments guys. I will give more detail on the setup.

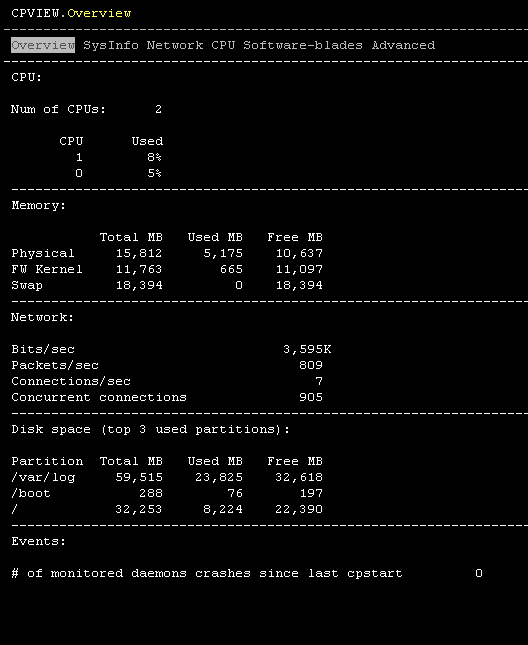

It's a self-managed cluster of Check Point 5400s. 2 CPUs, model name: Intel(R) Pentium(R) CPU G3420 @ 3.20GHz (not a great processor).

16 GB of RAM, installed with Gaia R77.30.

No strain on resources.

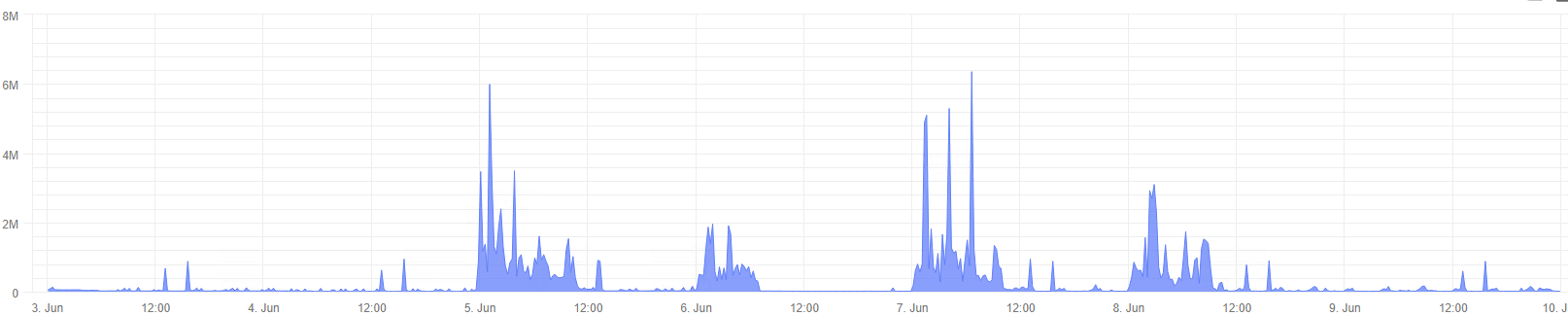

Here's the traffic going through for the last week

Nothing major.

I increased the buffer to 2048 and will monitor any increases.

I will also take a look at the SK for tuning the firewalls.

Thanks again

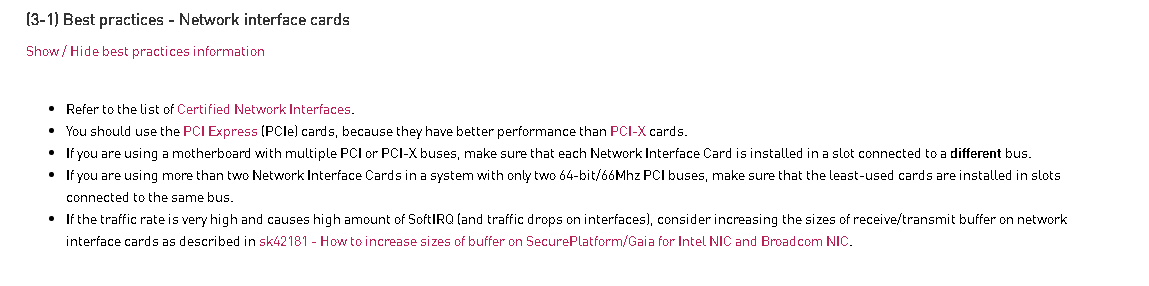

I had a look at the tuning doc suggested by Dameon, under Interface Tuning.

Since it's a Check Point appliance, I'm guessing the first 4 points are already optimized?

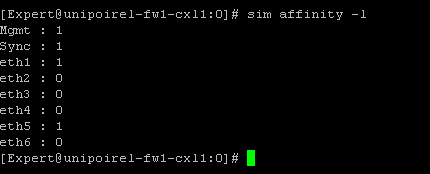

Eth1 and Eth3 are the troublesome interfaces and, looking at sim affinity -l, they're on different cores.

All in all, I don't think there's much else I can check for this issue.

Thanks

john

I'll see if I can get the boss man to purchase your book. Looks like we'd seriously benefit from it though!

Hi Guys (last update for a while)

My boss came in and said that we have Tim's book, so I read the relevant chapter. Excellently written and great example. Very detailed and made a lot of sense.

So what I have done is turn off SecureXL, since it's only a 2-core firewall, and leave the ring buffer size at 2048 (even though this isn't recommended in the book).

I hadn't heard of bufferbloat, but it does make sense that it would cause problems in a packet-switched network.

Thanks again.

John

John Colfer, do you mean you turned off CoreXL on your 5400? On a 2-core firewall that *may* improve things, depending on a variety of factors. There are very few scenarios where turning off SecureXL is recommended, and optimizing SecureXL may really help here.

Just to clarify, Bufferbloat can only occur between interfaces of varying network speed (i.e. typically between your high-speed LAN interfaces and a lower-speed Internet or WAN link) and as such should not occur between network interfaces of the same speed.

I have to partly disagree here. In the past the default RX ring buffer was set to 256; the defaults are now set to 1024.

But if I run into a unit still running with 256, I will increase that first before I do anything else in regard to tuning.

<< We make miracles happen while you wait. The impossible jobs take just a wee bit longer. >>

For traffic traversing interfaces that are both the same link speed, increasing the ring buffers should not cause Bufferbloat. It is when traffic steps down from, say, a 10 Gbps interface to a 1 Gbps interface that increasing the RX ring buffer on the 10 Gbps interface (or the TX buffer on the 1 Gbps interface) can cause Bufferbloat. A better example yet is traffic traversing between two 10 Gbps interfaces where one of them only has 1 Gbps available further upstream (say, to an Internet router). Depending upon the RX buffer size on the upstream device's 10 Gbps interface, where the step-down from 10 Gbps to 1 Gbps occurs, Bufferbloat can happen there too.

Default ring buffer sizes have increased as link speeds have increased. There is a rule of thumb networking vendors use: an interface should have a ring buffer sized sufficiently to hold up to 250 ms of traffic. So increasing ring buffer sizes is not always bad, but once Bufferbloat starts happening it is very difficult to understand why the overall network performance is being constrained. I would strongly recommend reading the Bufferbloat Wikipedia article, as it took some of the best minds in networking (including Vint Cerf and Van Jacobson) a *long* time to figure out what was going on, as detailed here: BufferBloat: What's Wrong with the Internet?
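To put rough numbers on the step-down case, the following Python sketch estimates how long a completely full ring takes to drain at the slower link's rate. It is a simplified model, not a measurement: it assumes every descriptor holds one full-size 1500-byte frame and that the queue drains at exactly the bottleneck bit rate. The function name and constants are illustrative only.

```python
FRAME_BYTES = 1500            # assumption: one full-size frame per descriptor
ONE_GBPS = 1_000_000_000      # bottleneck (step-down) rate in bits per second


def full_ring_delay_ms(descriptors, frame_bytes, drain_bps):
    """Milliseconds needed to drain a full ring at the given bit rate."""
    bits_queued = descriptors * frame_bytes * 8
    return 1000.0 * bits_queued / drain_bps


if __name__ == "__main__":
    # Ring sizes mentioned in this thread.
    for ring_size in (256, 1024, 2048, 4096):
        delay = full_ring_delay_ms(ring_size, FRAME_BYTES, ONE_GBPS)
        print(f"{ring_size:5d} descriptors: {delay:.3f} ms of queued traffic")
```

Under these assumptions, a full ring of 4096 descriptors in front of a 1 Gbps bottleneck holds about 49 ms of traffic, versus about 3 ms for 256 descriptors. Smaller frames or a faster drain rate shrink the figure proportionally.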

Self-Managed Cluster - that must be a euphemism for the dreaded Full Management HA cluster ...

...

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist
