CoreXL: only one SND core is busy

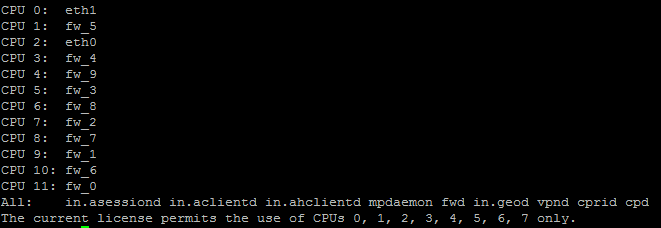

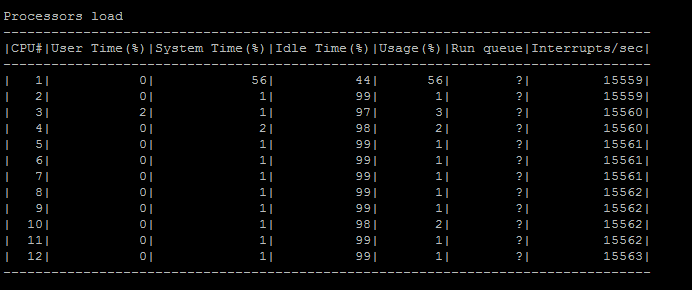

I'll put pictures because the copy paste breaks the table formatting:

fw ctl affinity -l -r

cpstat os -f multi_cpu (it's Sunday morning so not much traffic)

As you can see, only the first SND (on the first CPU core) seems to be doing anything. The other SND (CPU 2 or 3, depending on the command) is mostly idle.

I suspect this is because the gateway has only one LACP bond interface (made of two physical interfaces), although it carries many VLAN subinterfaces. All queuing for this bond interface might then be handled by the first SND only. Does that make sense?

If this is correct, I guess I should reconfigure CoreXL with only one SND?

It is an R77.30 open server gateway.

6 Replies

You have 12 CPU cores, but in fact only 8 are licensed.

Anyway, have a look here and see if it helps:

Kind regards,

Jozko Mrkvicka


> You have 12 CPU cores, but in fact only 8 are licensed.

I know this, but I don't see how it relates to the second SND idling.

Need full Super Seven command outputs to answer your question:

Super Seven Performance Assessment Commands (s7pac)

This will help answer questions such as how much traffic is accelerated, whether SecureXL is enabled, whether you are using manual affinity, etc.

--

Second Edition of my "Max Power" Firewall Book

Now Available at http://www.maxpowerfirewalls.com

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


Here are the missing 5:

fwaccel stats -s

Accelerated conns/Total conns : 7337/14761 (49%)

Accelerated pkts/Total pkts : 138590646/145791596 (95%)

F2Fed pkts/Total pkts : 5881723/145791596 (4%)

PXL pkts/Total pkts : 1319227/145791596 (0%)

QXL pkts/Total pkts : 0/145791596 (0%)
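The percentages `fwaccel stats -s` prints are whole numbers, so small shares show up as 0%. As a rough sketch (not a Check Point tool; the function name `parse_fwaccel_stats` is made up for illustration), the counters above can be re-read with more precision like this:

```python
import re

# Sample text copied from the `fwaccel stats -s` output in this post.
FWACCEL_STATS = """\
Accelerated conns/Total conns : 7337/14761 (49%)
Accelerated pkts/Total pkts : 138590646/145791596 (95%)
F2Fed pkts/Total pkts : 5881723/145791596 (4%)
PXL pkts/Total pkts : 1319227/145791596 (0%)
QXL pkts/Total pkts : 0/145791596 (0%)
"""

# Matches "<label> : <count>/<total>"; the trailing "(NN%)" is ignored.
LINE_RE = re.compile(r"^(?P<label>[^:]+?)\s*:\s*(?P<num>\d+)/(?P<den>\d+)")


def parse_fwaccel_stats(text):
    """Return {label: (count, total, percent)} for each 'x/y' counter line."""
    result = {}
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # skip anything that is not a counter line
        num, den = int(match["num"]), int(match["den"])
        percent = 100.0 * num / den if den else 0.0
        result[match["label"]] = (num, den, percent)
    return result


stats = parse_fwaccel_stats(FWACCEL_STATS)
for label, (num, den, percent) in stats.items():
    print(f"{label}: {num}/{den} = {percent:.2f}%")
```

With these numbers, the accelerated, F2F, PXL and QXL packet counters add up exactly to the total packet count, and the PXL share that is displayed as 0% is a little under 1%.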

grep -c ^processor /proc/cpuinfo

12

netstat -ni (sorry it's quite long)

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

bond0 1500 0 1394995720953 5039 39663538 0 1379697498747 0 0 0 BMmRU

bond0.4 1500 0 52359489686 0 0 0 21412786937 0 0 0 BMmRU

bond0.5 1500 0 369278960681 0 0 0 747705040016 0 0 0 BMmRU

bond0.6 1500 0 62052221192 0 0 0 60992876777 0 0 0 BMmRU

bond0.7 1500 0 3713019794 0 0 0 18864528927 0 0 0 BMmRU

bond0.8 1500 0 0 0 0 0 1006311 0 0 0 BMmRU

bond0.10 1500 0 10606207916 0 0 0 7136356779 0 0 0 BMmRU

bond0.14 1500 0 26754476499 0 0 0 20827379092 0 0 0 BMmRU

bond0.19 1500 0 2382647063 0 0 0 706305695 0 0 0 BMmRU

bond0.24 1500 0 19065409562 0 0 0 14061884167 0 0 0 BMmRU

bond0.25 1500 0 294290424 0 0 0 302684345 0 0 0 BMmRU

bond0.26 1500 0 302497724 0 0 0 251089232 0 0 0 BMmRU

bond0.27 1500 0 116215093 0 0 0 110093772 0 0 0 BMmRU

bond0.29 1500 0 30457344 0 0 0 31915625 0 0 0 BMmRU

bond0.79 1500 0 891130915 0 0 0 1318803777 0 0 0 BMmRU

bond0.98 1500 0 3284038 0 0 0 651117 0 0 0 BMmRU

bond0.101 1500 0 119772512 0 0 0 137775320 0 0 0 BMmRU

bond0.107 1500 0 45142551 0 0 0 29757675 0 0 0 BMmRU

bond0.111 1500 0 8696900244 0 0 0 4742540248 0 0 0 BMmRU

bond0.116 1500 0 490016686 0 0 0 460636644 0 0 0 BMmRU

bond0.118 1500 0 4139898545 0 0 0 1977956603 0 0 0 BMmRU

bond0.121 1500 0 853925844 0 0 0 499164596 0 0 0 BMmRU

bond0.122 1500 0 179499941 0 0 0 132989328 0 0 0 BMmRU

bond0.170 1500 0 23396028007 0 0 0 28860775722 0 0 0 BMmRU

bond0.173 1500 0 8780230716 0 0 0 5201512913 0 0 0 BMmRU

bond0.211 1500 0 538112194 0 0 0 1180701623 0 0 0 BMmRU

bond0.216 1500 0 80064697232 0 0 0 44714847049 0 0 0 BMmRU

bond0.218 1500 0 718764 0 0 0 614903 0 0 0 BMmRU

bond0.249 1500 0 131502970 0 0 0 144575776 0 0 0 BMmRU

bond0.254 1500 0 84007379531 0 0 0 72725532974 0 0 0 BMmRU

bond0.255 1500 0 280525503 0 0 0 373980096 0 0 0 BMmRU

bond0.307 1500 0 0 0 0 0 612125 0 0 0 BMmRU

bond0.316 1500 0 338881243 0 0 0 354686563 0 0 0 BMmRU

bond0.349 1500 0 3261618818 0 0 0 3152789986 0 0 0 BMmRU

bond0.408 1500 0 6738301 0 0 0 6430679 0 0 0 BMmRU

bond0.409 1500 0 33830202905 0 0 0 15597198399 0 0 0 BMmRU

bond0.411 1500 0 45680844 0 0 0 27421501 0 0 0 BMmRU

bond0.413 1500 0 110364862743 0 0 0 53024348871 0 0 0 BMmRU

bond0.416 1500 0 5816040525 0 0 0 6348221543 0 0 0 BMmRU

bond0.417 1500 0 39405084138 0 0 0 15587313742 0 0 0 BMmRU

bond0.419 1500 0 3483246523 0 0 0 4422397258 0 0 0 BMmRU

bond0.507 1500 0 0 0 0 0 612685 0 0 0 BMmRU

bond0.511 1500 0 1580213 0 0 0 881229 0 0 0 BMmRU

bond0.518 1500 0 5093539 0 0 0 6175567 0 0 0 BMmRU

bond0.521 1500 0 414168783 0 0 0 650313999 0 0 0 BMmRU

bond0.611 1500 0 216808003 0 0 0 223187242 0 0 0 BMmRU

bond0.641 1500 0 199609888915 0 0 0 92413132506 0 0 0 BMmRU

bond0.643 1500 0 80757360 0 0 0 42352700 0 0 0 BMmRU

bond0.644 1500 0 761316 0 0 0 610334 0 0 0 BMmRU

bond0.645 1500 0 148104750 0 0 0 44926296 0 0 0 BMmRU

bond0.653 1500 0 10976675 0 0 0 7416242 0 0 0 BMmRU

bond0.661 1500 0 184047965 0 0 0 147412665 0 0 0 BMmRU

bond0.662 1500 0 0 0 0 0 611729 0 0 0 BMmRU

bond0.663 1500 0 0 0 0 0 616366 0 0 0 BMmRU

bond0.671 1500 0 99791137303 0 0 0 48503733230 0 0 0 BMmRU

bond0.672 1500 0 28107423 0 0 0 22814680 0 0 0 BMmRU

bond0.673 1500 0 19990806 0 0 0 23292324 0 0 0 BMmRU

bond0.674 1500 0 2819356727 0 0 0 1238707791 0 0 0 BMmRU

bond0.681 1500 0 26829948954 0 0 0 11069005509 0 0 0 BMmRU

bond0.682 1500 0 60479150 0 0 0 72024059 0 0 0 BMmRU

bond0.683 1500 0 224978500 0 0 0 306106657 0 0 0 BMmRU

bond0.691 1500 0 54724491309 0 0 0 26333668129 0 0 0 BMmRU

bond0.692 1500 0 1898751795 0 0 0 2639172349 0 0 0 BMmRU

bond0.694 1500 0 2728296755 0 0 0 1477418884 0 0 0 BMmRU

bond0.695 1500 0 869064 0 0 0 621226 0 0 0 BMmRU

bond0.696 1500 0 5275010566 0 0 0 2878140596 0 0 0 BMmRU

bond0.697 1500 0 4596758 0 0 0 4380694 0 0 0 BMmRU

bond0.698 1500 0 1951112 0 0 0 1826269 0 0 0 BMmRU

bond0.904 1500 0 13170157932 0 0 0 9857775878 0 0 0 BMmRU

bond0.905 1500 0 14251494963 0 0 0 10461430294 0 0 0 BMmRU

bond0.1025 1500 0 16777504 0 0 0 19842306 0 0 0 BMmRU

bond0.1026 1500 0 147591886 0 0 0 321596472 0 0 0 BMmRU

bond0.1032 1500 0 12563176 0 0 0 11953149 0 0 0 BMmRU

bond0.1080 1500 0 9763356910 0 0 0 14883147090 0 0 0 BMmRU

bond0.1099 1500 0 30982634 0 0 0 37281323 0 0 0 BMmRU

eth0 1500 0 740726327381 911 18724147 0 661416498362 0 0 0 BMsRU

eth1 1500 0 654269393572 4128 20939391 0 718281000385 0 0 0 BMsRU

lo 16436 0 44694202 0 0 0 44694202 0 0 0 LRU

fw ctl multik stat

| ID | Active | CPU | Connections | Peak |
|---|---|---|---|---|
| 0 | Yes | 11 | 1765 | 18016 |
| 1 | Yes | 9 | 1240 | 17449 |
| 2 | Yes | 7 | 1346 | 29195 |
| 3 | Yes | 5 | 1182 | 29215 |
| 4 | Yes | 3 | 1735 | 14742 |
| 5 | Yes | 1 | 1585 | 14783 |
| 6 | Yes | 10 | 1740 | 57699 |
| 7 | Yes | 8 | 1633 | 16750 |
| 8 | Yes | 6 | 1088 | 19264 |
| 9 | Yes | 4 | 1477 | 18676 |

More generally, though: with bonding, is the SND assigned to the bond interface or to the underlying physical interfaces?
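One way to cross-check which cores are left for SND/IRQ work is to subtract the CPUs listed in the `fw ctl multik stat` output above from the 12 CPUs reported by `grep -c ^processor /proc/cpuinfo`. The sketch below assumes the default CoreXL layout, where any CPU not bound to a firewall instance is available for SND/IRQ handling; manual affinity settings could change that, and `fw ctl affinity -l -r` remains the authoritative view. The helper name `instance_cpus` is made up for illustration.

```python
# Sample copied from the `fw ctl multik stat` output in this post.
MULTIK_STAT = """\
ID | Active | CPU | Connections | Peak
----------------------------------------------
0 | Yes | 11 | 1765 | 18016
1 | Yes | 9 | 1240 | 17449
2 | Yes | 7 | 1346 | 29195
3 | Yes | 5 | 1182 | 29215
4 | Yes | 3 | 1735 | 14742
5 | Yes | 1 | 1585 | 14783
6 | Yes | 10 | 1740 | 57699
7 | Yes | 8 | 1633 | 16750
8 | Yes | 6 | 1088 | 19264
9 | Yes | 4 | 1477 | 18676
"""

TOTAL_CPUS = 12  # from `grep -c ^processor /proc/cpuinfo` in this post


def instance_cpus(text):
    """Collect the CPU IDs that firewall instances are bound to."""
    cpus = set()
    for line in text.splitlines():
        fields = [field.strip() for field in line.split("|")]
        # Data rows have five columns and a numeric instance ID.
        if len(fields) == 5 and fields[0].isdigit():
            cpus.add(int(fields[2]))
    return cpus


fw_cpus = instance_cpus(MULTIK_STAT)
remaining = sorted(set(range(TOTAL_CPUS)) - fw_cpus)
print(f"Firewall instance CPUs: {sorted(fw_cpus)}")
print(f"CPUs not bound to an instance: {remaining}")
```

For this gateway the ten instances occupy CPUs 1 and 3 through 11, leaving CPUs 0 and 2 unassigned.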

SND/IRQ cores are assigned to the physical interfaces: eth1 is being handled by CPU 0, while eth0 is being handled by CPU 2. 95% of your traffic is fully accelerated in the SXL path (with a relatively high templating rate of 49%), so higher CPU load is to be expected on your SND/IRQ cores. A few points:

- Is SMT/Hyperthreading enabled? (/sbin/cpuinfo) If so, you need to disable it and then assign 4 kernel instances via cpconfig (which will assign 2 SND/IRQ cores). Having SMT enabled with such a high percentage of accelerated traffic actually hurts performance.

- Since it looks like all traffic is being handled by just two physical interfaces, I'd recommend enabling Multi-Queue on both of them, even though the RX-DRP rate is well below 0.1%. This will help spread the load a bit more evenly across the 2 SND/IRQ cores.

- Your distribution of bonded traffic between the two physical interfaces looks pretty even, so no issues there.

- You definitely do NOT want to drop from 2 SND/IRQ cores down to one.

- I suppose you could configure 4 SND/IRQ cores with Multi-Queue enabled. I'm not sure how much that would help since there are only two active physical NICs, but it probably wouldn't hurt.
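The drop-rate and bond-balance observations above can be checked against the eth0 and eth1 counters from the `netstat -ni` output earlier in the thread. This is only a sketch: it assumes the drop rate is taken as RX-DRP divided by RX-OK, and the helper names are made up for illustration.

```python
# Counters copied from the eth0/eth1 rows of the `netstat -ni` output
# earlier in the thread.
COUNTERS = {
    "eth0": {"rx_ok": 740726327381, "rx_drp": 18724147, "tx_ok": 661416498362},
    "eth1": {"rx_ok": 654269393572, "rx_drp": 20939391, "tx_ok": 718281000385},
}

DROP_THRESHOLD_PCT = 0.1  # the 0.1% figure mentioned in the reply above


def rx_drop_pct(counters):
    """RX-DRP as a percentage of RX-OK (assumed definition of drop rate)."""
    return 100.0 * counters["rx_drp"] / counters["rx_ok"]


def share_pct(all_counters, key):
    """Each interface's share of the summed counter `key`, in percent."""
    total = sum(c[key] for c in all_counters.values())
    return {name: 100.0 * c[key] / total for name, c in all_counters.items()}


rx_share = share_pct(COUNTERS, "rx_ok")
tx_share = share_pct(COUNTERS, "tx_ok")

for name, c in COUNTERS.items():
    drop = rx_drop_pct(c)
    verdict = "below" if drop < DROP_THRESHOLD_PCT else "at or above"
    print(
        f"{name}: RX-DRP {drop:.4f}% ({verdict} {DROP_THRESHOLD_PCT}%), "
        f"RX share {rx_share[name]:.1f}%, TX share {tx_share[name]:.1f}%"
    )
```

The eth0 and eth1 RX-OK and TX-OK values also sum to the bond0 totals in the same table, which is a quick sanity check that the two physical interfaces carry all of the bond's traffic.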

Thank you very much, that answers all my questions.
