Last weekend we retired our "jumbo jets" (aka 41k chassis) in favour of 26k appliances running R80.30 take 155 with the 3.10 kernel.

The main driver was the limitations of the Scalable Platform (SP):

- we wanted to run a Virtual Router in our VSX, and that's not supported on SP

- we were not able to upgrade to the R80.xSP train because of multiple limitations, so on R76SP50 we:

  - were lacking FQDN objects

  - were lacking Updatable objects

  - had IA performance issues / high CPU usage

  - had 32-bit kernel limitations

- troubleshooting cases involving flow corrections between SGM blades proved cumbersome, and tweaking a box took a considerable number of hours in our environment. To give one example: when certain TCP RST conditions were met, a connection got "stuck" in the corrected SGM and was never released. This ended up consuming all high ports for that particular client's connections and eventually stopped traffic from that host (it was a load balancer representing many clients, so high ports were frequently reused)

- most importantly, the lack of available administrators able to maintain the SP platform: we could not find anyone to replace the admins who left our team. This created a major risk to our contingency plans
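To illustrate the stuck-connection example above, here is a rough Python sketch of how a small leak of source ports ends up blocking a busy client. It is only a toy model, not how the SGMs actually track connections: the function name, the pool size and the "stuck" probability are all made-up assumptions.

```python
import random


def simulate_port_exhaustion(port_count=1000, stuck_probability=0.01,
                             max_connections=1_000_000, seed=0):
    """Toy model (hypothetical numbers): count how many connections a client
    opens before every source port is held by a never-released entry.
    Returns None if the pool never runs dry within max_connections."""
    rng = random.Random(seed)
    free_ports = list(range(port_count))  # pool of reusable high ports
    for opened in range(max_connections):
        if not free_ports:
            return opened  # no port left: traffic from this host stops
        port = free_ports.pop()
        if rng.random() < stuck_probability:
            continue  # entry is stuck and the port is never given back
        free_ports.append(port)  # normal close: port returns to the pool
    return None


if __name__ == "__main__":
    print(simulate_port_exhaustion())
```

The point is simply that a host which reuses its ports heavily (like a load balancer fronting many clients) will hit the wall even when only a small fraction of connections get stuck.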

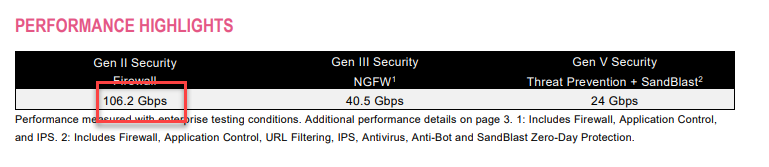

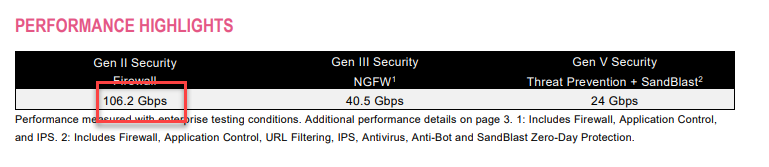

So the decision was made to move back to appliances. At the time we were seeing total backplane traffic in the chassis, across all SGMs, approaching 40Gbps. So we had no choice but to get the 26000, which promised 100Gbps of pure FW throughput.

Well, after all these years working with CP, I guess you need to take all the numbers with a pinch of salt.

What's the reality after running with it for a couple of days? I personally don't think we will be able to push it beyond 60Gbps.

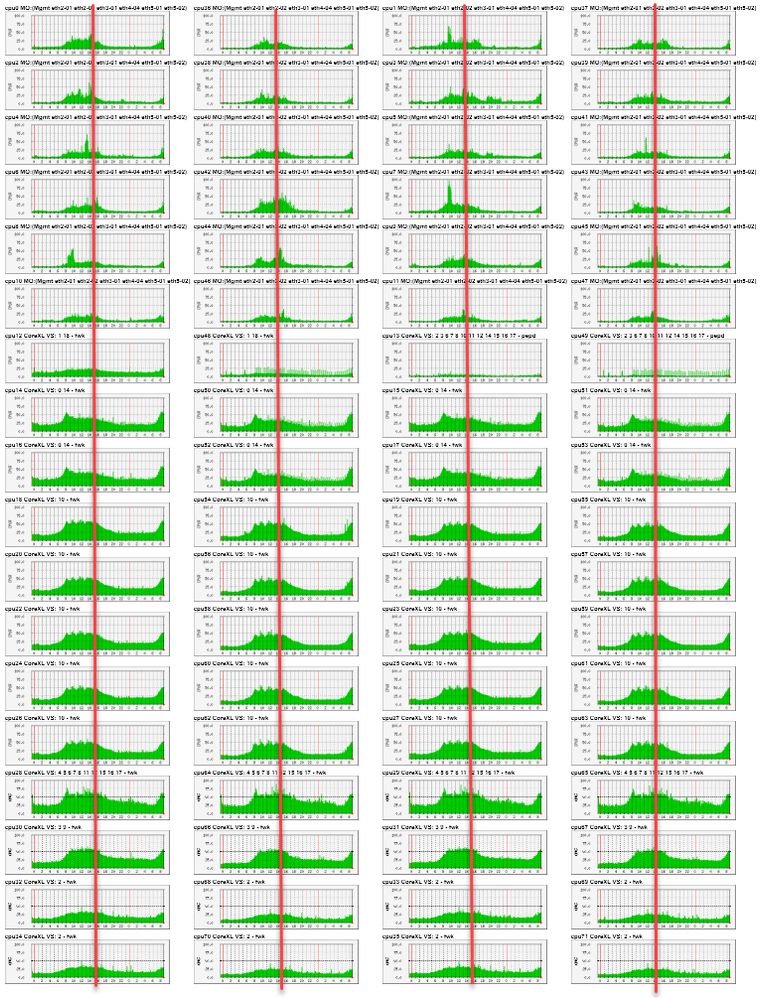

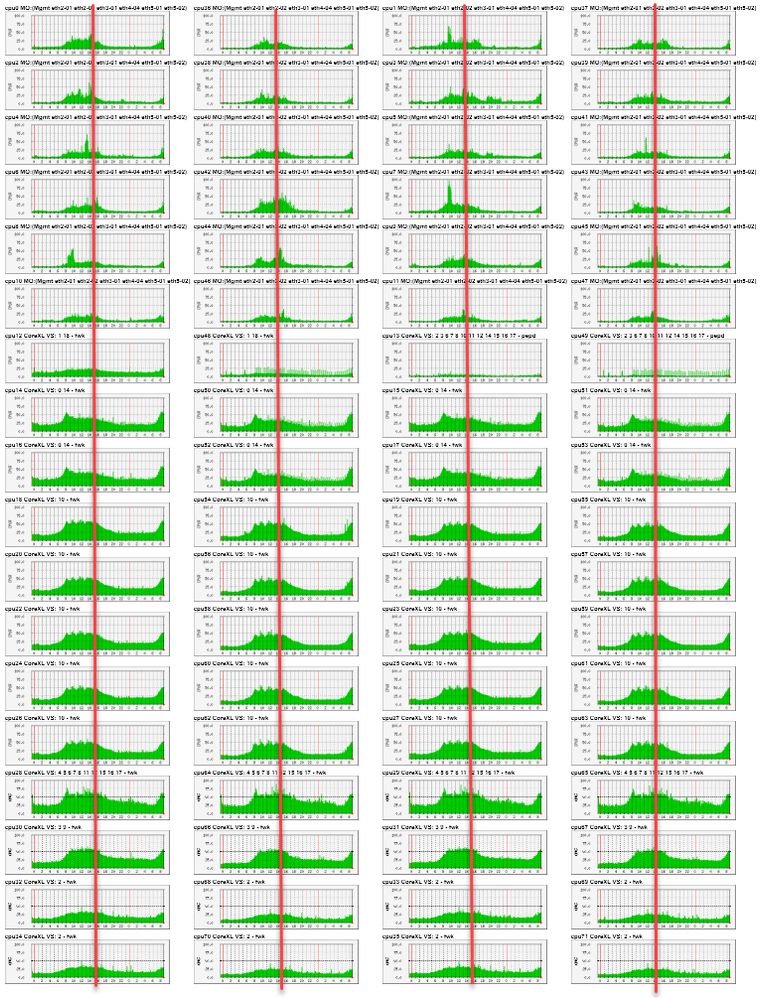

Why? The red line in the screenshot marks the point in time when:

- total throughput was just under 20Gbps

- total concurrent connections were just over 1M

- total connection rate was 30k cps

- acceleration across all VSes was > 90%

- only the FW and IA blades were in use

If I take the average CPU usage across all 72 cores, it works out at approx. 30%, so I could "guesstimate" that we could triple the performance of the box (i.e. to roughly 60Gbps) before it maxes out in our environment.

Was it worth the upgrade, given that the 41k would easily have done the same job performance-wise, or even better?
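The back-of-the-envelope maths behind that guess can be written down as a short Python sketch. It assumes throughput scales linearly with average CPU usage, which is a big simplification (per-core hot spots, acceleration rate and traffic mix will all skew it); the function name and the 90% ceiling are my own assumptions, not anything from CP.

```python
def estimate_max_throughput(observed_gbps, avg_cpu_util, cpu_ceiling=1.0):
    """Linear extrapolation: throughput at cpu_ceiling, given the throughput
    observed at avg_cpu_util (both as fractions, e.g. 0.30 for 30%)."""
    if not 0 < avg_cpu_util <= 1:
        raise ValueError("avg_cpu_util must be in (0, 1]")
    return observed_gbps * cpu_ceiling / avg_cpu_util


if __name__ == "__main__":
    # Figures from the post: just under 20Gbps at roughly 30% average CPU.
    print(round(estimate_max_throughput(20, 0.30), 1))        # all cores at 100%
    print(round(estimate_max_throughput(20, 0.30, 0.90), 1))  # hypothetical 90% ceiling
```

With a full 100% ceiling the extrapolation lands a little above triple; leaving some headroom brings it back to around the 60Gbps mark mentioned earlier.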

I have to admit that the (lack of) SP platform admin availability, plus the gain of FQDN objects, Updatable objects and Virtual Router, justified it for us. It might be short-lived due to capacity limitations, but it puts us in the right direction. I'm also glad to be moving away from SP. I don't think it's mature enough, just like VSX as a product was 7 years ago, before reaching R77.30.

I would love to hear from those using VSX on open server HW and running R80.30: how's the performance on those? I have heard that open servers outperform appliances by a mile thanks to faster CPUs.