
Topologies and DNS

I would very much appreciate your help understanding the inner concepts of topologies, which have been driving me crazy for the last 36 hours.

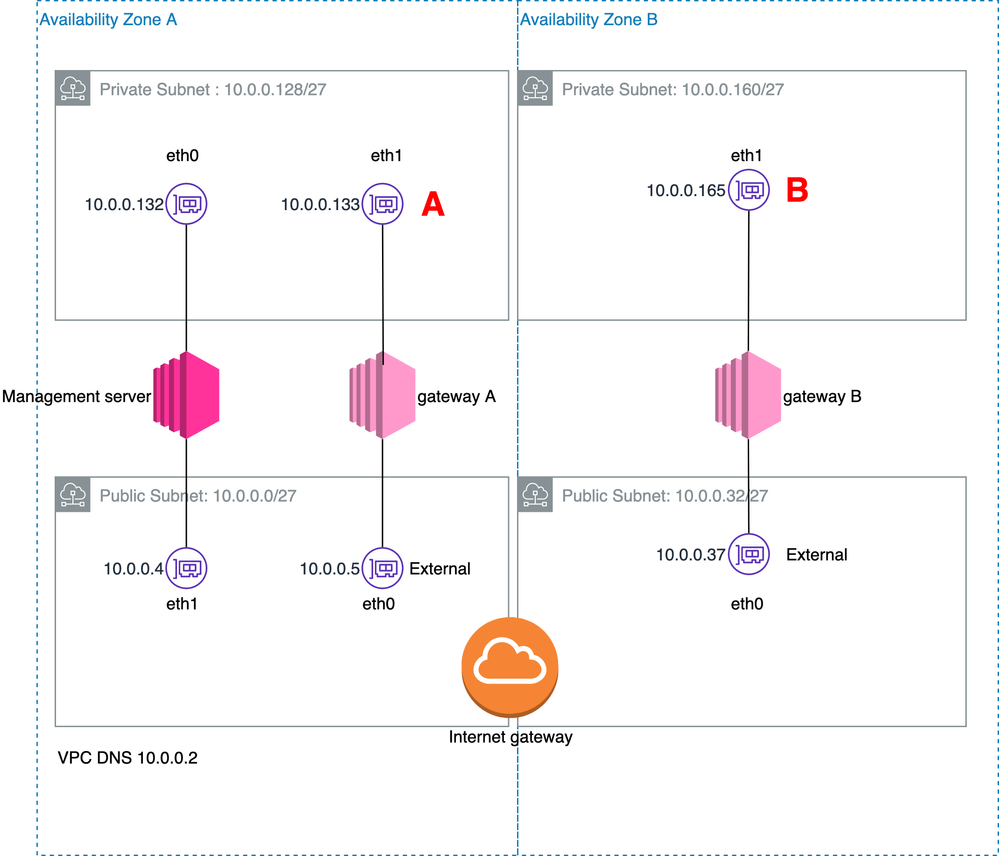

Refer to the base diagram in the attachment: a management server in the same AZ as one gateway, and an extra gateway in another AZ. All are R80.40 and, apart from the problem described, working normally.

For this example, the Standard policy only allows SSH so that I can access the instances.

My problem is that, depending on how I set the topology on the ENIs in the private subnets, DNS resolution stops working in a way I can't understand. Here are the different combinations, referring to the diagram above:

* If both A and B are set to "Internal defined by address and mask", the management server fails to communicate with B.

* If I set both A and B to Specific "10.0.0.0/24", I can manage both instances; B resolves DNS, but A does not.

* If I set both A and B to Specific "10.0.0.128/25", I can manage both instances; A resolves DNS, but B doesn't (times out).

* If I set A to "Internal defined by address and mask" and B to "10.0.0.0/24", everything works, but I can no longer make any sense of what I am doing.
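One way to reason about these combinations is to check which of the two AWS DNS addresses each topology definition classifies as internal. The sketch below is a simplification using Python's standard `ipaddress` module. It assumes the VPC is 10.0.0.0/24, so the Amazon-provided resolver sits at 10.0.0.2 (VPC base + 2). It does not model "Internal defined by address and mask", because the actual interface subnets only appear in the diagram.

```python
import ipaddress

# Assumption: a 10.0.0.0/24 VPC, so the Amazon-provided resolver is at
# the VPC base address + 2. 169.254.169.253 is the link-local resolver.
DNS_TARGETS = [
    ipaddress.ip_address("10.0.0.2"),
    ipaddress.ip_address("169.254.169.253"),
]


def internal_dns_targets(topology):
    """Return the DNS addresses covered by an internal topology.

    `topology` is a list of CIDR strings standing in for a "Specific"
    topology definition (a hypothetical simplification).
    """
    networks = [ipaddress.ip_network(cidr) for cidr in topology]
    return [str(ip) for ip in DNS_TARGETS if any(ip in net for net in networks)]


for spec in (["10.0.0.0/24"], ["10.0.0.128/25"]):
    print(f"Specific {spec[0]}: internal DNS targets = {internal_dns_targets(spec)}")
```

Under these assumptions, "10.0.0.0/24" puts 10.0.0.2 inside the internal topology, while "10.0.0.128/25" leaves it out. Neither covers 169.254.169.253.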

I reproduce all of the scenarios above just by changing the interface definition, publishing, and installing policy. The behaviour switches right after policy installation finishes.

It surely has something to do with my less-than-perfect understanding of how topologies work, but I would be thankful if someone could explain it in the context of my example. Ultimately, I would like a consistent topology definition for all the gateways, one that works regardless of which AZ the gateways or the management servers are in...

Thanks

---

While I'm not sure what the right solution would have been in my scenario, I think I found a way out of it.

Through trial and error, I confirmed that the problem revolves around whether AWS's DNS addresses (the VPC .2 resolver and 169.254.169.253) are included in the internal topology or not. In one of the AZs, the .2 address is in the subnet attached to the _public_ interface of one of the gateways, so that AZ would require an exceptional topology definition, while the others required a group containing the subnet plus the two DNS hosts.

Not pretty. I ended up turning it upside down, which made it cleaner and, in my view, documents another good practice on AWS: if you are designing around a private and a public subnet with dual-homed gateways, I _strongly_ advise making the lower half of the address space the private subnets. That way the .2 address is automatically included in the internal part of the topology, saving you some trouble.
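The effect of putting the private subnet in the lower half can be checked with a few lines of Python. This is a sketch assuming a single 10.0.0.0/24 VPC split into two /25 subnets; `resolver_subnet_role` is a hypothetical helper, and a real multi-AZ deployment will have more subnets than this.

```python
import ipaddress


def resolver_subnet_role(vpc_cidr, private_cidr, public_cidr):
    """Report which subnet holds the VPC resolver (VPC base address + 2)."""
    resolver = ipaddress.ip_network(vpc_cidr).network_address + 2
    if resolver in ipaddress.ip_network(private_cidr):
        return "private"
    if resolver in ipaddress.ip_network(public_cidr):
        return "public"
    return "neither"


# Assumption: one /24 VPC split into a lower and an upper /25.
vpc = ipaddress.ip_network("10.0.0.0/24")
lower, upper = (str(net) for net in vpc.subnets(new_prefix=25))

print("private = lower half:", resolver_subnet_role(str(vpc), lower, upper))
print("private = upper half:", resolver_subnet_role(str(vpc), upper, lower))
```

In this two-subnet layout, only the lower-half choice places 10.0.0.2 in the private subnet, where an "address and mask" internal topology would pick it up.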

Or maybe there was another totally different and simple answer, but this worked too.

---

Which means that if DNS traffic to .2 goes out the external interface while .2 is defined as part of the internal interface topology, the traffic will get dropped by anti-spoofing.

You should be seeing logs generated for this as I believe we log these violations by default.
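A simplified model of the anti-spoofing check helps show why. This sketch only looks at the source address of packets arriving on an interface. It is not Check Point's implementation, and the interface names `eth0` (External) and `eth1` (Internal) are hypothetical. Under this model, a DNS reply from 10.0.0.2 arriving on the External interface is treated as spoofed whenever 10.0.0.2 is part of an internal interface's topology.

```python
import ipaddress


def is_spoofed(arrival_if, src, internal_topology):
    """Simplified anti-spoofing check (a model, not Check Point's code).

    internal_topology maps each Internal interface name to the CIDRs its
    topology covers. Any interface not in the map is treated as External.
    """
    ip = ipaddress.ip_address(src)

    def behind(ifname):
        return any(ip in ipaddress.ip_network(cidr)
                   for cidr in internal_topology[ifname])

    if arrival_if in internal_topology:
        # Internal interface: the source must belong to its own networks.
        return not behind(arrival_if)
    # External interface: a source claimed by any Internal interface
    # should never arrive here.
    return any(behind(name) for name in internal_topology)


# Hypothetical interfaces: eth0 is External, eth1 is Internal.
wide = {"eth1": ["10.0.0.0/24"]}      # Specific 10.0.0.0/24
narrow = {"eth1": ["10.0.0.128/25"]}  # Specific 10.0.0.128/25

# DNS reply from the .2 resolver arriving on the External interface.
print("10.0.0.0/24   ->", is_spoofed("eth0", "10.0.0.2", wide))
print("10.0.0.128/25 ->", is_spoofed("eth0", "10.0.0.2", narrow))
```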

---

Thanks. The spoofing part explains why the behaviour differs when the address is on the same network as the interface.

Is the gateway's behaviour regarding 169.254.169.x addresses (EC2 metadata and the hypervisor DNS server) documented anywhere?

As far as I can see, those addresses are not part of the route table and are not in the policy rules, yet they still work for instance roles, so I guess there is some "special handling"?

Thanks

---

In general, 169.254.x.x address space is considered "Link Local" address space in traditional IPv4 networks.

The only time you'll see those addresses in most environments is when a client requests a DHCP address and doesn't get one.

The client will then assign itself something in the 169.254.x.x address space.

This is described in RFC 3927: https://tools.ietf.org/html/rfc3927

The public cloud providers use this address space for things like instance metadata and infrastructure services (e.g. DNS).
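Python's standard `ipaddress` module can confirm that the AWS service addresses mentioned in this thread fall in the RFC 3927 range. As AWS documents them, 169.254.169.253 is the Amazon-provided DNS resolver and 169.254.169.254 is the EC2 instance metadata service. The 10.0.0.2 entry is included for contrast and assumes a 10.0.0.0/24 VPC.

```python
import ipaddress

# RFC 3927 reserves 169.254.0.0/16 for IPv4 link-local addresses.
LINK_LOCAL = ipaddress.ip_network("169.254.0.0/16")

# Addresses from the thread; 10.0.0.2 assumes a 10.0.0.0/24 VPC.
ADDRESSES = {
    "169.254.169.253": "Amazon-provided DNS resolver",
    "169.254.169.254": "EC2 instance metadata service",
    "10.0.0.2": "VPC resolver (VPC base + 2)",
}


def is_link_local(addr):
    """True if addr falls inside 169.254.0.0/16."""
    return ipaddress.ip_address(addr) in LINK_LOCAL


for addr, role in ADDRESSES.items():
    print(f"{addr:16} {role:32} link-local={is_link_local(addr)}")
```

The module also exposes the same check as the `is_link_local` property on `IPv4Address` objects.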

In general, if there's no explicit route for something, the traffic would go wherever the default router says to go.

169.254 address space is no different in this regard: it goes out the interface of the default route in this case.

Since that interface has a topology of External and you haven't included 169.254 in the internal interface topology, anti-spoofing won't cause an issue.
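The "no explicit route, so follow the default" behaviour is ordinary longest-prefix matching. The sketch below uses a hypothetical routing table (interface names and subnets are made up for illustration, not taken from the diagram) to show that 169.254.169.253 only matches 0.0.0.0/0 and therefore leaves through the External interface.

```python
import ipaddress

# Hypothetical gateway routing table: (destination CIDR, egress interface).
# eth0 is the External (public) interface, eth1 the Internal (private) one.
ROUTES = [
    ("0.0.0.0/0", "eth0"),      # default route via the External interface
    ("10.0.0.0/25", "eth0"),    # public subnet, directly connected
    ("10.0.0.128/25", "eth1"),  # private subnet, directly connected
]


def egress_interface(dst, routes=ROUTES):
    """Choose the egress interface by longest-prefix match."""
    ip = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(cidr), ifname)
        for cidr, ifname in routes
        if ip in ipaddress.ip_network(cidr)
    ]
    # 0.0.0.0/0 matches everything, so there is always at least one match;
    # the most specific (longest) prefix wins.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


for dst in ("169.254.169.253", "10.0.0.2", "10.0.0.200"):
    print(dst, "->", egress_interface(dst))
```

In this made-up table the public subnet is the lower half, so the .2 resolver is also reached through the External interface.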

Also, no explicit rules are required for traffic originating from the gateway itself, those are permitted implicitly.

Hope that clears things up.

---

Perfect. Thanks so much for the clear & complete answer.