
Can we avoid promiscuous mode for vSEC clustering?

I have been working for a few weeks on virtualizing Check Point security gateways. To allow the HA protocol (CCP) so that I could create a ClusterXL cluster, I had to enable promiscuous mode in VMware.

So I was wondering whether there is another solution.

If not, are there any best practices to avoid route causes in data centers (packet loss, for example)?

---

I'm not sure what you mean by "route causes."

In general, the CCP packets (which are Multicast by default) are there to determine reachability/availability of the cluster members on interfaces.

You can potentially switch CCP to broadcast mode; see the article "How to set ClusterXL Control Protocol (CCP) in Broadcast / Multicast mode in ClusterXL".
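The difference between CCP's broadcast and multicast modes shows up in the destination MAC address of each frame, which is what a vSwitch or physical switch looks at. As a generic illustration of standard IEEE 802 addressing (not Check Point code, and not a statement of which MAC CCP actually uses), a frame is broadcast when its destination is `ff:ff:ff:ff:ff:ff` and multicast when the least-significant bit of the first octet (the I/G bit) is set. The helper name `classify_dst_mac` and the sample MACs are hypothetical.

```python
def classify_dst_mac(mac: str) -> str:
    """Classify an Ethernet destination MAC as broadcast, multicast, or unicast.

    IEEE 802 rule: all-ones is broadcast; otherwise the least-significant
    bit of the first octet (the I/G bit) marks a group (multicast) address.
    """
    octets = [int(part, 16) for part in mac.replace("-", ":").split(":")]
    if len(octets) != 6 or any(not 0 <= o <= 0xFF for o in octets):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    if all(o == 0xFF for o in octets):
        return "broadcast"
    if octets[0] & 0x01:
        return "multicast"
    return "unicast"


if __name__ == "__main__":
    # Sample addresses only: an all-ones broadcast, an IPv4 multicast MAC, a VMware-OUI unicast MAC
    for mac in ("ff:ff:ff:ff:ff:ff", "01:00:5e:00:00:12", "00:50:56:aa:bb:cc"):
        print(mac, "->", classify_dst_mac(mac))
```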

---

Actually it may not be the right term.

In order "to determine reachability/availability of the cluster members on interfaces", we must allow promiscuous mode on the VMware vSwitch (with both broadcast and multicast CCP).

I am seeing some packet loss in my data center because of this mode, so I am looking for best practices to avoid it or reduce its impact.

But I haven't found any information about this yet (in the forum or in the Check Point documentation).

For information, we use vSphere 5.5.

Maybe you have another idea?

---

Unfortunately, ClusterXL in its various forms requires multicast or broadcast packets, so this mode is required.

Its impact is commensurate with the amount of traffic passing through the cluster.

Perhaps you can limit its impact by reducing the number of devices directly connected to the same vSwitches as the vSEC instances.
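Why fewer neighbours helps can be sketched with a toy port-group model. It assumes unicast frames reach only the port whose MAC matches, broadcasts are flooded, multicasts reach only ports that registered the group (an assumption; real vSwitch multicast filtering depends on the vSphere version and settings), and a promiscuous port receives everything. This is a simplified illustration, not VMware's forwarding logic; `Port`, `receivers`, `frames_seen`, and all names, MACs, and frame counts are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Port:
    """A vNIC on a simplified port group (toy model, not VMware's actual forwarding code)."""
    name: str
    mac: str
    promiscuous: bool = False
    # Assumption: lowercase group MACs the guest registered; real multicast filtering varies.
    multicast_groups: set = field(default_factory=set)


def receivers(ports, src, dst):
    """Names of the ports that receive a frame sent by port `src` to MAC `dst`."""
    dst = dst.lower()
    is_broadcast = dst == "ff:ff:ff:ff:ff:ff"
    is_multicast = (int(dst.split(":")[0], 16) & 0x01) == 1 and not is_broadcast
    result = []
    for port in ports:
        if port.name == src:
            continue  # the frame is not reflected back to its sender
        if (
            port.promiscuous                      # promiscuous ports see everything
            or is_broadcast                       # broadcasts are flooded
            or (is_multicast and dst in port.multicast_groups)
            or port.mac.lower() == dst            # unicast addressed to this port
        ):
            result.append(port.name)
    return result


def frames_seen(ports, flows, name):
    """Total frames delivered to port `name`, given flows of (src, dst_mac, count)."""
    return sum(count for src, dst, count in flows if name in receivers(ports, src, dst))


if __name__ == "__main__":
    ports = [
        Port("fw1", "00:50:56:00:00:01", promiscuous=True),
        Port("fw2", "00:50:56:00:00:02"),
        Port("vm1", "00:50:56:00:00:11"),
        Port("vm2", "00:50:56:00:00:12"),
    ]
    flows = [
        ("vm1", "00:50:56:00:00:12", 1000),  # unrelated VM-to-VM traffic
        ("vm2", "00:50:56:00:00:11", 1000),
        ("fw2", "00:50:56:00:00:01", 10),    # traffic actually addressed to fw1
    ]
    print(frames_seen(ports, flows, "fw1"))  # 2010: fw1 also copies the 2000 unrelated frames
    print(frames_seen(ports, flows, "fw2"))  # 0
```

In this model the promiscuous port's extra load is the sum of everyone else's traffic on the port group, so moving unrelated VMs off the vSEC port groups shrinks it directly.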

As this sounds like a VMware issue, have you engaged with them at all?

---

You are absolutely right. It is indeed a VMware issue, and it seems we must upgrade our vSphere platform to version 6.

With v6 we could use multicast without promiscuous mode, but I would have liked confirmation from Check Point that this is the best practice.

By the way, thanks for your response.

---

The packet loss you are referring to may be due to broadcast control configured on the physical switches your ESXi servers are connected to.

Please check whether any broadcast-limiting settings are configured on the switch ports connected to the NICs that carry the port groups and vSwitches assigned to the ClusterXL members.
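On many physical switches, broadcast control takes the form of storm control with a threshold expressed as a percentage of link bandwidth (the exact semantics are vendor-specific, and some vendors use packets per second instead). A rough conversion to frames per second helps judge whether CCP broadcasts plus other broadcast traffic could hit that limit. This sketch assumes the percentage applies to raw link bandwidth and counts 20 bytes of preamble, start-of-frame delimiter, and inter-frame gap per frame; your switch may count differently. The function name is hypothetical.

```python
def storm_control_pps(link_bps: float, level_percent: float, frame_bytes: int = 64) -> int:
    """Approximate broadcast frames/second allowed by a percentage-based storm-control level.

    Assumption: the level is a percentage of link bandwidth, and each Ethernet
    frame also occupies 20 bytes of preamble, SFD and inter-frame gap on the wire.
    """
    if not 0 < level_percent <= 100:
        raise ValueError("level_percent must be in (0, 100]")
    bits_per_frame = (frame_bytes + 20) * 8
    return int(link_bps * level_percent / 100 // bits_per_frame)


if __name__ == "__main__":
    # Example: a 1 Gbit/s port with a 1% broadcast threshold and minimum-size frames
    print(storm_control_pps(1e9, 1.0))    # 14880
    print(storm_control_pps(1e9, 100.0))  # 1488095 (line rate for 64-byte frames)
```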

---

Thank you, I will check this lead with the virtualization infrastructure team.

---

Hello, is there any way to avoid promiscuous mode with R80.20 or R80.30?

---

Why do you need promiscuous mode? Do you have VMAC enabled?

Also, CCP supports a unicast mode of operation as of R80.30 (it must be configured).

---

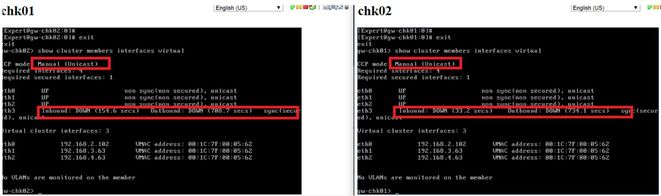

Hello, I tried with R80.20 and configured unicast mode, but the sync link is still showing as down. I read that promiscuous mode is still mandatory to synchronize the cluster. If you have any material or configuration manuals, that would be great.

---

Hello, I have two gateways on a dvSwitch configured for unicast, and the sync interface remains down. This lab runs version R80.20.

Each interface has its own portgroup.

---

@Pablo_Barriga, each interface or each pair of interfaces?

---

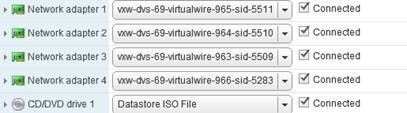

Each network adapter has its own portgroup.

---

@Pablo_Barriga, what I am trying to determine is whether the Sync interfaces of both cluster members share the same portgroup. They should.
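The check being asked for here is simple enough to script against whatever inventory you export from vSphere. A minimal sketch, assuming a hypothetical inventory shaped as VM name → {adapter label → portgroup} and a hypothetical mapping of which adapter is each member's Sync NIC; neither structure comes from a real vSphere API.

```python
def sync_portgroups(inventory, sync_nic):
    """Map each cluster member to the portgroup its Sync NIC is attached to."""
    return {member: inventory[member][nic] for member, nic in sync_nic.items()}


def sync_shares_portgroup(inventory, sync_nic):
    """True when all members' Sync NICs sit on one portgroup, as the reply recommends."""
    return len(set(sync_portgroups(inventory, sync_nic).values())) == 1


if __name__ == "__main__":
    # Hypothetical layout matching "each network adapter has its own portgroup"
    inventory = {
        "vsec-a": {"Network adapter 1": "PG-External-A", "Network adapter 3": "PG-Sync-A"},
        "vsec-b": {"Network adapter 1": "PG-External-B", "Network adapter 3": "PG-Sync-B"},
    }
    sync_nic = {"vsec-a": "Network adapter 3", "vsec-b": "Network adapter 3"}
    print(sync_portgroups(inventory, sync_nic))
    print(sync_shares_portgroup(inventory, sync_nic))  # False: the Sync NICs are on different portgroups
```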

---

Are the vSECs on the same host or on two different hosts?

---

I suggest vMotioning the vSECs to the same host and verifying that it works. If it does, move them back to separate hosts and inspect the portgroup, dvSwitch, and physical switch to see where the traffic is getting lost.

---

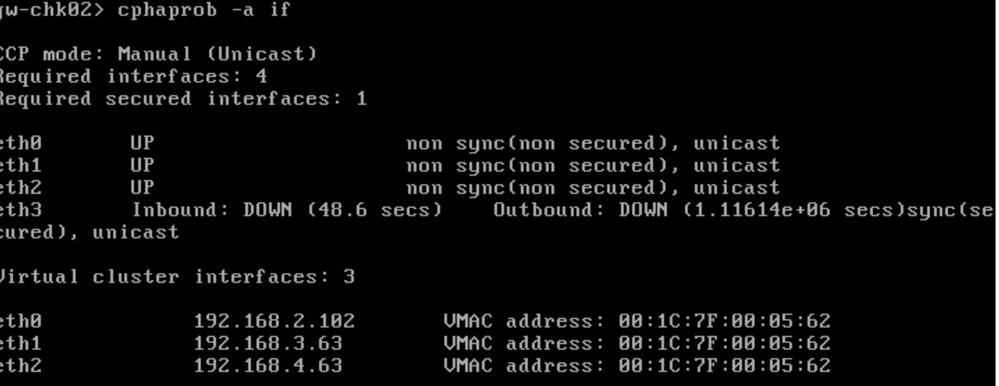

Hello, both gateways are on the same host, but the cluster remains down. VMAC is enabled.

---

I would involve TAC to resolve this...

---

Hello, they told me to activate promiscuous mode. That's quite complicated because it's a vCloud infrastructure. I'm going to try to reproduce the problem with other versions.

---

So far everything is quite secure; it looks like I'll have to use an ADC for load balancing.

---

For anyone visiting here and using VMware:

I am on my third lab. The first used open-source OPNsense (based on pfSense) with its own CARP/VRRP implementation. The network design remained the same throughout all three labs, and so did the VMware kit it was hosted on.

I dealt with the promiscuous-mode issue in OPNsense, where the implementation is far more finicky. It appears to use the "virtual MAC" option, which required me to create separate vSwitches just for the router MGMT interface for each network I wanted it to participate in (doubling the number of networks).

I tested this for several hours. I stopped short of enabling Virtual MAC on the CP cluster in my labs (because of the doubled networks, and because putting whole switches in promiscuous mode isn't my idea of fun), but I can 100% confirm that cluster VIPs work on vSwitches that are not in promiscuous mode. Both broadcast and unicast worked. (The cluster picked broadcast mode when the dedicated sync interfaces had no IPs assigned, and unicast when they were given a /30 between themselves.) I'm on ESXi 6.7, but I doubt that matters. It's an under-the-hood VXLAN bridging/networking thing, not inherently a VMware problem.
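The /30 mentioned above is the smallest conventional IPv4 subnet that gives each of two sync interfaces its own address: four addresses in total, of which the network and broadcast addresses are reserved, leaving exactly two usable hosts. A minimal sketch using Python's standard `ipaddress` module; the subnet `192.168.100.0/30` and the helper name `sync_member_addresses` are hypothetical.

```python
import ipaddress


def sync_member_addresses(cidr):
    """Return the first two usable host addresses of a point-to-point Sync subnet."""
    net = ipaddress.ip_network(cidr)  # strict by default: rejects host bits set, e.g. 10.0.0.1/30
    hosts = [str(h) for h in net.hosts()]
    if len(hosts) < 2:
        raise ValueError(f"{cidr} has fewer than two usable host addresses")
    return hosts[:2]


if __name__ == "__main__":
    net = ipaddress.ip_network("192.168.100.0/30")  # hypothetical Sync subnet
    print(net.num_addresses, net.network_address, net.broadcast_address)  # 4 192.168.100.0 192.168.100.3
    print(sync_member_addresses("192.168.100.0/30"))  # ['192.168.100.1', '192.168.100.2']
```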