Azure Scale Set Gateways Disappeared from Policy

We have deployed an Azure CloudGuard Scale Set and have an interesting issue: our gateways are no longer present in the console. I looked at the autoprovision log, and all I can see is that the gateways are stuck in the 'INITIALIZING' state. I know that if I re-image them they will come back online, but that also requires a slight rebuild of the gateway. Has anyone dealt with this, and does anyone know how to reconnect the gateway to the management server without a redeploy?

Log entries from the failing scale set during gateway sync:

2019-01-24 21:22:36,179 MONITOR INFO {firewall #1}: INITIALIZING

2019-01-24 21:22:36,203 MONITOR INFO {firewall #2}: INITIALIZING

Our working scale set looks like the following in the same log during gateway sync:

2019-01-24 21:22:36,203 MONITOR INFO updating: {firewall #1}

2019-01-24 21:22:36,204 MONITOR INFO {firewall #1}: COMPLETE

2019-01-24 21:22:36,204 MONITOR INFO {firewall #2}

2019-01-24 21:22:36,256 MONITOR INFO {firewall #2} : COMPLETE

There are no issues with autoprovision connectivity in general, and no changes on our Azure side. These gateways were in my console at one point and then just disappeared; I can't find out why, or how to get them back in without a redeploy. Thanks in advance!

** UPDATE **

It looks like during a gateway sync the instances in the scale set could not be found (even though they existed, and still do), so they were deleted from the management server. The firewalls still function, but I am unable to manage them or push policy to them. I'm not sure how to get them back into the policy without doing a re-image.
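To compare the failing and working excerpts across a whole autoprovision.elg rather than by eye, a small filter can list the gateways whose last reported state is still INITIALIZING. This is a minimal sketch, assuming every state line has the shape shown in the excerpts above (`MONITOR INFO {gateway}: STATE`); the function names are hypothetical, and this is not a Check Point tool.

```python
import re

# Assumed line shape, copied from the excerpts in this post:
#   2019-01-24 21:22:36,179 MONITOR INFO {firewall #1}: INITIALIZING
# Lines such as "MONITOR INFO updating: {firewall #1}" deliberately do not match.
STATE_RE = re.compile(r"MONITOR INFO \{(?P<gw>[^}]+)\}\s*:\s*(?P<state>[A-Z_]+)\s*$")


def last_states(lines):
    """Map each gateway to the last state reported for it in the log."""
    states = {}
    for line in lines:
        match = STATE_RE.search(line)
        if match:
            states[match.group("gw")] = match.group("state")
    return states


def stuck_gateways(lines, stuck_state="INITIALIZING"):
    """Return the gateways whose most recent reported state is stuck_state, sorted."""
    return sorted(gw for gw, state in last_states(lines).items() if state == stuck_state)
```

Because both functions only iterate over lines, an open file handle for the log can be passed directly, e.g. `stuck_gateways(open(path_to_elg))`.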

---

You might want to compare the tags assigned to these orphan gateways versus the tags assigned to working ones.

My guess (and it's only that) is that the orphan gateways don't have the correct tags assigned.

If the tags were removed for some reason, the CloudGuard Controller would remove the gateway objects from the policy.
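One way to do that comparison is to export each gateway's tags into a dictionary and diff them. This is a minimal sketch; how you export the tags (portal, CLI, or API) is left open, and the tag names and values in the example are illustrative placeholders, not a statement of which tags autoprovision actually requires.

```python
def diff_tags(reference, candidate):
    """Compare an orphan gateway's tags (candidate) with a working one's (reference).

    Both arguments are plain {tag_name: value} dictionaries.
    """
    missing = sorted(k for k in reference if k not in candidate)
    extra = sorted(k for k in candidate if k not in reference)
    changed = sorted(
        k for k in reference if k in candidate and reference[k] != candidate[k]
    )
    return {"missing": missing, "extra": extra, "changed": changed}


# Hypothetical tag names and values, for illustration only.
working = {"x-chkp-management": "mgmt1", "x-chkp-template": "azure-tmpl"}
orphan = {"x-chkp-management": "mgmt1", "x-chkp-template": "azure-tmpl-old"}
print(diff_tags(working, orphan))
# {'missing': [], 'extra': [], 'changed': ['x-chkp-template']}
```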

---

Dameon,

I can confirm the tags are correct and are not being modified or removed. I can re-image the instances in the scale set, but after a few days they disappear again, with no reason I can see in the autoprovision.elg logs. I have a support case open, but was curious whether anyone else has dealt with this and whether there is a way to get the gateway objects back into the policy without a re-image.

Thanks

---

Send me the TAC SR in a private message.

---

A SIC reset on one gateway should bring it back through the learning/adding process. Wait for the full learning process to finish, then do the next gateway in the AS group.
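The important part of that advice is the ordering: never start the next gateway until the previous one has been fully learned again. A minimal sketch of that loop follows; `reset_sic` and `is_learned` are hypothetical callables you would supply yourself (for example, wrappers around whatever manual or scripted steps you use), not Check Point APIs, and the timeout and poll interval are arbitrary defaults.

```python
import time


def roll_one_at_a_time(gateways, reset_sic, is_learned, timeout=1800, poll=30,
                       sleep=time.sleep, clock=time.monotonic):
    """Reset SIC on each gateway in turn, waiting for it to be learned before the next.

    reset_sic(gw) and is_learned(gw) are caller-supplied (hypothetical) hooks.
    Raises TimeoutError and stops the rollout if a gateway is not learned in time,
    so a problem with one gateway is never repeated on the remaining ones.
    """
    done = []
    for gw in gateways:
        reset_sic(gw)
        deadline = clock() + timeout
        while not is_learned(gw):
            if clock() >= deadline:
                raise TimeoutError(f"{gw} was not learned within {timeout}s; stopping")
            sleep(poll)
        done.append(gw)
    return done
```

The `sleep` and `clock` parameters exist only so the waiting logic can be exercised without real delays.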

---

Hi,

The issue you describe has many possible causes. Sometimes this happens due to connectivity issues, or due to invalid tags on the scale set.

First of all, I suggest checking that you have the latest version of the autoprovision add-on installed on your management server (following the instructions in the administration guide).

To understand the root cause of the problem, please open a support ticket with Check Point and share it with me so I can follow up.

Thanks,

Dmitry

---

I encountered the same issue.

The gateway disappeared from management after maintenance work on the Check Point scale set. Does anybody have an idea why this happened?

When checking autoprovision.elg, I saw the following:

2019-05-27 22:17:29,169 MONITOR INFO gateways (after):

2019-05-27 22:17:29,169 MONITOR INFO

2019-05-27 22:18:00,542 MONITOR INFO gateways (before):

2019-05-27 22:18:00,542 MONITOR INFO

2019-05-27 22:18:00,542 MONITOR INFO Azure_con: gateway sync

2019-05-27 22:18:03,531 MONITOR INFO no interface .....

---

I'm struggling with the exact same issue, only mine never showed up in the first place. We've validated the tags, and we're running the latest autoprovision.

I'm working with a Check Point cloud super guru and our diamond engineer is in on it as well.

We've tried setting the scale set to 0/0/0 (from 2/2/2) and the autoprovision service sees them go away and even come back when we go back to our normal settings. So we know there's communication. But they will not show up so we can manage them.

---

Do you have any insights from TAC about this issue?

---

I haven't been working with TAC on this case. We've been working with another Check Point employee.

We completely scrapped our JSON file and blew away our Azure gateways. We then recreated the autoprovision service configuration line by line.

When I ran "service autoprovision test" all of the tests passed (which, they had done previously). Only this time, I noticed that an API session ID had been generated, used and destroyed. That wasn't happening before.

The other issue was a goof on my part. It's a long story, but I didn't have routing set up so that our MDS could see the Azure networks. I fixed that, and things are better.

We're still researching why, despite our configuration, 2 of our 4 gateways are showing up in the wrong CMA. I want 2 gateways in CMA1 and the other 2 in CMA2. All 4 of them are in the first CMA. With our config, this shouldn't be happening.

---

I would recommend making sure you are running the latest autoprovision script before continuing. The current version is 438, which you can confirm by running "autoprov-cfg -v". If it's not the latest, you can upgrade with no downtime using the following commands:

- curl_cli -o autoprovision-addon.tgz https://s3.amazonaws.com/chkp-images/autoprovision-addon.tgz --cacert $CPDIR/conf/ca-bundle.crt

- tar zxfC autoprovision-addon.tgz /

- touch $FWDIR/scripts/autoprovision/need_dbload

- chkconfig --add autoprovision
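If you look after several management servers, the version check can be scripted. This sketch assumes that the build number is the first integer printed by `autoprov-cfg -v` (check what your server actually prints and adjust the pattern), and it hard-codes 438 only because that is the version quoted in this thread.

```python
import re
import subprocess

MIN_BUILD = 438  # "latest" at the time of this thread; newer builds will follow


def parse_build(text):
    """Return the first integer in the version output (assumed to be the build)."""
    match = re.search(r"\d+", text)
    if match is None:
        raise ValueError(f"no build number found in {text!r}")
    return int(match.group())


def needs_upgrade(version_output, minimum=MIN_BUILD):
    """True if the parsed build is older than `minimum`."""
    return parse_build(version_output) < minimum


def installed_build():
    """Run `autoprov-cfg -v` on the management server and parse its stdout."""
    result = subprocess.run(
        ["autoprov-cfg", "-v"], stdout=subprocess.PIPE, universal_newlines=True, check=True
    )
    return parse_build(result.stdout)
```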

In an MDS environment, make sure you use the "-cn <Domain>" flag for the proper CMA. If you want to run autoprovision across multiple CMAs, I would also suggest adding a new template for the additional domain. This also ensures the gateways are deployed in the correct location. This is all documented in SK120992.
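For the symptom described earlier in the thread (all four gateways landing in the first CMA instead of two per CMA), it can help to check the actual placement against the template-to-domain mapping you intended. A minimal sketch with hypothetical template, gateway, and domain names; collecting the actual placement from your MDS is left to you.

```python
def misplaced(gateways, template_domain):
    """Find gateways that are not in the domain their template is mapped to.

    gateways: iterable of (gateway_name, template_name, actual_domain) tuples
    template_domain: {template_name: expected_domain}
    Returns (gateway_name, expected_domain, actual_domain) for every mismatch.
    Gateways whose template has no mapping are skipped.
    """
    wrong = []
    for name, template, actual in gateways:
        expected = template_domain.get(template)
        if expected is not None and expected != actual:
            wrong.append((name, expected, actual))
    return wrong


# Hypothetical example: one template per CMA, but everything landed in CMA1.
observed = [
    ("gw1", "tmpl-cma1", "CMA1"),
    ("gw2", "tmpl-cma1", "CMA1"),
    ("gw3", "tmpl-cma2", "CMA1"),
    ("gw4", "tmpl-cma2", "CMA1"),
]
print(misplaced(observed, {"tmpl-cma1": "CMA1", "tmpl-cma2": "CMA2"}))
# [('gw3', 'CMA2', 'CMA1'), ('gw4', 'CMA2', 'CMA1')]
```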

As for the disappearing objects, many factors can cause this. An updated autoprovision release due in a few weeks will include configurable tolerance values that address this problem.
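The thread doesn't say how the upcoming tolerance setting works. As a generic illustration of the idea only (not Check Point's implementation), a controller can require a gateway to be missing for several consecutive syncs before deleting it, so that a single failed lookup, like the one in the original post, does not remove the object. The class and parameter names are hypothetical.

```python
class MissTolerance:
    """Delete a gateway only after it has been missing for `tolerance` consecutive syncs."""

    def __init__(self, tolerance=3):
        if tolerance < 1:
            raise ValueError("tolerance must be at least 1")
        self.tolerance = tolerance
        self.misses = {}  # gateway name -> consecutive syncs it was not found

    def sync(self, known, seen):
        """Process one sync cycle.

        known: gateways currently defined in management
        seen: instances the cloud lookup actually returned this cycle
        Returns the gateways that have now hit the tolerance and may be deleted.
        """
        to_delete = set()
        for gw in known:
            if gw in seen:
                self.misses.pop(gw, None)  # found again: reset its counter
            else:
                self.misses[gw] = self.misses.get(gw, 0) + 1
                if self.misses[gw] >= self.tolerance:
                    to_delete.add(gw)
        # Drop counters for gateways that are no longer defined at all.
        for gw in list(self.misses):
            if gw not in known:
                del self.misses[gw]
        return to_delete
```

With `tolerance=1` this degenerates to deleting on the first miss, which appears to match the behavior described at the start of the thread.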

Thank you,

Dan Morris, Technology Leader, Ottawa Technical Assistance Center

---

Dan,

To confirm, the latest version of autoprov is v438? We just installed this a couple weeks ago and we're on v428.

We do have it working-ish now. Long story short, there was a routing issue that was preventing the MDS from seeing Azure.

We (we and a Check Point resource) are currently reviewing why both pairs of gateways are showing up in the same CMA instead of the CMA assigned to each in the JSON file. We think it was an issue in how we ordered the commands. We are using templates.

We're also trying to determine whether we can get rid of the front-end load balancer. One pair of gateways is configured to handle traffic via ExpressRoute, and the template that deploys the VMSS instances assumes you're going to be doing external work, so it builds a front-end load balancer with an external IP.

We've been working from SK120992. But, frankly, that document leaves a lot to be desired in the steps towards making this stuff work.

---

Yes, the latest is v438. A new version will be coming out shortly with additional tolerance values that will help if communication issues are causing gateways to disappear.

As for removing the ELB, it is required to ensure that the VMSS instances are operational and able to pass traffic. There are health checks on both the front-end and back-end sides of the gateways. You can deploy a VMSS with no front-end public IP (PIP), but a load balancer will still be deployed because of this requirement.

Thank you,

Dan

---

How do you make sure that your internal resources don't have any external access then?

Can we remove the external IP from the external LB?

---

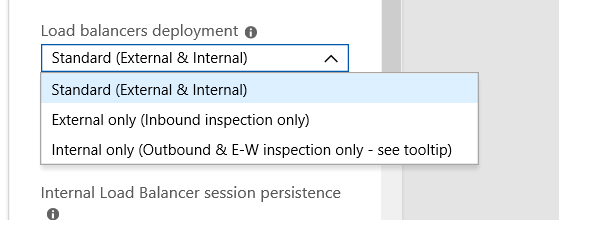

When deploying from the Azure Portal, you can choose which load balancers to use:

- Standard (External & Internal)

- External only

- Internal only

---

Thanks.

We need external & internal interfaces but with private addresses.

The internal-only option does not look like it will work, because it is for outbound traffic with E&W (east-west) filtering. ExpressRoute will be on our external interface, and the Azure infrastructure will be on the internal interface.

I think what we're going to end up doing is testing removing the external IP from the external LB and sending all traffic to the internal LB. Not ideal, but I'm not sure how else to deal with it.

---

Hi,

From my understanding, "external" and "internal" are a bit relative in the (Azure) cloud.

As you wrote, you should be able to define a UDR for the ExpressRoute subnet that uses the VIP of the internal LB as the next hop for the Azure networks (and vice versa). Depending on the routing on the gateways, only the internal interface of the firewall is actually used (this would be the case if you route your Azure networks out of the internal interface).

An external LB is only needed if you need to allow access from the Internet (via a public VIP on the external LB).

BR

Matthias
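The UDR suggestion above relies on ordinary longest-prefix-match routing: the route table on the ExpressRoute side sends the Azure prefixes to the internal load balancer's frontend IP, and other destinations follow less specific routes. A minimal sketch of that selection; all prefixes, the VIP 10.0.2.4, and the "system route" label are hypothetical, and the sketch ignores Azure's tie-breaking between user-defined, BGP, and system routes that share an identical prefix.

```python
import ipaddress


def next_hop(routes, destination):
    """Return the next hop of the most specific route containing destination, else None."""
    addr = ipaddress.ip_address(destination)
    best_net, best_hop = None, None
    for prefix, hop in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_hop = net, hop
    return best_hop


# Hypothetical route table on the ExpressRoute-facing subnet.
routes = [
    ("10.20.0.0/16", "10.0.2.4"),  # Azure workloads -> internal LB frontend (VIP)
    ("10.0.0.0/8", "system route"),  # less specific: keeps its normal path
]
print(next_hop(routes, "10.20.5.9"))  # 10.0.2.4
```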

---

@Matthias_Haas - when we go to rebuild using the internal option we are prompted with a warning that if we want outbound inspection, which we do, we must add an external load balancer and a public IP.

From the point of view of this set of firewalls, our on-prem corporate network is what we consider the outbound side. We need full inspection capabilities.

Just not with a public IP address. Why is this limitation there? It seems pretty dumb.

---

Dedicated management plane (though I think this one is coming).