External check to determine active/standby state of cluster members?

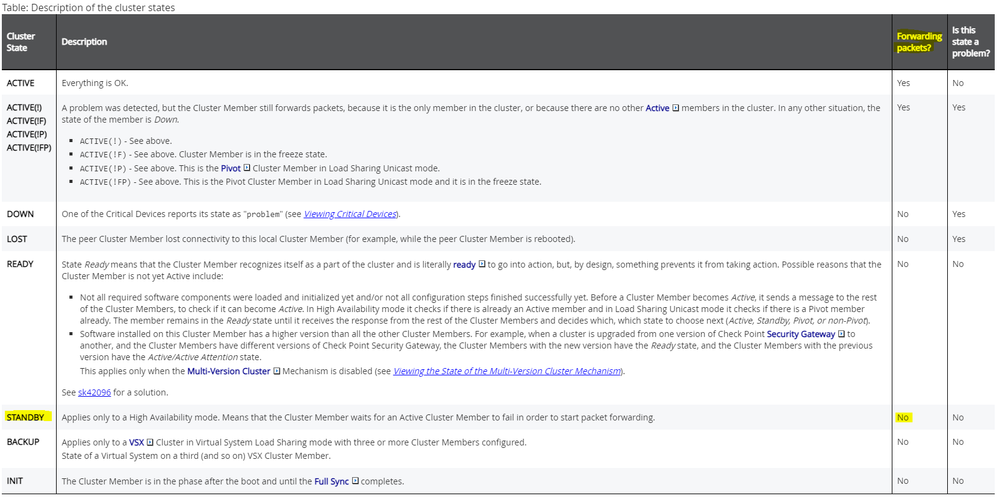

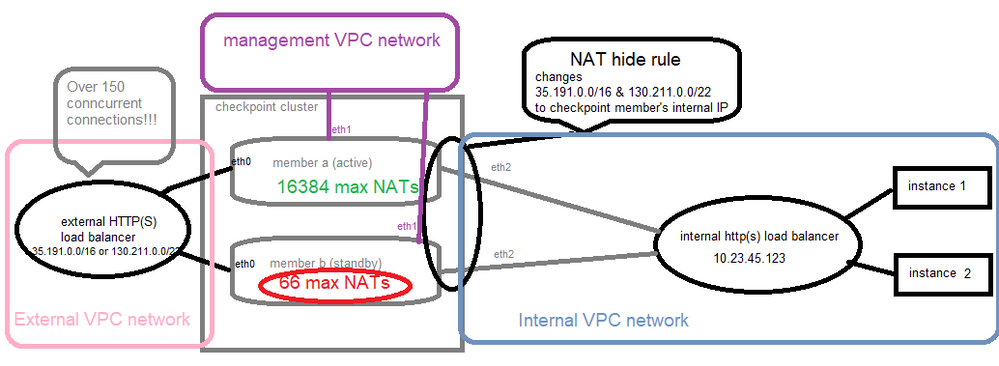

Background: We use Google Cloud Global HTTP(S) load balancers in front of a CheckPoint CloudGuard HA cluster to handle SSL termination and give each service a different external IP address. The CheckPoint cluster members are the load balancer's backend and we're left with a fundamental problem:

- The load balancer does a basic TCP check on port 443 to detect a down member.

- Since both members pass the check regardless of active/standby state, the traffic is distributed 50/50 assuming both are up.

- The max NAT sessions are 16384 on the active member versus 66 on the standby, which is roughly a 99/1 capacity split, not 50/50.
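As a rough check on that last figure, here is a small sketch of the arithmetic. It assumes 16384 is the NAT session limit seen on the active member and 66 the limit seen on the standby; `nat_share` is a made-up helper name, not a Check Point command.

```bash
#!/bin/bash
# Sketch: percentage of the combined NAT session capacity held by each member.
# nat_share is a hypothetical helper name used only for illustration.
nat_share() {
    # $1 = sessions available on the active, $2 = sessions available on the standby
    awk -v a="$1" -v s="$2" 'BEGIN { t = a + s; printf "%.1f %.1f\n", 100 * a / t, 100 * s / t }'
}
```

With the numbers from the post, `nat_share 16384 66` prints `99.6 0.4`, so an even 50/50 split from the load balancer hands the standby far more sessions than it can NAT.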

That NAT issue is described here and I still don't have a fix for it. So I'm thinking if I can somehow just get the traffic to go 100/0, this fixes the issue.

Is there a way to externally check the active/standby status? Perhaps there's a service that runs only on the active and is shut down when it goes standby?

Something seems off with this deployment, would suggest investigating the details/specifics further with TAC.

Also tagging @Shay_Levin in case he has any ideas

Ahh yes, the old "surely, this problem is the customer's fault" response. Good ol' CheckPoint, lol.

I'm currently on my 3rd support case. The NAT sessions issue was identified over 9 months ago:

There's been a series of "bug fixes" for this in R80.40, but none that address the root issue

That's not the intent of my response. Actually it seems we have a mix of HA & Active/Active architectures in play.

We typically have different templates depending on HA or Active/Active (Autoscale) requirements per:

CloudGuard Network High Availability R80.30 and above for Google Cloud Platform Administration Guide

CloudGuard GCP Auto Scaling Managed Instance Group (MIG) for R80.30 and Higher Administration Guide

If the Cluster is a true HA cluster only the "VIP" (Cluster's External IP) should be a backend target of the LB, not the individual gateway / cluster member IPs (standby won't process traffic).

Additionally (but not so relevant to a load-balancer discussion), with normal ClusterXL HA gateways you could query via SNMP to determine the active state, i.e.

The OID 1.3.6.1.4.1.2620.1.5.6.0

The expected output is:

From the Active member:

SNMPv2-SMI::enterprises.2620.1.5.6.0 = STRING: "Active"

From the Standby member:

SNMPv2-SMI::enterprises.2620.1.5.6.0 = STRING: "Standby"
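For anyone wanting to script against that OID, here is a minimal sketch that pulls the state string out of a reply line in the format shown above. It assumes the net-snmp `snmpget` client is installed on the polling host and that SNMP v2c is enabled on the gateway; the function names, the member IP and the community string are all placeholders.

```bash
#!/bin/bash
# Sketch only: extract the cluster state from one line of snmpget output.
# The line format is the one quoted in the post above.
parse_cluster_state() {
    # Keep whatever sits between the double quotes after "STRING:"
    printf '%s\n' "$1" | sed -n 's/.*STRING: "\(.*\)".*/\1/p'
}

# Hypothetical wrapper: $1 is a member IP and $2 an SNMP community string,
# both placeholders that depend on your environment.
query_cluster_state() {
    parse_cluster_state "$(snmpget -v2c -c "$2" "$1" 1.3.6.1.4.1.2620.1.5.6.0)"
}
```

Keeping the parsing separate from the SNMP call means the string handling can be checked against the sample lines without touching a live gateway.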

We typically have different templates depending on HA or Active/Active (Autoscale) requirements per:

It's a standard active/standby HA deployment (no auto-scaling or active/active).

If the Cluster is a true HA cluster only the "VIP" should be a backend target of the LB, not the individual gateway / cluster member IPs

Not quite following here. I know in AWS, you can set an IP address for the backend server. But as far as I know, GCP only allows instance groups, target pools, or NEGs. In all cases, these would reference instance vNICs, not just an IP address.

(standby won't process traffic).

I'd have to disagree with that, based on experience. The standby will load the same policy as the active and run all services. It definitely processes traffic just fine as long as asymmetric routing is accounted for.

Additionally with normal cluster XL HA gateways you could query via SNMP to determine the active state i.e.

That's good to know, but GCP load balancers definitely don't support use of SNMP polls for healthchecks. Perhaps I could create a Python script via 1-minute cron job to do the query and then update the LB backend accordingly, but that's a really ugly hack.
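The decision half of that cron idea could look something like the sketch below. It only picks which members should stay in the backend from a list of polled states; the `name=State` argument format and the function name are invented for illustration, and the step that actually updates the load balancer is left out because it depends on how the backend is built.

```bash
#!/bin/bash
# Sketch: print the names of members whose polled state is "Active".
# Arguments are hypothetical name=State pairs, e.g. member-a=Active.
select_active_members() {
    for pair in "$@"; do
        name="${pair%%=*}"    # text before the first "="
        state="${pair#*=}"    # text after the first "="
        if [ "$state" = "Active" ]; then
            printf '%s\n' "$name"
        fi
    done
}
```

For example, `select_active_members member-a=Active member-b=Standby` prints `member-a`.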

The admin guide for HA talks specifically about directing traffic for the Cluster's External IP towards the "Active" member, not both.

Therefore the "standby" node in HA shouldn't receive traffic; load-sharing is not supported in this mode.

Your load-balancer pool configuration should be such that it takes this into account.

The admin guide for HA talks specifically about directing traffic for the Cluster's External IP towards the "Active" member, not both...Your load-balancer pool configuration should be such that it takes this into account.

Again, this is not possible with GCP load balancers, because there's no way to direct traffic to a specific IP address. If CheckPoint is expecting this to work, they need to submit a feature request to Google.

FortiGate doesn't have this problem because the two members act in very strict active/standby mode (i.e. the data plane on the standby is essentially shut down and won't even respond with a SYN+ACK)

With using TCP/443 for the health-probe from the LB, my assumption is it's likely being accepted by implied rules for multi-portal infrastructure including GAiA web portal etc.

In the firewall logs what do you see as the destination for the TCP/443 health probes coming from the LB?

There is a way to influence the implied rule behavior via kernel parameter (sk105740) but whether this will assist may depend on the target of the health probe and the ability to create specific rules to handle this traffic in a more granular way.

Otherwise the standby member of a ClusterXL cluster shouldn't forward traffic (sk63942), and if you see something contrary to this it's definitely something for TAC.

the standby member of a clusterXL cluster shouldn't forward traffic

Here's what a non-443 TCP healthcheck looks like:

[Expert@xxx-member-b:0]# tcpdump -i eth0 -n port 29413

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

21:27:07.494666 IP 35.191.11.202.59692 > 100.64.100.29413: Flags [S], seq 2790787222, win 65535, options [mss 1420,sackOK,TS val 2593715553 ecr 0,nop,wscale 8], length 0

21:27:07.495411 IP 100.64.100.29413 > 35.191.11.202.59692: Flags [S.], seq 3524699649, ack 2790787223, win 65535, options [mss 1420,nop,nop,sackOK,nop,wscale 6,nop,nop,TS val 616471966 ecr 2593715553], length 0

21:27:07.495563 IP 35.191.11.202.59692 > 100.64.100.29413: Flags [.], ack 1, win 256, options [nop,nop,TS val 2593715554 ecr 616471966], length 0

21:27:07.495584 IP 35.191.11.202.59692 > 100.64.100.29413: Flags [F.], seq 1, ack 1, win 256, options [nop,nop,TS val 2593715554 ecr 616471966], length 0

21:27:07.495766 IP 100.64.100.29413 > 35.191.11.202.59692: Flags [.], ack 1, win 4096, options [nop,nop,TS val 616471966 ecr 2593715554], length 0

21:27:07.495779 IP 100.64.100.29413 > 35.191.11.202.59692: Flags [F.], seq 1, ack 2, win 4096, options [nop,nop,TS val 616471966 ecr 2593715554], length 0

21:27:07.496021 IP 35.191.11.202.59692 > 100.64.100.29413: Flags [.], ack 2, win 256, options [nop,nop,TS val 2593715555 ecr 616471966], length 0

^C

7 packets captured

7 packets received by filter

0 packets dropped by kernel

[Expert@xxx-member-b:0]# cphaprob state

ID Unique Address Assigned Load State Name

1 10.12.34.68 100% ACTIVE xxx-01

2 (local) 10.12.34.69 0% STANDBY xxx-02
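To check a longer capture for the same thing, a small sketch like this reports whether any packet in tcpdump's text output is a SYN+ACK, which tcpdump prints as `Flags [S.]`. The function name is made up, and it assumes the default tcpdump text format shown above.

```bash
#!/bin/bash
# Sketch: succeed if the tcpdump text on stdin contains a SYN+ACK ("Flags [S.]"),
# meaning the member answered the probe's SYN. member_answered is a hypothetical name.
member_answered() {
    grep -q 'Flags \[S\.\]'
}
```

Usage would be something like `tcpdump -i eth0 -n port 29413 -c 20 | member_answered && echo "member completes the handshake"`, run on the member under test.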

From what I can see you will need to use HTTP LB on TCP port 8117 for the health probe with the supporting configuration per: sk114577 (Section 7)

Yes, but not HTTP; it's TCP 8117.

Yeah that was a reference to the type of LB, edited for clarity.

hello,

Can I ask why you have traffic routed specifically through the secondary appliance?

Shouldn't you route through the HSRP IP of the cluster?

Ty,

Google Clusters don't have an Internal VIP address. All the interfaces are configured as private.

So we can use an LB to route traffic to the ACTIVE member in the Cluster.

Understood, and initially you said "We use Google Cloud Global HTTP(S) load balancers in front of a CheckPoint CloudGuard HA cluster to handle SSL" and that the "load balancer does a basic TCP check on port 443 to detect a down member".

So you are stating that the Google Cloud load balancer checks the HTTPS response from each CheckPoint cluster member? Why?

I was expecting that the Google Cloud load balancer would address something behind the CheckPoint cluster, so your routing from the Google LB to the devices behind the CKP cluster should go through the CKP cluster VIP.

In our case, for example, our cluster on the LAN side has 10.xx.3.2 (on CKP node A) and 10.xx.3.2 (on CKP node B), and the HA IP is 10.xx.3.1 (on the active CKP node). So we route internally towards 10.x.3.1 and we don't care which node is active or standby.

So don't you have a similar situation? Can you sketch something in Paint?

ty,

@Sorin_Gogean wrote: Understood, and initially you said "We use Google Cloud Global HTTP(S) load balancers in front of a CheckPoint CloudGuard HA cluster to handle SSL" and that the "load balancer does a basic TCP check on port 443 to detect a down member".

So you are stating that Google Cloud Load Balancer checks the HTTPS response from each CheckPoint Cluster Member ? Why ???

Ummm...TCP healthchecks work at Layer 4/5 of the OSI model. They don't care about the HTTPS response. But the standby CheckPoint responds to either type, on any port, so it's a moot point anyway.

Here's a diagram of the setup. Note that the standby receiving traffic wouldn't be an issue if not for the max of 66 NAT connections

Have you reviewed / tried TCP 8117 with the supporting config as suggested above?

@Chris_Atkinson wrote:

Have you reviewed / tried TCP 8117 with the supporting config as suggested above?

It's my understanding this only works on R81.10. We're on R80.40, and in cloud deployments, the upgrade to R81.10 is non-trivial.

Even under R81.10, I'm really not down with the whole tweaking kernel parameters thing. There's too much of a risk of that setting being reset upon subsequent upgrades.

Hi,

The GCP HA solution was released with a reference architecture describing Site-to-Site VPN use case and egress/E-W inspection via routing rule updates to forward traffic to the Active member, and VIP for ingress. A Load Balancer use case for ingress/egress was not designed nor certified.

For ingress inspection we recommend using the auto-scaling MIG solution.

Having said that, as Chris mentioned, with R81.10 we have firewall-kernel code that replies to GCP load balancer health probes - it is in use by the auto-scaling MIG solution from R81.10. On HA, only the Active will respond to the probes on port 8117.

For the 2023 roadmap, we are evaluating the possibility of updating the HA architecture to rely on load balancers for ingress/egress, or officially supporting an ingress architecture with load balancers. In that case, the health probe will automatically be configured during deployment (as happens on other platforms).

Thanks

For ingress inspection we recommend using the auto-scaling MIG solution.

We have a requirement to do IPSec VPN termination on the same device, so regular HA is required.

hello @johnnyringo ,

Thank you for the drawing; it clarifies some of our guesses.

As others and I were saying, this looks like a design problem (we might be wrong). Because you connected the external load balancer directly to each CheckPoint cluster node individually, and didn't address the routing to the ETH0 HA IP and ETH1 HA IP, you get this behavior.

You could change the cluster setup from HA (Active/Standby) to Active/Active, so that both appliances pass traffic, or, as @Bob_Zimmerman recommended, have both CheckPoint nodes act separately.

By doing that, the issue you see on the standby CKP node should not happen anymore, as each appliance will behave as if it's alone and unaware of the other node.

We had an instance in Azure with 2 appliances in HA, with both WAN and LAN configured with an HA IP address and everything pointing to the HA IP, and I don't remember seeing traffic issues in the 3-4 years we had that in place.

ty,

PS: The same way you have your external LB and internal LB with traffic passing through a firewall cluster, we have F5 appliances in our datacenters, where an external VIP (hosted on the external LB) points to an internal VIP (hosted on the internal LB) that eventually points to internal nodes, for example for Exchange, if you want a service for reference.

like others and I were stating, it seems a design problem (we might be wrong)

No, you guys are right. Every time a customer has a problem operating CheckPoints in public cloud, it's gotta be a "bad deployment" or "bad design" or "bad topology". It couldn't POSSIBLY be a bug on the CheckPoint right? Because then you'd have to fix it. And that's a lot of work, especially on versions like R80.40 that are supported for another 2 years. Jeez.

Though we might not like them I think the answers/options are contained in this thread i.e.

1. Explore the standby cluster member behavior further with TAC

2. Attempt to block the health probes to the standby via FW policy.

3. Upgrade to the version where this is documented as a supported/certified topology

1. Explore the standby cluster member behavior further with TAC

Already done via multiple support cases, including escalation to Tier 3 and Diamond support. If anyone can explain why the standby member only goes to 66 NAT connections vs. the expected 16384, I'll send them a nice thank you note and PayPal them $100.

2. Attempt to block the health probes to the standby via FW policy.

Firewall policies have no way of determining which member is standby, so this is not possible.

3. Upgrade to the version where this is documented as a supported/certified topology

We're already on R80.40, which is supported for another 2 years. The R81.10 port 8117 "fix" required kernel tuning, so even if we upgraded to that version, I'd be hesitant to use it.

I'll present 2 additional options:

4. Replace Checkpoint with another vendor. For example, Fortigates do not accept connections to the standby member, thus avoiding the problem

5. Configure the LB backend service to use Internet Network Endpoint Groups rather than Instance Groups or Target Pools. With Internet NEGs, the target is simply an IP address / port combination, so traffic always goes to primary-cluster-address and thus never hits the standby. The big caveat of Internet NEGs is they're only supported on Classic Load balancer. Our Google Account team recommended migrating off Classic LBs last year following the November 16th 2021 outage, so we'd have to postpone that migration indefinitely. I'm not crazy about this option, but it's the path of least resistance and is the best short-term fix.

Not natively, but perhaps via a Dynamic object that resolves the standby member's IP addresses via a user-defined local script/job.

Dynamic objects only have local significance, so if the member is Active the object is null, and if it's Standby the object is populated and matches a reference in a drop rule?

Some examples of built-in dynamic objects include LocalMachine and LocalMachine_All_Interfaces; others are simply a list of locally resolved IPs.

If you want, PM me with the list of SRs and I'll have a chat with your SE, but the above should be something PS could help you explore if needed.

Yeah, I'm already using Dynamic Objects to source NAT the 35.191.0.0/16 IPs to the local member's internal NIC IP (CheckPoint oddly makes "LocalGatewayInternal" the main interface, which seems like a bug to me since "LocalGateway" already does that, but...whatever).

I'm not quite following how the Dynamic Objects would get updated during a failover event. Like you say, their values are local to the gateway. In any case, I've started to migrate the backend to Internet NEGs and it's working fine.

@johnnyringo wrote:

That's good to know, but GCP load balancers definitely don't support use of SNMP polls for healthchecks. Perhaps I could create a Python script via 1-minute cron job to do the query and then update the LB backend accordingly, but that's a really ugly hack.

I've got the same configuration as JohnnyRingo and I did resort to a hacky cron job (running R80.40 as well) running on both active and standby nodes (simultaneously)

- Followed sk77300 - How to create a scheduled job (cron job) in Gaia with frequency of less than a day

- Used a shell script:

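    #!/bin/bash -f
    # Load the Check Point environment so cphaprob is available when run from cron
    source /opt/CPshrd-R80.40/tmp/.CPprofile.sh

    # Column 5 of the "(local)" row in `cphaprob state` is this member's state
    CLUST_STATE=$(cphaprob state | grep local | awk '{print $5;}')

    if [[ "${CLUST_STATE}" == "ACTIVE" ]]; then
        if [[ ! -f /rest_api/docs/templates/CLUST_ACTIVE.txt ]]; then
            # File does not exist, need to create it
            touch /rest_api/docs/templates/CLUST_ACTIVE.txt
        fi
    else
        # Not active: remove the trigger file so the health check fails
        rm -f /rest_api/docs/templates/CLUST_ACTIVE.txt 2>/dev/null
    fi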

which writes a trigger file which is then exposed via https://<CLUSTERNODE>/gaia_docs/CLUST_ACTIVE.txt

- Required a restart of the gaia_docs service, based on sk97638 - Check Point Processes and Daemons

- tellpm process:rest_api_docs; tellpm process:rest_api_docs t

- Used /gaia_docs/ because I assumed that it was not going to be impacted by any JHF or management functionality.

- Created healthcheck at Google Cloud layer

- Healthy = HTTP 200 OK, file was found (even with zero byte content)

- Unhealthy = HTTP 404 Not Found, file was not found

- Created Access Policy in Gateway to allow traffic from GCP Healthcheck IP range

- In my policy, I am using dynamic object (LocalGatewayExternal) for destination
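To sanity-check a member by hand before pointing the load balancer at it, something along these lines might help. It is only a sketch: the function names are made up, the host argument is a placeholder, and it treats anything other than HTTP 200 as unhealthy, which is my reading of the healthy/unhealthy rule above.

```bash
#!/bin/bash
# Sketch: classify an HTTP status code the way the health check above is described.
health_from_status() {
    if [ "$1" = "200" ]; then
        echo healthy
    else
        echo unhealthy
    fi
}

# Hypothetical wrapper; $1 is a placeholder for a cluster member's address.
# curl flags: -s silent, -k skip certificate validation, -o discard the body,
# -w print only the HTTP status code.
probe_member() {
    health_from_status "$(curl -sk -o /dev/null -w '%{http_code}' "https://$1/gaia_docs/CLUST_ACTIVE.txt")"
}
```

Running `probe_member` against each member in turn should report `healthy` for the active node only, once the cron job has had a chance to run.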

During testing, the cluster switched nodes, the external IP address was moved to the second node, within 60 seconds crontab executes, detects cluster state, updates the trigger file and the LB sees the change from healthy to unhealthy and vice versa. In my testing, I never saw failovers take longer than 75 seconds (both from Check Point and from Google LB perspective) and usually were within 45-55 seconds.
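A back-of-the-envelope bound on those timings can be sketched as the cron period plus the time the load balancer needs to see enough failed probes. The check interval and unhealthy threshold are not stated in the post, so the values in the usage example are assumptions, and the function name is invented for illustration.

```bash
#!/bin/bash
# Sketch: rough worst-case seconds until the LB marks the old active unhealthy.
# $1 = cron period, $2 = health check interval, $3 = unhealthy threshold
# (consecutive failed probes). Probe timeouts are ignored for simplicity.
worst_case_failover() {
    echo $(( $1 + $2 * $3 ))
}
```

For example, with a 60-second cron job and an assumed 5-second check interval and unhealthy threshold of 2, `worst_case_failover 60 5 2` prints `70`, which is in the same range as the failover times observed in testing.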

This has been in place on four different clusters in two different GCP tenants/environments, so it does work. But ideally, I would rather have a supported solution. Maybe one day in R81.xx land...

At a minimum, the questions you're asking indicate you have a bad topology, which should be redesigned. HA is really bad for availability in cloud environments. This may sound odd, but stick with me.

Deploying an HA firewall cluster protects you from a firewall instance failing at the cost of a lot more complexity (as demonstrated by this thread). Rather than making redundant paths into a given instance of your application, availability is best achieved by making many instances of your application which each break totally, but discretely. If you have 20 instances of the application running, who cares if one instance's single firewall goes down?

Now instead of one firewall going down, consider an application server going down. Or a load balancer. Or a provider zone or even region (not like it's especially rare for cloud provider datacenters to crash hard). Deploying more instances of the application distributed between multiple regions protects you from all of these possible failures. The increase in complexity is marginal at best, since each instance is functionally identical.

In-place upgrades are a bad idea for similar reasons. Each instance should be built such that it lives its whole life running a constant software version. New software version? Deploy a new instance of the whole application. Doing it this way helps keep your deployments perfectly reproducible, which helps keep the application's behavior predictable.

If your application isn't built in a way which can take advantage of this, then you probably shouldn't be running it in GCP. You're just paying a ton of extra money to run your software on Sundar Pichai's computer instead of a computer you own.

you probably shouldn't be running it in GCP. You're just paying a ton of extra money to run your software on Sundar Pichai's computer instead of a computer you own.

We're a 30,000 employee company that's 3 years in on a 10 year contract with Google Cloud, but thanks for the brilliant advice.

@Shay_Levin can you advise please?