
High dispatcher cpu

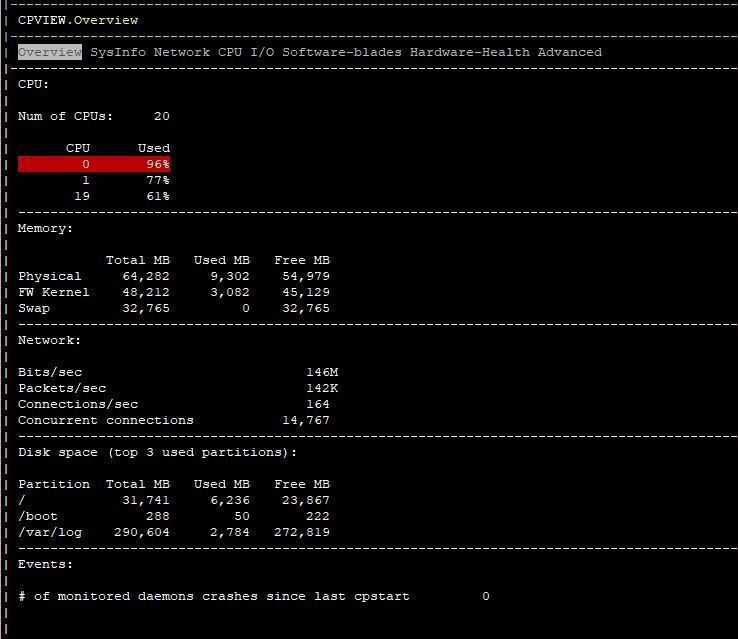

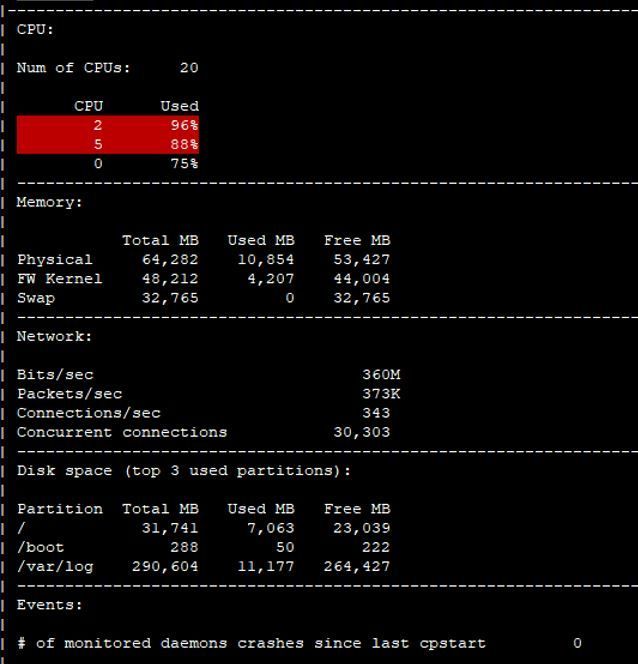

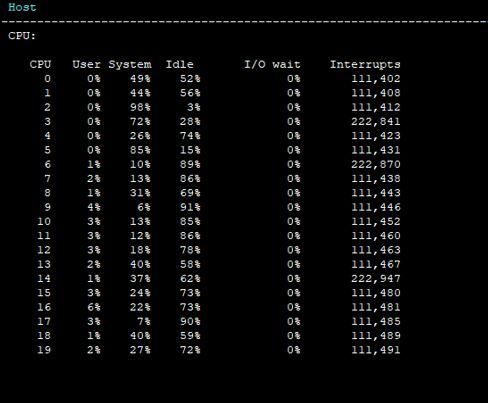

I've had ongoing issues with high dispatcher core usage since moving to R80.x. We have 21800 gateways. We've had multiple TAC cases; they had us add a 3rd dispatcher core and set the affinity for the cores manually, but I still consistently see the 10Gb interfaces spike the dispatcher CPUs in what should be low-utilization situations for this gateway model. We are on R80.20 Jumbo 87. The gateways were freshly reinstalled directly on R80.20 after continuous crashes across both cluster members in the last few days (we will not be upgrading the cluster in place again). Priority queues are off per TAC.

Any ideas would be appreciated. I've put some info below for context.

CPU 0: eth1-02 eth1-04

CPU 1: eth3-02 eth1-01 eth1-03

CPU 2: eth3-01 eth3-03 eth3-04 Mgmt

CPU 3: fw_16

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 4: fw_15

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 5: fw_14

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 6: fw_13

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 7: fw_12

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 8: fw_11

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 9: fw_10

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 10: fw_9

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 11: fw_8

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 12: fw_7

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 13: fw_6

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 14: fw_5

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 15: fw_4

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 16: fw_3

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 17: fw_2

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 18: fw_1

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 19: fw_0

in.acapd fgd50 lpd cp_file_convertd rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

All: scanengine_b

sim affinity -l

Mgmt : 2

eth1-01 : 1

eth1-02 : 0

eth1-03 : 1

eth1-04 : 0

eth3-01 : 2

eth3-02 : 1

eth3-03 : 2

eth3-04 : 2

fwaccel stats -s

Accelerated conns/Total conns : 5632/11726 (48%)

Accelerated pkts/Total pkts : 87217254/91349341 (95%)

F2Fed pkts/Total pkts : 4132087/91349341 (4%)

F2V pkts/Total pkts : 349157/91349341 (0%)

CPASXL pkts/Total pkts : 78606120/91349341 (86%)

PSLXL pkts/Total pkts : 5653296/91349341 (6%)

CPAS inline pkts/Total pkts : 0/91349341 (0%)

PSL inline pkts/Total pkts : 0/91349341 (0%)

QOS inbound pkts/Total pkts : 89478397/91349341 (97%)

QOS outbound pkts/Total pkts : 90834289/91349341 (99%)

Corrected pkts/Total pkts : 0/91349341 (0%)

fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 19 | 927 | 1104

1 | Yes | 18 | 805 | 943

2 | Yes | 17 | 781 | 1606

3 | Yes | 16 | 814 | 1217

4 | Yes | 15 | 944 | 1722

5 | Yes | 14 | 895 | 1152

6 | Yes | 13 | 1102 | 1680

7 | Yes | 12 | 781 | 1674

8 | Yes | 11 | 1063 | 1063

9 | Yes | 10 | 741 | 1024

10 | Yes | 9 | 1002 | 1053

11 | Yes | 8 | 810 | 1016

12 | Yes | 7 | 799 | 964

13 | Yes | 6 | 831 | 1837

14 | Yes | 5 | 833 | 1017

15 | Yes | 4 | 841 | 1087

16 | Yes | 3 | 862 | 1329

free -m

total used free shared buffers cached

Mem: 64282 12574 51708 0 105 3124

-/+ buffers/cache: 9344 54938

Swap: 32765 0 32765

11 Replies


As a first step, use Multi-Queue.

It is an acceleration feature that lets you assign more than one packet queue and CPU to an interface.

When most of the traffic is accelerated by the SecureXL, the CPU load from the CoreXL SND instances can be very high, while the CPU load from the CoreXL FW instances can be very low. This is an inefficient utilization of CPU capacity.

By default, the number of CPU cores allocated to CoreXL SND instances is limited by the number of network interfaces that handle the traffic. Because each interface has one traffic queue, only one CPU core can handle each traffic queue at a time. This means that each CoreXL SND instance can use only one CPU core at a time for each network interface.

Check Point Multi-Queue lets you configure more than one traffic queue for each network interface. For each interface, you can use more than one CPU core (that runs CoreXL SND) for traffic acceleration. This balances the load efficiently between the CPU cores that run the CoreXL SND instances and the CPU cores that run CoreXL FW instances.
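To make the single-queue bottleneck concrete, here is a toy model in Python. It is not Check Point's implementation: it simply hashes each flow's 5-tuple into one of N receive queues, with one SND core assumed per queue. The hash (`zlib.crc32`), the flow values, and the function names are all assumptions for illustration; real NICs use their own receive-side scaling hash.

```python
import zlib
from collections import Counter

def queue_for_flow(flow, num_queues):
    """Map a 5-tuple to a receive queue (toy stand-in for the NIC's hashing)."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_queues

def packets_per_queue(flows, num_queues):
    """Count packets landing on each queue; one SND core is assumed per queue."""
    load = Counter({q: 0 for q in range(num_queues)})
    for flow, packets in flows:
        load[queue_for_flow(flow, num_queues)] += packets
    return dict(load)

# Hypothetical flows: ((src_ip, dst_ip, src_port, dst_port, proto), packet_count)
flows = [(("10.0.0.%d" % i, "192.0.2.1", 40000 + i, 443, "tcp"), 1000)
         for i in range(64)]

single = packets_per_queue(flows, 1)  # one queue: a single core takes every packet
multi = packets_per_queue(flows, 4)   # four queues: packets are spread by flow hash
print(single)
print(multi)
```

With one queue, every packet of the interface lands on the same core; with several queues, the same traffic is split per flow, which is the effect Multi-Queue is after.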

For more, see:

R80.x Performance Tuning Tip – Multi Queue

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips


Please note the limitations (sk93036) that apply in some scenarios if your system uses SAM cards:

- No dynamic dispatcher (sk105261)

- No Multi-Queue (sk94267)

- No HW-assisted acceleration (sk68701)

CCSM R77/R80/ELITE


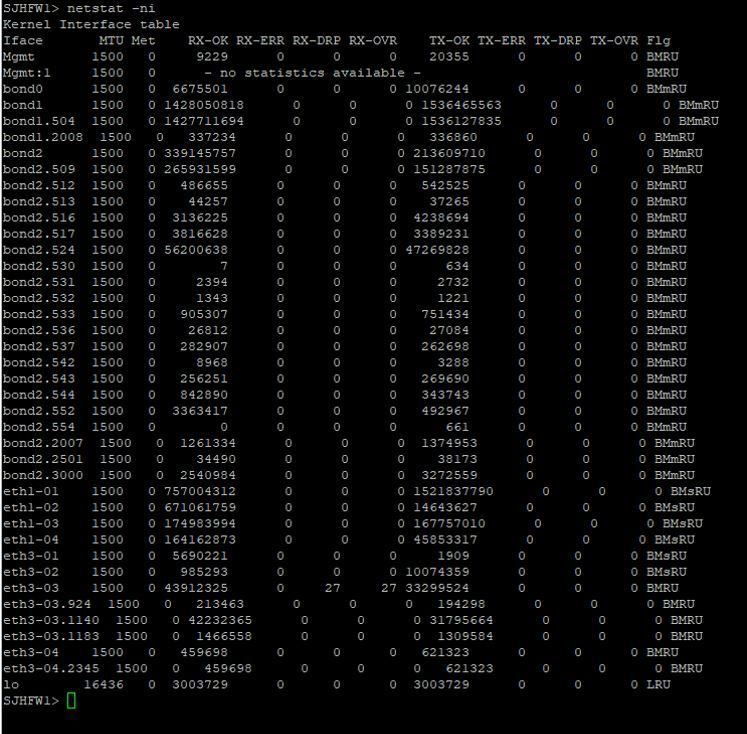

Given the high amount of fully-accelerated traffic (which is handled on the dispatcher cores), you need more of them. A lot more of them in your case, as most of your traffic is now being processed on the dispatchers as compared to R77.30 due to the changes in SecureXL. High dispatcher CPU usage is not necessarily a problem, unless the RX-DRP rate displayed by netstat -ni is > 0.1% on one or more interfaces.

Assuming that is the case which is likely, here is what I would recommend:

0) SMT/Hyperthreading does not appear to be enabled, leave it off for now.

1) Remove the manual interface affinity, setting this is a very old recommendation that overcame some problems with automatic interface affinity in the initial minor releases of R77.XX. No longer necessary on R77.30+ and is likely to just cause further problems; let automatic interface affinity do its thing.

2) Reduce number of kernel instances from 17 to 14 which will give a 6/14 split. Assigning an odd number of SND/IRQ cores (dispatchers) is generally not a good idea anyway due to CPU fast cache thrashing.

3) As Heiko mentioned, now enable Multi-Queue on your busiest interfaces that were showing the highest percentages of RX-DRP. Note that you can't enable Multi-Queue for more than 5 interfaces so choose carefully.

4) Reevaluate the need for the QoS blade, if you are just doing bandwidth limits and no guarantees you should use APCL/URLF Limit actions instead and disable the QoS blade for best performance.
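The core arithmetic behind step 2 can be sketched in a few lines of Python. This assumes a 20-core gateway without SMT (the affinity listing in the original post shows CPU 0 through CPU 19) and that every core not running a firewall instance is available as an SND/IRQ core; the function name is hypothetical.

```python
def corexl_split(total_cores, fw_instances):
    """Return (snd_cores, fw_instances), assuming no SMT and that every
    core not assigned to a firewall instance acts as an SND/IRQ core."""
    if not 0 < fw_instances < total_cores:
        raise ValueError("firewall instances must leave at least one SND core")
    return total_cores - fw_instances, fw_instances

def has_even_snd(split):
    """True when the SND/IRQ core count is even, as step 2 recommends."""
    return split[0] % 2 == 0

before = corexl_split(20, 17)  # the layout described in the original post
after = corexl_split(20, 14)   # the layout recommended in step 2
print(before, has_even_snd(before))
print(after, has_even_snd(after))
```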

Attend my Gateway Performance Optimization R81.20 course

CET (Europe) Timezone Course Scheduled for July 1-2


Hi @Shawn_Fletcher,

Hi @Timothy_Hall,

As Timothy described in point three, I would enable MQ on all relevant 10 Gbit/s interfaces (max. 5 interfaces).

The number of queues is limited by the number of CPU cores and the type of interface driver:

| Network card driver | Speed | Maximal number of RX queues |
|---|---|---|
| igb | 1 Gb | 4 |
| ixgbe | 10 Gb | 16 |
| i40e | 40 Gb | 14 |
| mlx5_core | 40 Gb | 10 |
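As a rough sketch of how the two limits interact, the Python snippet below takes the driver maximums from the table and assumes, as a simplification, that the usable queue count per interface is the smaller of the driver maximum and the number of SND cores. That `min()` rule and the function name are assumptions for illustration, not a documented formula.

```python
# Driver maximums as listed in the table above.
DRIVER_MAX_RX_QUEUES = {"igb": 4, "ixgbe": 16, "i40e": 14, "mlx5_core": 10}

def usable_rx_queues(driver, snd_cores):
    """Assumed rule: queues are capped by both the driver and the SND core count."""
    return min(DRIVER_MAX_RX_QUEUES[driver], snd_cores)

# With 6 SND cores, a 10 Gb ixgbe port is limited by the cores,
# while a 1 Gb igb port is limited by the driver.
print(usable_rx_queues("ixgbe", 6))
print(usable_rx_queues("igb", 6))
```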

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips


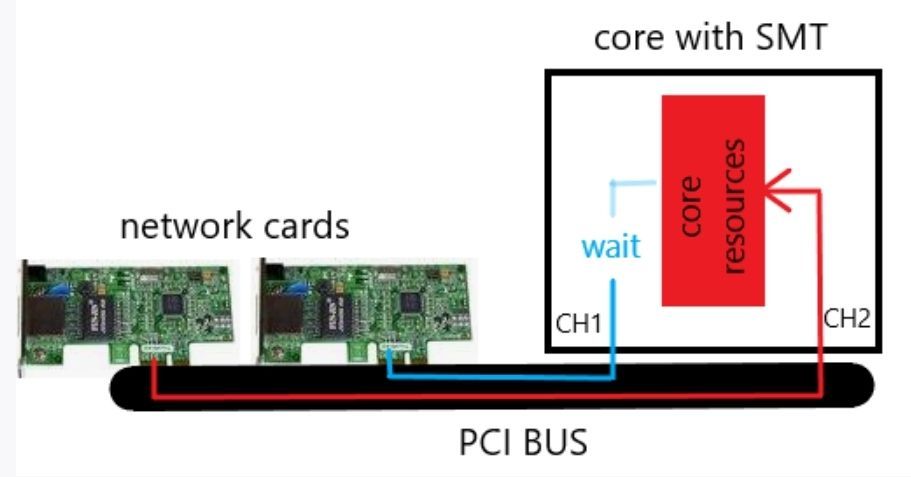

Regarding point zero of @Timothy_Hall's statement: I totally agree with him here.

The 21800 already ships with the SMT feature enabled in the BIOS.

SMT then only needs to be enabled in 'cpconfig' (refer to the "Enable SMT" section).

Check Point always recommends turning on SMT on appliances. However, under certain conditions it can also be disadvantageous (in your case, QoS). From my point of view, the only way to evaluate SMT performance is to test with and without SMT.

The following statement is also often discussed:

SMT can increase message rate for multi-process applications by providing more logical cores. This increases the latency of a single process, because each logical core runs at a lower effective frequency when hyper-threading is enabled. This means interrupt processing of the NICs will be slower, load will be higher, and packet rate will decrease. I think that's why Check Point doesn't recommend SMT in pure firewall and VPN mode. From my point of view, it only accelerates software blades. Therefore I use it only if necessary, when many blades are activated. I'd like to discuss that with Check Point.

A small, simplified example:

This presentation is very simplified and is only meant to illustrate the issue. If SMT channel 2 uses all core resources for I/O operations, channel 1 must wait for the core resources. This can reduce performance with SMT enabled. The same effect can occur with Multi-Queue and SMT enabled. The problem can be fixed by adjusting the Check Point affinity or disabling SMT. What we see here is that many Intel architecture issues can affect SMT and therefore firewall performance.

There are also some cases in which SMT should not be used:

- QoS is not supported with SMT (I saw you are using QoS, so I'd leave it off).

- SMT is not recommended if these blades/features are enabled:

  - Data Loss Prevention blade

  - Anti-Virus in Traditional Mode

  - Using Services with Resources in Firewall policy

- SMT is not recommended for environments that use Hide NAT extensively.

For more on SMT, see my article:

R80.x Performance Tuning Tip – SMT (Hyper Threading)

Or sk93000:

SMT (HyperThreading) Feature Guide

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great article Heiko, a few updates:

> SMT is not recommended for environments that use Hide NAT extensively

This is still stated all over the documentation, but is outdated now for R80.10+ and was related to the old static allocation of available source ports for each Hide NAT address to all the worker cores. Doubling the number of Firewall Workers via SMT would cause half the usual available source ports for each Hide NAT address to be statically allocated to each Firewall Worker core, and they could run out easily causing NAT failures. A new capability was added to dynamically allocate Hide NAT source ports in R77.30 via the fwx_nat_dynamic_port_allocation variable, but it was not enabled by default. Starting in R80.10 gateway if there are 6 or more Firewall Worker Cores configured, by default the fwx_nat_dynamic_port_allocation variable will be automatically set to 1, otherwise it will still be 0.
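The halving effect of static allocation is simple division, sketched below in Python. The pool size and worker counts are hypothetical round numbers chosen only to show the ratio; they are not the actual port range or allocation logic used by the gateway.

```python
def ports_per_worker(pool_size, workers):
    """Static allocation: the source-port pool of one Hide NAT address
    is divided evenly among the Firewall Worker cores."""
    return pool_size // workers

POOL = 50000  # hypothetical pool size per Hide NAT address, for illustration only

without_smt = ports_per_worker(POOL, 14)  # hypothetical: 14 workers
with_smt = ports_per_worker(POOL, 28)     # SMT doubles the worker count
print(without_smt, with_smt)
```

Each worker ends up with roughly half the ports it had before, so a busy worker can exhaust its share while other workers still have free ports; dynamic allocation removes that fixed partition.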

As you observed having SMT/Hyperthreading enabled can actually hurt performance if only the Firewall and VPN blades are enabled since it is likely a large percentage of traffic will be fully accelerated by SecureXL in the SXL path. From my TechTalk (slightly updated for this thread):

- Traffic that is being processed in the Medium Path (PXL) or Firewall Path (F2F) on the Firewall Worker cores tends to benefit most from SMT being enabled, since intensive processing in these paths tends to block often while waiting for an I/O event to occur. When a block occurs in a thread running on a physical core, another thread of execution that is ready can jump on that core while the original thread is still waiting.

- However if more than about 60% of a gateway’s traffic is fully accelerated by SecureXL in the SXL path (not common), SMT tends to hurt overall performance, as operations handled by a SND/IRQ core are completed quickly and do not tend to block often waiting for external events; as such two SND/IRQ instances in two separate threads tend to fight each other for the same physical core under heavy load.

Attend my Gateway Performance Optimization R81.20 course

CET (Europe) Timezone Course Scheduled for July 1-2


Hi @Timothy_Hall & @HeikoAnkenbrand

Want to thank you both for the really detailed info. I've been reading up and think MQ could be a good option.

Just to confirm:

- I do not have a SAM card.

- Unfortunately, the QoS blade is actively used, as we have some VoIP traffic we explicitly prioritize, so at this point SMT will have to stay out of the available options. I think I will hold off on testing SMT until after MQ, ideally so that I don't have to remove QoS.

- The majority of the traffic comes across 4x 10Gb interfaces, so I think it makes sense to activate MQ on those 4. It looks like this is a simple command followed by a reboot to activate, and it is not disruptive in an HA cluster if done one unit at a time.

- First I will set the sim affinity back with sim affinity -a. (FYI, setting the affinity manually and adding a 3rd dispatcher core was a TAC suggestion.)

- I need to further increase my SND cores from 3/17 to 6/14 with cpconfig (if memory serves, this is a brief outage since the cluster won't sync with mismatching core setups?).

One note: netstat -ni is not currently showing an RX-DRP rate > 0.1% (although the gateway was recently reinstalled). The high SND CPU has come up on a couple of TAC cases regarding stability, so I've been paying attention to it for that reason.
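For anyone who wants to check the 0.1% figure mechanically, here is a minimal Python sketch that computes the RX-DRP rate per interface from `netstat -ni` text. It assumes the usual Linux column layout with `RX-OK` and `RX-DRP` headers and takes the rate as RX-DRP divided by RX-OK; the sample counters are made up for illustration and are not from this gateway.

```python
def rx_drop_rates(netstat_output):
    """Return {interface: RX-DRP as a percentage of RX-OK}."""
    rows = [line.split() for line in netstat_output.strip().splitlines()]
    # Locate the header row by name so column positions are not hard-coded.
    header_idx = next(i for i, cols in enumerate(rows) if "RX-OK" in cols)
    header = rows[header_idx]
    ok_col, drp_col = header.index("RX-OK"), header.index("RX-DRP")
    rates = {}
    for cols in rows[header_idx + 1:]:
        if len(cols) <= max(ok_col, drp_col):
            continue  # skip blank or truncated lines
        rx_ok, rx_drp = int(cols[ok_col]), int(cols[drp_col])
        rates[cols[0]] = 100.0 * rx_drp / rx_ok if rx_ok else 0.0
    return rates

# Hypothetical sample output, not taken from the gateway in this thread.
SAMPLE = """
Kernel Interface table
Iface   MTU Met    RX-OK RX-ERR RX-DRP RX-OVR    TX-OK TX-ERR TX-DRP TX-OVR Flg
eth1-01 1500  0  1000000      0    500      0   900000      0      0      0 BMRU
eth1-02 1500  0  2000000      0   4000      0  1800000      0      0      0 BMRU
"""

rates = rx_drop_rates(SAMPLE)
over_threshold = sorted(name for name, pct in rates.items() if pct > 0.1)
print(rates)
print(over_threshold)
```

Because the counters are cumulative since boot, comparing two snapshots taken some time apart gives a better picture than a single reading on a recently reinstalled gateway.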

Thanks very much for taking the time to assist.


Hi @Timothy_Hall,

CUT>>>

> SMT is not recommended for environments that use Hide NAT extensively

This is still stated all over the documentation, but is outdated now for R80.10+ and was related to the old static allocation of available source ports for each Hide NAT address to all the worker cores...

<<<CUT

Thanks @Timothy_Hall for this info. That wasn't 100% known to me either. I'll add that to my article.

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips


Just an update on this: one day into the revised SND setup with the 6/14 split and MQ on the 10Gb interfaces, the CPU load on the SND cores was much better. It still spiked significantly during some periods where packets/sec got up to nearly 400k from the typical 50k-100k during production hours. Any tips to help identify what those bursts are would be great.

I did notice today with netstat -ni that one of my 1Gb interfaces now has an RX-DRP count. I know the maximum was 5 of the 10Gb interfaces for MQ, but am I safe to add additional 1Gb interfaces?

fw ctl affinity -l -r

CPU 0: Mgmt

CPU 1: eth3-01

CPU 2: eth3-02

CPU 3: eth3-03

CPU 4: eth3-04

CPU 5:

CPU 6: fw_13

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 7: fw_12

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 8: fw_11

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 9: fw_10

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 10: fw_9

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 11: fw_8

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 12: fw_7

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 13: fw_6

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 14: fw_5

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 15: fw_4

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 16: fw_3

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 17: fw_2

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 18: fw_1

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

CPU 19: fw_0

in.acapd fgd50 lpd cp_file_convertd scanengine_b rtmd usrchkd rad in.geod mpdaemon in.msd wsdnsd pdpd pepd in.asessiond vpnd fwd cpd cprid

All:

Interface eth1-01: has multi queue enabled

Interface eth1-02: has multi queue enabled

Interface eth1-03: has multi queue enabled

Interface eth1-04: has multi queue enabled

fwaccel stats -s

Accelerated conns/Total conns : 2271/14754 (15%)

Accelerated pkts/Total pkts : 5159177017/5770079738 (89%)

F2Fed pkts/Total pkts : 610902721/5770079738 (10%)

F2V pkts/Total pkts : 46842804/5770079738 (0%)

CPASXL pkts/Total pkts : 4386384160/5770079738 (76%)

PSLXL pkts/Total pkts : 690434477/5770079738 (11%)

CPAS inline pkts/Total pkts : 0/5770079738 (0%)

PSL inline pkts/Total pkts : 0/5770079738 (0%)

QOS inbound pkts/Total pkts : 5554439911/5770079738 (96%)

QOS outbound pkts/Total pkts : 5800977548/5770079738 (100%)

Corrected pkts/Total pkts : 0/5770079738 (0%)
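To compare `fwaccel stats -s` snapshots without eyeballing the rounded percentages, a small Python parser like the one below can help. It is a sketch that assumes each line has the `name : count/total (NN%)` shape seen in this thread; the sample lines are copied from the output above, and the function name is hypothetical.

```python
import re

# Matches lines such as "F2Fed pkts/Total pkts : 610902721/5770079738 (10%)".
LINE = re.compile(r"^(?P<name>.+?)\s*:\s*(?P<num>\d+)/(?P<den>\d+)\s*\(\d+%\)\s*$")

def parse_fwaccel_stats(text):
    """Return {counter name: (count, total)} for every line that matches."""
    stats = {}
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if match:
            stats[match.group("name")] = (int(match.group("num")),
                                          int(match.group("den")))
    return stats

SAMPLE = """
Accelerated conns/Total conns : 2271/14754 (15%)
Accelerated pkts/Total pkts : 5159177017/5770079738 (89%)
F2Fed pkts/Total pkts : 610902721/5770079738 (10%)
"""

stats = parse_fwaccel_stats(SAMPLE)
f2f_count, total_pkts = stats["F2Fed pkts/Total pkts"]
f2f_pct = 100.0 * f2f_count / total_pkts  # unrounded share of F2F packets
print(stats)
print(round(f2f_pct, 1))
```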


How did you resolve this?
