
Re: HP ProLiant DL360 Gen 10 BIOS workload profile


HP ProLiant DL360 Gen 10 BIOS: 4 cores with or without HT on a 16-core system (8 cores without HT)?

It looks like the default is General Power Efficient Compute. Does anyone use General Peak Frequency or General Throughput Compute? I'm considering changing to General Throughput Compute for Gaia R81.20. Also, I don't see an option in the BIOS to turn Intel(R) Turbo Boost Technology off (it's grayed out). Even after I switch to General Throughput Compute, it's still grayed out.

8 Replies


Not sure which of those BIOS profiles we test with.

I believe "Turbo Boost" applies to Hyperthreading...are you wanting to disable that?

You can disable it in cpconfig (at least on Check Point appliances) per sk93000.


It's hard to tell if hyperthreading is recommended for open servers or not, but it looks like it is supported after R80.40.

I'll test General throughput compute for Gaia R81.20 and get back to the community on how it goes.


In the past, there was an issue with licensing on Open Server when HT was enabled (namely we were treating HT cores as real cores from a licensing point of view).

That might have led to the decision to disable it on Open Servers (at least when R80.40 was released).

Believe that issue has been addressed: https://supportcenter.checkpoint.com/supportcenter/portal?eventSubmit_doGoviewsolutiondetails=&solut...

Given it's the default on most Check Point appliances now, it's probably safe to have it enabled in most cases on Open Server as well (with the caveats in sk93000 in mind).


I wonder which would perform better running Gaia R81.20 on an open server with 8 cores (16 with HT turned on): 8 cores with HT (8 are unused/other due to CP licensing) or 4 cores without HT (4 unused/other due to CP licensing)?


SMT/HT will give you some performance advantage if all logical cores are licensed. Mind you, you will need to double the licensed cores in this case. How many cores are allowed by your license?


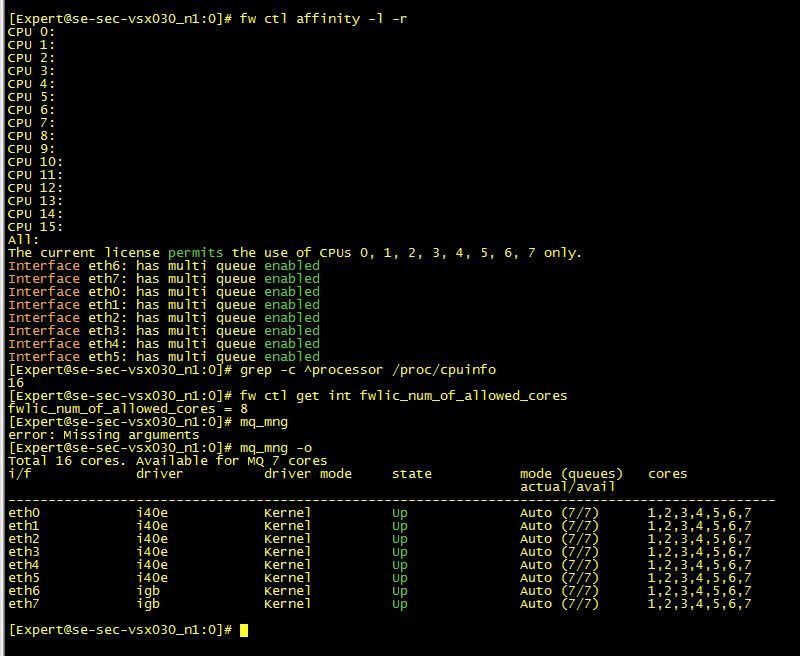

U will run in to some issues.

This is a 16 Core machine with 8 Core licens.

So as you see here CPU 1-7 is assigned to multiQ, so at first look it looks awesome.

Like free performance... Yee!

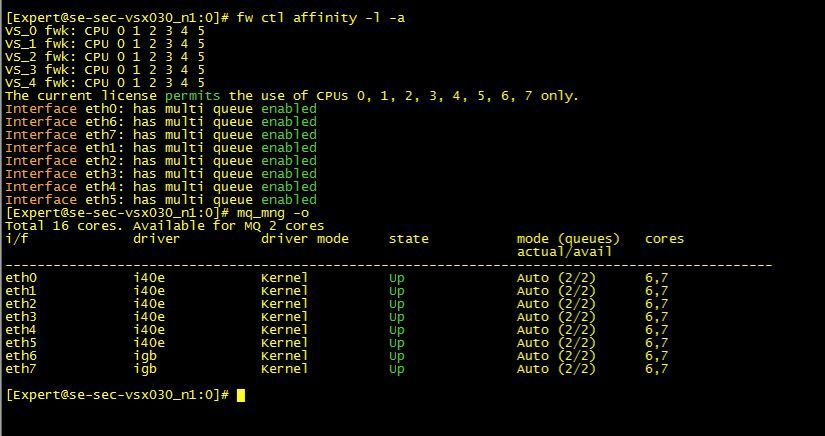

And then you check like the VS allocation.

And here you see the crap then, meaning u only have 1 core for the firewalls.

Fixed with changing the fwk

fw ctl affinity -s -d -fwkall 6 and reboot.

You are not allowed to add 2 x 8 core licenses.

But oyu can ofc change it to a 16 core later if more umf is needed.

Regards

Magnus

https://www.youtube.com/c/MagnusHolmberg-NetSec
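The core split described in the post above can be sketched as simple arithmetic. This is a rough model only: it assumes every licensed core is either an SND/Multi-Queue core or a firewall worker (fwk) core, which the thread does not confirm, and the function name `split_cores` is made up for illustration.

```python
def split_cores(licensed_cores: int, fw_instances: int) -> dict:
    """Split licensed cores between firewall workers and SND/Multi-Queue.

    Assumption (not confirmed in the thread): each licensed core is
    either a firewall worker core or an SND/Multi-Queue core.
    """
    if not 0 < fw_instances < licensed_cores:
        raise ValueError("need at least one core on each side of the split")
    return {"fw_workers": fw_instances, "snd": licensed_cores - fw_instances}


# 8-core license where 7 cores ended up on Multi-Queue: 1 worker core left.
before = split_cores(licensed_cores=8, fw_instances=1)

# Same license after setting 6 fwk instances: 2 cores remain for SND.
after = split_cores(licensed_cores=8, fw_instances=6)

print("before:", before)
print("after: ", after)
```

Under this model the 6/2 split matches the shape of the listing posted later in the thread, which shows six fw instances and "Available for MQ 2 cores" on an 8-core setup.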


Thanks, this looks like multi-queue isn't enabled on some interfaces. How do I do that? I found the command to set an interface to use MQ, but I'm getting an error. Some drivers aren't supported, maybe that's it? Update: yeah, it doesn't look like tg3 is supported for MQ. Well, that explains it; good to know.

fw ctl multik dynamic_dispatching get_mode

Current mode is on

[Expert@gw1:0]# fw ctl affinity -l -a

Interface eth4: CPU 2

Interface eth6: CPU 3

Interface eth7: CPU 4

Interface eth8: CPU 5

Interface eth10: CPU 1

Kernel fw_0: CPU 7

Kernel fw_1: CPU 6

Kernel fw_2: CPU 5

Kernel fw_3: CPU 4

Kernel fw_4: CPU 3

Kernel fw_5: CPU 2

Daemon cprid: CPU 2 3 4 5 6 7

Daemon mpdaemon: CPU 2 3 4 5 6 7

Daemon fwd: CPU 2 3 4 5 6 7

Daemon rad: CPU 2 3 4 5 6 7

Daemon lpd: CPU 2 3 4 5 6 7

Daemon core_uploader: CPU 2 3 4 5 6 7

Daemon wsdnsd: CPU 2 3 4 5 6 7

Daemon usrchkd: CPU 2 3 4 5 6 7

Daemon in.asessiond: CPU 2 3 4 5 6 7

Daemon pepd: CPU 2 3 4 5 6 7

Daemon pdpd: CPU 2 3 4 5 6 7

Daemon in.acapd: CPU 2 3 4 5 6 7

Daemon cp_file_convertd: CPU 2 3 4 5 6 7

Daemon iked: CPU 2 3 4 5 6 7

Daemon vpnd: CPU 2 3 4 5 6 7

Daemon cprid: CPU 2 3 4 5 6 7

Daemon cpd: CPU 2 3 4 5 6 7

Interface eth0: has multi queue enabled

Interface eth1: has multi queue enabled

Interface eth2: has multi queue enabled

Interface eth3: has multi queue enabled

[Expert@gw1:0]# mq_mng -o

Total 8 cores. Available for MQ 2 cores

| i/f | driver | driver mode | state | mode (queues actual/avail) | cores |
|---|---|---|---|---|---|
| eth0 | i40e | Kernel | Up | Auto (2/2) | 0,1 |
| eth1 | i40e | Kernel | Up | Auto (2/2) | 0,1 |
| eth2 | i40e | Kernel | Up | Auto (2/2) | 0,1 |
| eth3 | i40e | Kernel | Up | Auto (2/2) | 0,1 |

[Expert@gw1:0]# fw ctl affinity -l -r

CPU 0:

CPU 1: eth10

CPU 2: eth4

fw_5

cprid mpdaemon fwd rad lpd core_uploader wsdnsd usrchkd in.asessiond pepd pdpd in.acapd cp_file_convertd iked vpnd cprid cpd

CPU 3: eth6

fw_4

cprid mpdaemon fwd rad lpd core_uploader wsdnsd usrchkd in.asessiond pepd pdpd in.acapd cp_file_convertd iked vpnd cprid cpd

CPU 4: eth7

fw_3

cprid mpdaemon fwd rad lpd core_uploader wsdnsd usrchkd in.asessiond pepd pdpd in.acapd cp_file_convertd iked vpnd cprid cpd

CPU 5: eth8

fw_2

cprid mpdaemon fwd rad lpd core_uploader wsdnsd usrchkd in.asessiond pepd pdpd in.acapd cp_file_convertd iked vpnd cprid cpd

CPU 6: fw_1

cprid mpdaemon fwd rad lpd core_uploader wsdnsd usrchkd in.asessiond pepd pdpd in.acapd cp_file_convertd iked vpnd cprid cpd

CPU 7: fw_0

cprid mpdaemon fwd rad lpd core_uploader wsdnsd usrchkd in.asessiond pepd pdpd in.acapd cp_file_convertd iked vpnd cprid cpd

All:

Interface eth0: has multi queue enabled

Interface eth1: has multi queue enabled

Interface eth2: has multi queue enabled

Interface eth3: has multi queue enabled
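To make the listing above easier to read, here is a small sketch that parses a few lines in the `fw ctl affinity -l -a` format and reports which CPUs carry both an interface and a firewall kernel instance. The sample text is copied from the output above; the regular expression and the helper names (`parse_affinity`, `shared_cpus`) are assumptions for illustration, not part of any Check Point tooling.

```python
import re
from collections import defaultdict

# A subset of the `fw ctl affinity -l -a` lines posted above.
SAMPLE = """\
Interface eth4: CPU 2
Interface eth6: CPU 3
Interface eth7: CPU 4
Interface eth8: CPU 5
Interface eth10: CPU 1
Kernel fw_0: CPU 7
Kernel fw_1: CPU 6
Kernel fw_2: CPU 5
Kernel fw_3: CPU 4
Kernel fw_4: CPU 3
Kernel fw_5: CPU 2
Interface eth0: has multi queue enabled
"""

# Matches "Interface <name>: CPU <n ...>" and "Kernel <name>: CPU <n ...>".
# Multi-queue lines ("has multi queue enabled") deliberately do not match.
LINE_RE = re.compile(r"^(Interface|Kernel)\s+(\S+):\s+CPU\s+([\d ]+)$")


def parse_affinity(text):
    """Return {cpu: {"Interface": [...], "Kernel": [...]}}."""
    by_cpu = defaultdict(lambda: {"Interface": [], "Kernel": []})
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue
        kind, name, cpus = match.groups()
        for cpu in cpus.split():
            by_cpu[int(cpu)][kind].append(name)
    return dict(by_cpu)


def shared_cpus(by_cpu):
    """CPUs that have both an interface and a firewall instance pinned."""
    return {
        cpu: entry
        for cpu, entry in sorted(by_cpu.items())
        if entry["Interface"] and entry["Kernel"]
    }


overlap = shared_cpus(parse_affinity(SAMPLE))
for cpu, entry in overlap.items():
    print(f"CPU {cpu}: interfaces={entry['Interface']} kernels={entry['Kernel']}")
```

In this sample the interfaces without multi-queue (eth4, eth6, eth7, eth8) share CPUs 2-5 with fw_5 through fw_2. Whether that overlap matters in practice depends on how much traffic those interfaces carry.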


In summary, I don't think this will be effective if those interfaces (tg3 driver) are NOT set for MQ:

fw ctl affinity -s -d -fwkall 6 and reboot.

Also, is there a way to revert that command if it doesn't work?

See attached. Do I get better performance with HT and 16 cores (12 cores in "other"), or is there a more optimal configuration?

Also, my understanding now is that the affinity of each interface should be set to one of the SND CPUs, using sim affinity -s. In the case of 4 cores, you don't have to worry about it, since there is only one SND. The SND will then allocate to one of the FW cores.

On an 8-core (16 with HT) system with a 4-core license, enabling HT doesn't provide any benefit, only confusion. 4 cores are used, see attached. My theory is that if I don't use HT, the 4 cores that are used will be faster than if I split them in 2 with HT.
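The theory in the last paragraph can be checked with a small counting sketch. It assumes the license simply covers the first N logical CPUs, and it models two possible ways the OS might number Hyper-Threading siblings. Neither assumption is confirmed in this thread, and `physical_cores_used` is a hypothetical helper.

```python
def physical_cores_used(licensed_logical: int, physical_cores: int,
                        ht: bool, siblings_adjacent: bool = True) -> int:
    """Count distinct physical cores behind the first N licensed logical CPUs.

    Assumptions (not confirmed in the thread):
    - the license covers logical CPUs 0 .. licensed_logical - 1;
    - with HT, sibling threads are numbered either adjacently
      (CPU 0 and 1 share a core) or split (CPU i and i + physical_cores
      share a core).
    """
    total_logical = physical_cores * (2 if ht else 1)
    n = min(licensed_logical, total_logical)
    if not ht:
        return n
    if siblings_adjacent:
        cores = {cpu // 2 for cpu in range(n)}
    else:
        cores = {cpu % physical_cores for cpu in range(n)}
    return len(cores)


# 8 physical cores, 4-core license, as in the post above.
print(physical_cores_used(4, 8, ht=False))                          # HT off
print(physical_cores_used(4, 8, ht=True, siblings_adjacent=True))   # HT on, adjacent siblings
print(physical_cores_used(4, 8, ht=True, siblings_adjacent=False))  # HT on, split siblings
```

With adjacent sibling numbering, the 4 licensed logical CPUs land on only 2 physical cores, which is the case the theory describes. With split numbering they still land on 4 physical cores, so the outcome depends on how the system enumerates its CPUs.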
