AWS Transit VPC vs Transit Gateway use cases and limitations

Please clarify the differences and highlight the advantages and limitations of these two solutions.

If I recall correctly, Transit VPC had a bandwidth limitation for each VPC connected to it. I am not sure whether the same is true for Transit Gateway, since it accommodates auto-scaling, but there are still limitations on VPN connectivity to spokes.

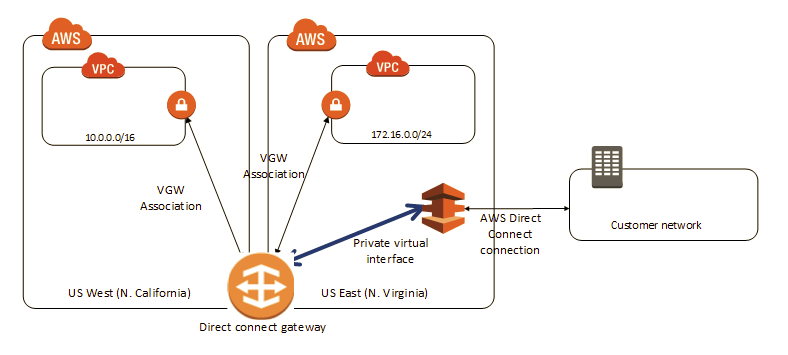

Also, I am having trouble figuring out how either solution would fit into a multi-region implementation with Direct Connect gateway, as depicted here:

I am also unsure how to connect a VPC containing public-facing web servers protected by AWS WAF to the CP Transit Gateway for internal communication only (i.e., routing access to the back-end servers and the Internet via CP, but preserving inbound traffic from the Internet to the LBs and the return traffic).

Thank you,

Vladimir


Hello Vladimir,

We're currently migrating from Transit VPC to TGW. As you mentioned, Transit VPC has a speed limitation because every spoke VPC connects to the Transit VPC over VPN, which is limited to 1.25 Gbps. There's a tool/script called autoprovision, and it's one of the ugliest things I've ever seen. Adding or removing a VPC works fine, but if you make a small mistake or a major change, the script deletes all the VPC connections and then re-creates them! Please keep in mind that this process is irreversible and takes a very long time if you have many VPCs attached. We had about 40 VPCs connected to the Transit VPC, and I once made a typo that caused the script to delete and then re-add all of them. The operation took several hours - I repeat - several hours! During that time those VPCs weren't reachable!

We now have TGW in place and are migrating VPCs one by one. We're planning to implement the so-called Geo Cluster solution (R80.40 Active-Active CloudGuards) and route VPC-to-VPC and Internet traffic through it. There's a BGP session between TGW and on-prem via Direct Connect for the rest of the traffic to and from AWS.

Meanwhile, you can watch the videos below, which I think are useful.

https://www.youtube.com/watch?v=VA4Zag-ohTo&list=LLwHXoodgydeZSj-UkVzcxsg&index=6

https://www.youtube.com/watch?v=yqTu_T3AT9Y

https://www.youtube.com/watch?v=PcpcGNvjZ2c

https://www.youtube.com/watch?v=13W8eBQlU0M


@mk1 , Thank you.

I'd like to ask you a few more questions, if it is not too much trouble, before I dive into the videos:

Can you clarify if the autoprovision tool is specific to the Transit VPC or if it is still present in TGW?

What other gotchas have you encountered in TGW that I should be keeping an eye on?

In TGW, do we have a means to limit the number of gateways in autoscaling groups of inbound and outbound instances?

Are we specifying the instance types for GWs in TGW, or is it hardcoded?

Regards,

Vladimir


Hello Vladimir,

The autoprovision tool is used only for Transit VPC. You create a new role in IAM and the tool uses it to make changes. Everything happens automatically. How does it work? When you create a new VPC and want it to be part of the Transit VPC, you must add a tag to that VPC, for instance x-chkp-vpn. Autoprovision runs on the management station responsible for the CloudGuards. When the tool notices a new VPC with the proper tag, it creates a new VGW and IPsec (VTI) tunnels to the CloudGuards, together with a BGP session. Everything works fine until you have to troubleshoot something or make a major change (as I mentioned before). In my opinion, Transit VPC was a good option before TGW existed.
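To make the tag-driven behaviour a bit more concrete, here is a rough Python sketch of how a tag-based reconcile step could work. This is not the actual autoprovision code: only the tag name x-chkp-vpn comes from the description above, while the function name `plan_changes`, the VPC dictionaries, and the VPC IDs are hypothetical. It compares the VPCs that carry the tag with the VPCs that already have VPN connections and works out what to add and what to remove.

```python
# Illustrative sketch only; not the real autoprovision tool.
TAG_KEY = "x-chkp-vpn"  # tag name from the post; all other names are hypothetical


def plan_changes(vpcs, connected, tag_key=TAG_KEY):
    """Return (to_add, to_remove) as sorted lists of VPC IDs.

    vpcs:      list of dicts such as {"id": "vpc-a", "tags": {"x-chkp-vpn": "hub"}}
    connected: set of VPC IDs that already have VPN connections to the hub
    """
    tagged = {v["id"] for v in vpcs if tag_key in v.get("tags", {})}
    to_add = sorted(tagged - connected)      # tagged but not yet connected
    to_remove = sorted(connected - tagged)   # connected but no longer tagged
    return to_add, to_remove


if __name__ == "__main__":
    vpcs = [
        {"id": "vpc-a", "tags": {"x-chkp-vpn": "hub"}},
        {"id": "vpc-b", "tags": {}},
        {"id": "vpc-c", "tags": {"x-chkp-vpn": "hub"}},
    ]
    # Normal run: one new tagged VPC, one stale connection.
    print(plan_changes(vpcs, {"vpc-a", "vpc-x"}))
    # Hypothetical typo in the configured tag key: nothing matches,
    # so every existing connection is scheduled for removal.
    print(plan_changes(vpcs, {"vpc-a", "vpc-c"}, tag_key="x-chkp-vpm"))
```

The second call is only a guess at how a small mistake could cascade in a reconcile loop of this kind: once the selector matches nothing, everything that is currently connected looks stale. The real tool may behave differently.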

We're still at an early stage of implementing TGW. The main idea is to have two separate route tables: one is used only for the VPCs that have to talk to each other directly (without the FW), and the other is the so-called default one, where the default gateway is the CloudGuard cluster (Active-Active). The idea behind that cluster is to have one CloudGuard in one AZ and another in a different AZ. Traffic is directed to an ENI attached to the active member, and in case of failure this ENI is detached from the primary CloudGuard and moved to the other one. All of that should be configured by the CloudFormation template provided by Check Point. Both members are configured as Active-Active, but only one of them handles the traffic.
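The two-route-table idea can be sketched in a few lines of Python. This is a toy model, not AWS API code: the CIDRs, attachment names, and the `lookup` helper are made up for illustration. Each TGW attachment is associated with one route table, and the destination is matched against that table by longest prefix, so the table a VPC is associated with decides whether its traffic goes straight to the peer VPC or to the firewall.

```python
import ipaddress


def lookup(route_table, dst_ip):
    """Longest-prefix match: return the target of the most specific matching route."""
    dst = ipaddress.ip_address(dst_ip)
    best = None  # (network, target) of the most specific match so far
    for cidr, target in route_table.items():
        net = ipaddress.ip_network(cidr)
        if dst in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, target)
    return best[1] if best else None


# Hypothetical route table for VPCs that may talk to each other directly (no FW).
DIRECT_RT = {
    "10.1.0.0/16": "attach-vpc-a",
    "10.2.0.0/16": "attach-vpc-b",
}

# Hypothetical default route table: everything goes to the CloudGuard cluster.
DEFAULT_RT = {
    "0.0.0.0/0": "attach-cloudguard",
}

if __name__ == "__main__":
    # Same destination, different outcome depending on the associated table.
    print(lookup(DIRECT_RT, "10.2.5.9"))
    print(lookup(DEFAULT_RT, "10.2.5.9"))
    # The direct table has no default route, so Internet-bound traffic has no match.
    print(lookup(DIRECT_RT, "8.8.8.8"))
```

In this model a VPC associated with the direct table reaches 10.2.5.9 through the VPC B attachment, while a VPC associated with the default table is sent to the firewall attachment for the same destination.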

Our cloud architect wrote Terraform scripts, so when we need to add new VPCs or remove old ones, we just add them to the script and run it. But even without an automation script, adding a new VPC to TGW is very simple: you just create a new TGW attachment and add it to the TGW.

What other gotchas have you encountered in TGW that I should be keeping an eye on?

There are some limitations on the maximum number of BGP routes. Keep that in mind if you have to exchange many routes between TGW and on-prem, in either direction. Also, the Geo Cluster solution doesn't support IPsec (yes, I know it's very strange).

In TGW, do we have a means to limit the number of gateways in autoscaling groups of inbound and outbound instances?

Currently we don't use autoscaling groups, because one active firewall could take the load.

Are we specifying the instance types for GWs in TGW, or is it hardcoded?

I'm not sure if I fully understand your question, but the recommended minimum is a c5.xlarge instance. If you think it's not enough for your needs, you can always use something bigger.

Please find the attached screenshots. They are from a very good TGW presentation that I found on YouTube. One of them shows the solution I mentioned with two route tables in TGW.

I hope this gives you more information. Let me know if anything I explained is not clear enough.


@mk1 Thank you so much for taking the time to answer my questions!

Regarding the Active-Active cluster, though: you are essentially forgoing the suggested autoscaling group in favor of an HA cluster (is it a load-sharing cluster used in HA mode?). What I do not yet understand is the load-balancing mechanism in the blueprint for the CP TGW between all GWs in the autoscaling group. Since each is connected to AWS TGW via VPN and there is a background BGP routing exchange, it implies that the gateways are not clustered in any way, but that there is some LB logic that I am missing.


In regards to the Active-Active cluster though, you are essentially foregoing the suggested autoscaling group in favor of HA cluster

Yes. In our AWS environment one firewall can take the load so we're fine with it.

is it a load-sharing cluster used in HA mode?

Yes. It's configured as Active-Active, but only one of the members processes the traffic. At least you have sync between GWs in different AZs. With Transit VPC, if your GWs are in different AZs you have to rely on BGP only, so you don't have sync in that case.

What I do not yet understand is the load-balancing mechanism in the blueprint for the CP TGW between all GWs in the autoscaling group. Since each is connected to AWS TGW via VPN and there is a background BGP routing exchange, it implies that the gateways are not clustered in any way, but that there is some LB logic that I am missing.

I'm not sure I understand your question. With Transit VPC we relied on BGP, but in TGW you have BGP only for Direct Connect (between TGW and on-prem). All the rest is routed internally inside TGW. We do not rely on autoscaling groups, so I suppose in your case you may need something different.


By this: "What I do not yet understand is the load-balancing mechanism in the blueprint for the CP TGW between all GWs the autoscaling group. Since each is connected to AWS TGW via VPN and there is a background BGP routing exchange, it implies that the gateways are not clustered in any way, but that there is some LB logic that I am missing.

I'm not sure I can understand your question. With TransitVPC we relied on BGP, but in TGW you have BGP only for DirectConnect (between TGW and on-prem). All the rest is routed internally inside TGW. We do not rely on autoscaling groups, so I suppose in your case you can need something different."

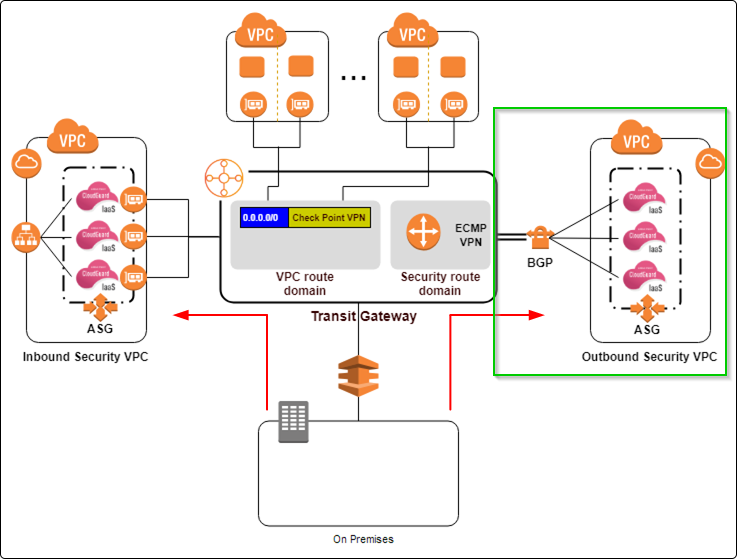

I was referring to Check Point's reference architecture depicted here:

Specifically, the section on the right, which is responsible for VPC-to-VPC inspection as well as (if I understand it correctly) for inspection of the traffic between VPCs and Direct Connect.

As you can see, it shows VPN with BGP to an ASG of the CP gateways. What I do not understand in this picture is how the load balancing between all of those gateways happens.

@PhoneBoy , can we get some input from CP Cloud Experts, if possible?