NTP clock sync not working on CloudGuard R80.10 HA

Hi All...

I have successfully deployed a Check Point CloudGuard HA cluster running R80.10, following the Check Point CloudGuard IaaS High Availability for Microsoft Azure R80.10 and above Deployment Guide.

Internet ---- eth0 (FW1 172.19.16.20/28, FW2 172.19.16.21/28) FW1/FW2 eth1 (FW1 172.19.16.37/28, FW2 172.19.16.38/28) ---- Inside (towards on-premise NTP server)

As per the template, the firewalls are deployed with a backend load balancer with an IP of 172.19.16.36. No frontend load balancer has been deployed.

My NTP server sits on the on-premise network with IP address 10.64.17.10.

I have added a route for the NTP server on the firewall pointing towards 172.19.16.33 (the first IP on the inside eth1 subnet).

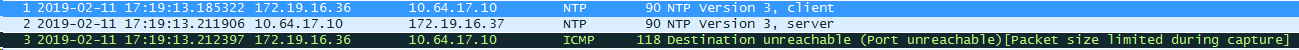
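As a quick sanity check of the addressing above, here is a minimal sketch using Python's standard `ipaddress` module. It only confirms which /28 each address falls in and that 172.19.16.33 is the first usable host on the eth1 subnet (Azure normally uses that address as the subnet's default gateway). The variable names are illustrative and not taken from the deployment.

```python
import ipaddress

# Interface addresses as given in the post (FW1 only).
fw1_eth0 = ipaddress.ip_interface("172.19.16.20/28")
fw1_eth1 = ipaddress.ip_interface("172.19.16.37/28")

backend_lb = ipaddress.ip_address("172.19.16.36")
next_hop = ipaddress.ip_address("172.19.16.33")
ntp_server = ipaddress.ip_address("10.64.17.10")

outside_net = fw1_eth0.network   # subnet behind eth0
inside_net = fw1_eth1.network    # subnet behind eth1

# First usable host address on the inside subnet.
first_inside_host = next(inside_net.hosts())

print("eth0 subnet:", outside_net)
print("eth1 subnet:", inside_net)
print("next hop is first inside host:", next_hop == first_inside_host)
print("backend LB on eth1 subnet:", backend_lb in inside_net)
print("NTP server directly connected:",
      ntp_server in inside_net or ntp_server in outside_net)
```

The NTP server is not on either connected subnet, so a static route (or the default route) decides which interface the request leaves from.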

However, I see strange behaviour: the initial NTP packet towards the NTP server is sourced from the backend load balancer IP address (172.19.16.36). The NTP server then replies to the FW1 IP address (.37), and this is followed by an ICMP unreachable sourced from the backend load balancer IP to the NTP server.

As soon as I remove the route via the eth1 interface (forcing traffic to go out via the default route on the eth0 interface), I can see bi-directional comms between the FW eth0 interface and the NTP server:

15:00:13.292177 IP 172.19.16.20.entextmed > 10.64.17.10.ntp: NTPv3, Client, length 48

15:00:13.317949 IP 10.64.17.10.ntp > 172.19.16.20.entextmed: NTPv3, Server, length 48
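To make the difference between the two cases concrete, the sketch below parses tcpdump lines in the format shown above and checks whether a reply mirrors its request (source and destination swapped). It is a rough illustration only: the regex assumes the one-line IPv4 format above, and `parse_flow` and `is_symmetric` are hypothetical helper names.

```python
import re

# Matches the "IP src.port > dst.port:" part of a one-line tcpdump record.
LINE_RE = re.compile(
    r"IP (\d+\.\d+\.\d+\.\d+)\.(\S+) > (\d+\.\d+\.\d+\.\d+)\.(\S+):"
)

def parse_flow(line):
    """Return (src_ip, src_port, dst_ip, dst_port) from a tcpdump line."""
    m = LINE_RE.search(line)
    if m is None:
        raise ValueError("unrecognised tcpdump line: %r" % line)
    return m.groups()

def is_symmetric(request, reply):
    """True if the reply goes back to exactly where the request came from."""
    src_ip, src_port, dst_ip, dst_port = request
    return reply == (dst_ip, dst_port, src_ip, src_port)

# The two lines captured on eth0 in the post.
req = parse_flow("15:00:13.292177 IP 172.19.16.20.entextmed > 10.64.17.10.ntp: NTPv3, Client, length 48")
rep = parse_flow("15:00:13.317949 IP 10.64.17.10.ntp > 172.19.16.20.entextmed: NTPv3, Server, length 48")
print("eth0 exchange symmetric:", is_symmetric(req, rep))
```

By contrast, the eth1 behaviour described earlier (request sourced from .36, reply addressed to .37) would fail this check, which fits the ICMP unreachable seen afterwards.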

However, even with these bi-directional comms, the output of show ntp current displays:

No server has yet to be synchronized
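One possibility worth ruling out (an assumption, not a diagnosis) is that the server's replies arrive but are not acceptable to the client, for example because the server reports itself as unsynchronized. The sketch below decodes the first two bytes of a 48-byte NTP packet, which carry the leap indicator, version, mode and stratum, so the reply in the capture can be checked by hand. `ntp_header` and `looks_usable` are hypothetical names, and the sample packets are constructed for illustration, not taken from the attached captures.

```python
def ntp_header(packet):
    """Decode leap indicator, version, mode and stratum from an NTP packet."""
    if len(packet) < 48:
        raise ValueError("NTP packets are at least 48 bytes")
    first = packet[0]
    return {
        "leap": first >> 6,              # 3 means clock not synchronized
        "version": (first >> 3) & 0x7,
        "mode": first & 0x7,             # 3 = client, 4 = server
        "stratum": packet[1],            # 0 = unspecified, 16 = unsynchronized
    }

def looks_usable(header):
    """Rough check that a server reply could be used for synchronization."""
    return (
        header["mode"] == 4
        and header["leap"] != 3
        and 1 <= header["stratum"] <= 15
    )

# Constructed examples: a healthy NTPv3 stratum-2 reply and an unsynchronized one.
good = bytes([(0 << 6) | (3 << 3) | 4, 2]) + bytes(46)
bad = bytes([(3 << 6) | (3 << 3) | 4, 0]) + bytes(46)
print(ntp_header(good), looks_usable(good_hdr := ntp_header(good)))
print(ntp_header(bad), looks_usable(ntp_header(bad)))
```

In Wireshark the same fields appear as Leap Indicator, Version, Mode and Peer Clock Stratum in the NTP dissector.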

I have attached Wireshark captures from both the eth0 and eth1 interfaces.

The end goal here is to get NTP (and all other comms to the on-premise network) working via the inside interface.

Any ideas?
